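Installing KubeSphere on Kunpeng arm64

Official reference: https://kubesphere.io/zh/docs/quick-start/minimal-kubesphere-on-k8s/

Minimal installation of KubeSphere on Kubernetes

Prerequisites

Official reference: https://kubesphere.io/zh/docs/installing-on-kubernetes/introduction/prerequisites/

- To install KubeSphere 3.2.1 on Kubernetes, your Kubernetes version must be 1.19.x, 1.20.x, 1.21.x, or 1.22.x (experimental support).

- Make sure your machine meets the minimum hardware requirements: CPU > 1 core, memory > 2 GB.

- Before installing, a default StorageClass must be configured in the Kubernetes cluster.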

uname -a

The architecture is reported as follows:

Linux localhost.localdomain 4.14.0-115.el7a.0.1.aarch64 #1 SMP Sun Nov 25 20:54:21 UTC 2018 aarch64 aarch64 aarch64 GNU/Linux

kubectl version

The version is reported as follows:

Client Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean", BuildDate:"2020-10-14T12:50:19Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"linux/arm64"}

Server Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean", BuildDate:"2020-10-14T12:41:49Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"linux/arm64"}

free -g

Memory is reported as follows:

              total        used        free      shared  buff/cache   available
Mem:            127          48          43           1          34          57
Swap:             0           0           0

kubectl get sc

The default StorageClass is reported as follows:

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

glusterfs (default) cluster.local/nfs-client-nfs-client-provisioner Delete Immediate true 24h

nfs-storage (default) k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 23h
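
The listing above already shows two classes marked (default), and this is what later blocks the openldap PVC. As a quick pre-flight check, here is a minimal sketch that counts the default-marked entries in the output of kubectl get sc. The helper name count_default_sc is made up for this example, and it only does a plain text match on the "(default)" marker.

```shell
#!/bin/sh
# count_default_sc (hypothetical helper): read `kubectl get sc` output on
# stdin and print how many StorageClasses carry the "(default)" marker.
count_default_sc() {
    # grep -c prints the number of matching lines; it exits non-zero when
    # the count is 0, so `|| true` keeps the function's exit status clean.
    grep -c '(default)' || true
}
```

Usage: kubectl get sc | count_default_sc. Any result other than 1 is worth fixing before running the installer.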

Deploying KubeSphere

Once your machine meets the prerequisites, follow the steps below to install KubeSphere.

1 Run the following commands to start the installation:

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

2 Check the installation log:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

The following error is reported:

2022-02-23T16:02:30+08:00 INFO : shell-operator latest

2022-02-23T16:02:30+08:00 INFO : HTTP SERVER Listening on 0.0.0.0:9115

2022-02-23T16:02:30+08:00 INFO : Use temporary dir: /tmp/shell-operator

2022-02-23T16:02:30+08:00 INFO : Initialize hooks manager ...

2022-02-23T16:02:30+08:00 INFO : Search and load hooks ...

2022-02-23T16:02:30+08:00 INFO : Load hook config from '/hooks/kubesphere/installRunner.py'

2022-02-23T16:02:31+08:00 INFO : Load hook config from '/hooks/kubesphere/schedule.sh'

2022-02-23T16:02:31+08:00 INFO : Initializing schedule manager ...

2022-02-23T16:02:31+08:00 INFO : KUBE Init Kubernetes client

2022-02-23T16:02:31+08:00 INFO : KUBE-INIT Kubernetes client is configured successfully

2022-02-23T16:02:31+08:00 INFO : MAIN: run main loop

2022-02-23T16:02:31+08:00 INFO : MAIN: add onStartup tasks

2022-02-23T16:02:31+08:00 INFO : QUEUE add all HookRun@OnStartup

2022-02-23T16:02:31+08:00 INFO : Running schedule manager ...

2022-02-23T16:02:31+08:00 INFO : MSTOR Create new metric shell_operator_live_ticks

2022-02-23T16:02:31+08:00 INFO : MSTOR Create new metric shell_operator_tasks_queue_length

2022-02-23T16:02:31+08:00 ERROR : error getting GVR for kind 'ClusterConfiguration': Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

2022-02-23T16:02:31+08:00 ERROR : Enable kube events for hooks error: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

2022-02-23T16:02:34+08:00 INFO : TASK_RUN Exit: program halts.

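The cause is that Kubernetes does not expose port 8080 by default.

Opening port 8080

Port 8080 is unreachable, so it has to be opened on the Kubernetes side. See: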

https://www.cnblogs.com/liuxingxing/p/13399729.html

https://blog.csdn.net/qq_29274865/article/details/108953259

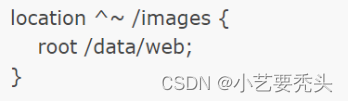

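Go to the manifests directory and edit kube-apiserver.yaml:

cd /etc/kubernetes/manifests/

vim kube-apiserver.yaml

Add:

- --insecure-port=8080

- --insecure-bind-address=0.0.0.0

Restart the apiserver:

docker restart <apiserver container ID>

Re-run the commands above. Delete the old resources first, then apply kubesphere-installer.yaml before cluster-configuration.yaml, because the installer manifest defines the ClusterConfiguration resource type: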

kubectl delete -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

kubectl delete -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

3 Use kubectl get pod --all-namespaces to check whether all Pods in the KubeSphere namespaces are running normally. If they are, the console port (30880 by default) can be checked next:

kubectl get pod --all-namespaces

The installer Pod shows Error:

kubesphere-system ks-installer-d8b656fb4-gb2qg 0/1 Error 0 27s

Check the log:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

The log shows the following error:

standard_init_linux.go:228: exec user process caused: exec format error

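The cause is an architecture mismatch: the image is not built for arm64, so its binary cannot run on this node.

https://hub.docker.com/

Search Docker Hub to check whether the image has an arm64 build.

There is no arm64 build of the official image. After searching around, I found kubespheredev/ks-installer:v3.0.0-arm64, so let's try it.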
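
The Docker Hub search above can also be done from the command line. The following is a rough sketch, not a definitive check: the function names are made up for this example, image_supports_arch relies on docker manifest inspect (which needs registry access and, on older Docker CLI versions, may require experimental features to be enabled), and it simply greps the JSON output for the architecture field.

```shell
#!/bin/sh
# docker_arch (hypothetical helper): map `uname -m` output to the
# architecture name that image manifests use.
docker_arch() {
    case "$1" in
        aarch64|arm64) echo arm64 ;;
        x86_64|amd64)  echo amd64 ;;
        *)             echo "$1" ;;   # pass unknown values through unchanged
    esac
}

# image_supports_arch IMAGE ARCH (hypothetical helper): succeed if the
# manifest data reported for IMAGE mentions ARCH. --verbose also prints
# platform data for single-architecture images.
image_supports_arch() {
    docker manifest inspect --verbose "$1" 2>/dev/null |
        grep -Eq "\"architecture\": *\"$2\""
}
```

Usage: image_supports_arch kubesphere/ks-installer:v3.2.1 "$(docker_arch "$(uname -m)")" && echo ok || echo "no matching build".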

kubectl delete -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

kubectl delete -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

Pull the image:

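docker pull kubespheredev/ks-installer:v3.0.0-arm64

Then edit the Deployment in Rancher, or download the YAML file and edit it.

My k8s master node is arm64, and there is no official arm64 image for kubesphere/ks-installer, so:

Change kubesphere/ks-installer:v3.2.1 to kubespheredev/ks-installer:v3.0.0-arm64

Set the node selector so that the Pod is scheduled on the master node.

The deployment then fails with the following error: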

TASK [common : Kubesphere | Creating common component manifests] ***************

failed: [localhost] (item={'path': 'etcd', 'file': 'etcd.yaml'}) => {"ansible_loop_var": "item", "changed": false, "item": {"file": "etcd.yaml", "path": "etcd"}, "msg": "AnsibleUndefinedVariable: 'dict object' has no attribute 'etcdVolumeSize'"}

failed: [localhost] (item={'name': 'mysql', 'file': 'mysql.yaml'}) => {"ansible_loop_var": "item", "changed": false, "item": {"file": "mysql.yaml", "name": "mysql"}, "msg": "AnsibleUndefinedVariable: 'dict object' has no attribute 'mysqlVolumeSize'"}

failed: [localhost] (item={'path': 'redis', 'file': 'redis.yaml'}) => {"ansible_loop_var": "item", "changed": false, "item": {"file": "redis.yaml", "path": "redis"}, "msg": "AnsibleUndefinedVariable: 'dict object' has no attribute 'redisVolumSize'"}

The cause is a version mismatch: the manifests are v3.2.1, but the image is v3.0.0-arm64.

Next, try the v3.0.0 manifests.

Using v3.0.0

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/cluster-configuration.yaml

Then edit the Deployment in Rancher, or download the v3.0.0 YAML files and edit them:

kubesphere-installer.yaml

cluster-configuration.yaml

Changes to kubesphere-installer.yaml:

My k8s master node is arm64, and there is no official arm64 image for kubesphere/ks-installer, so:

Change kubesphere/ks-installer:v3.0.0 to kubespheredev/ks-installer:v3.0.0-arm64

Set the node selector so that the Pod is scheduled on the master node.

Changes to cluster-configuration.yaml:

endpointIps: 192.168.xxx.xx (change this to the IP of the k8s master node)
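
The image change in kubesphere-installer.yaml can be scripted instead of edited by hand. This is a minimal sketch, assuming the v3.0.0 file has been downloaded locally and that the image line reads image: kubesphere/ks-installer:v3.0.0; the function name is made up for this example. The node selector and the endpointIps value still need to be set manually.

```shell
#!/bin/sh
# use_arm64_installer FILE (hypothetical helper): rewrite the ks-installer
# image reference in a local copy of kubesphere-installer.yaml.
use_arm64_installer() {
    # Write to a temporary file and move it back, which avoids relying on
    # the non-portable `sed -i` flag.
    sed 's#image: kubesphere/ks-installer:v3\.0\.0#image: kubespheredev/ks-installer:v3.0.0-arm64#' "$1" > "$1.tmp" &&
        mv "$1.tmp" "$1"
}
```

Usage: use_arm64_installer kubesphere-installer.yaml, then kubectl apply -f kubesphere-installer.yaml.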

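No errors this time, and ks-installer is running. However, the other components have no arm64 images, so none of the other Deployments start, and ks-controller-manager reports errors.

Replace the images of all Deployments with arm64 builds, and schedule them on the k8s master node.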

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

kubectl get pod --all-namespaces

docker pull kubespheredev/ks-installer:v3.0.0-arm64

docker pull bobsense/redis-arm64    # for redis, remove the mounted PVC storage, otherwise it fails

docker pull kubespheredev/ks-controller-manager:v3.2.1

docker pull kubespheredev/ks-console:v3.0.0-arm64

docker pull kubespheredev/ks-apiserver:v3.2.0

Everything except ks-controller-manager is now running.

kubectl get svc/ks-console -n kubesphere-system

Output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ks-console NodePort 10.1.4.225 <none> 80:30880/TCP 6h12m

Check the log again:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

The end of the log shows the following, so I thought the installation had succeeded:

Console: http://192.168.xxx.xxx:30880

Account: admin

Password: P@88w0rd

kubectl logs ks-controller-manager-646b8fff9f-pd7w7 --namespace=kubesphere-system

ks-controller-manager reports the following error:

W0224 11:36:55.643227 1 client_config.go:615] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

E0224 11:36:55.649703 1 server.go:101] failed to connect to ldap service, please check ldap status, error: factory is not able to fill the pool: LDAP Result Code 200 "Network Error": dial tcp: lookup openldap.kubesphere-system.svc on 10.1.0.10:53: no such host

Login fails, possibly because ks-controller-manager is not running.

Log:

request to http://ks-apiserver.kubesphere-system.svc/oauth/token failed, reason: connect ECONNREFUSED 10.1.146.137:80

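This is most likely because openldap failed to start.

Check the log of the openldap StatefulSet.

It reports that there are 2 default StorageClasses. After deleting one of them, openldap started successfully.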

persistentvolumeclaims "openldap-pvc-openldap-0" is forbidden: Internal error occurred: 2 default StorageClasses were found

Deleting a StorageClass

List the StorageClasses:

kubectl get sc

Output (there were 2 entries before; only one is shown here because I had already deleted the other):

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-storage (default) k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 40h

Delete:

kubectl delete sc glusterfs
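
Deleting the class works, but it removes the glusterfs StorageClass entirely. If the class should stay available, an alternative is to clear only its default marker. The sketch below wraps the kubectl patch call described in the Kubernetes documentation on changing the default StorageClass. The function name is made up for this example, and it assumes the class was marked through the storageclass.kubernetes.io/is-default-class annotation (older setups may use the storageclass.beta.kubernetes.io variant instead).

```shell
#!/bin/sh
# unset_default_sc NAME (hypothetical helper): keep the StorageClass but
# mark it as non-default by setting the is-default-class annotation to "false".
unset_default_sc() {
    kubectl patch storageclass "$1" \
        -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'
}
```

Usage: unset_default_sc glusterfs, then run kubectl get sc again and confirm that only one class is marked (default).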

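After the deletion only one StorageClass is left. openldap now runs normally, and so does ks-controller-manager. There is hope.

All Pods are now running normally, but login still fails in the same way. Check the logs: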

ks-apiserver-556f698dfb-5p2fc

Log:

E0225 10:40:17.460271 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmApplication: failed to list *v1alpha1.HelmApplication: the server could not find the requested resource (get helmapplications.application.kubesphere.io)

E0225 10:40:17.548278 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmRepo: failed to list *v1alpha1.HelmRepo: the server could not find the requested resource (get helmrepos.application.kubesphere.io)

E0225 10:40:17.867914 1 reflector.go:138] pkg/models/openpitrix/interface.go:89: Failed to watch *v1alpha1.HelmCategory: failed to list *v1alpha1.HelmCategory: the server could not find the requested resource (get helmcategories.application.kubesphere.io)

E0225 10:40:18.779136 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmRelease: failed to list *v1alpha1.HelmRelease: the server could not find the requested resource (get helmreleases.application.kubesphere.io)

E0225 10:40:19.870229 1 reflector.go:138] pkg/models/openpitrix/interface.go:90: Failed to watch *v1alpha1.HelmRepo: failed to list *v1alpha1.HelmRepo: the server could not find the requested resource (get helmrepos.application.kubesphere.io)

E0225 10:40:20.747617 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmCategory: failed to list *v1alpha1.HelmCategory: the server could not find the requested resource (get helmcategories.application.kubesphere.io)

E0225 10:40:23.130177 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha1.HelmApplicationVersion: failed to list *v1alpha1.HelmApplicationVersion: the server could not find the requested resource (get helmapplicationversions.application.kubesphere.io)

ks-console-65f46d7649-5zt8c

Log:

<-- GET / 2022/02/25T10:41:28.642

{ UnauthorizedError: Not Login

at Object.throw (/opt/kubesphere/console/server/server.js:31701:11)

at getCurrentUser (/opt/kubesphere/console/server/server.js:9037:14)

at renderView (/opt/kubesphere/console/server/server.js:23231:46)

at dispatch (/opt/kubesphere/console/server/server.js:6870:32)

at next (/opt/kubesphere/console/server/server.js:6871:18)

at /opt/kubesphere/console/server/server.js:70183:16

at dispatch (/opt/kubesphere/console/server/server.js:6870:32)

at next (/opt/kubesphere/console/server/server.js:6871:18)

at /opt/kubesphere/console/server/server.js:77986:37

at dispatch (/opt/kubesphere/console/server/server.js:6870:32)

at next (/opt/kubesphere/console/server/server.js:6871:18)

at /opt/kubesphere/console/server/server.js:70183:16

at dispatch (/opt/kubesphere/console/server/server.js:6870:32)

at next (/opt/kubesphere/console/server/server.js:6871:18)

at /opt/kubesphere/console/server/server.js:77986:37

at dispatch (/opt/kubesphere/console/server/server.js:6870:32) message: 'Not Login' }

--> GET / 302 6ms 43b 2022/02/25T10:41:28.648

<-- GET /login 2022/02/25T10:41:28.649

{ FetchError: request to http://ks-apiserver.kubesphere-system.svc/kapis/config.kubesphere.io/v1alpha2/configs/oauth failed, reason: connect ECONNREFUSED 10.1.144.129:80

at ClientRequest.<anonymous> (/opt/kubesphere/console/server/server.js:80604:11)

at ClientRequest.emit (events.js:198:13)

at Socket.socketErrorListener (_http_client.js:392:9)

at Socket.emit (events.js:198:13)

at emitErrorNT (internal/streams/destroy.js:91:8)

at emitErrorAndCloseNT (internal/streams/destroy.js:59:3)

at process._tickCallback (internal/process/next_tick.js:63:19)

message:

'request to http://ks-apiserver.kubesphere-system.svc/kapis/config.kubesphere.io/v1alpha2/configs/oauth failed, reason: connect ECONNREFUSED 10.1.144.129:80',

type: 'system',

errno: 'ECONNREFUSED',

code: 'ECONNREFUSED' }

--> GET /login 200 7ms 14.82kb 2022/02/25T10:41:28.656

ks-controller-manager-548545f4b4-w4wmx

Log:

E0225 10:41:41.633013 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:41:41.634349 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:41.722377 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:41:42.636612 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:42.875652 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:41:42.964819 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:41:45.177641 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:45.327393 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:41:46.164454 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:41:49.011152 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:41:50.299769 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:41:50.851105 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:56.831265 1 helm_category_controller.go:158] get helm category: ctg-uncategorized failed, error: no matches for kind "HelmCategory" in version "application.kubesphere.io/v1alpha1"

E0225 10:41:56.923487 1 helm_category_controller.go:176] create helm category: uncategorized failed, error: no matches for kind "HelmCategory" in version "application.kubesphere.io/v1alpha1"

E0225 10:41:58.696406 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

E0225 10:41:59.876998 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.Group: failed to list *v1alpha2.Group: the server could not find the requested resource (get groups.iam.kubesphere.io)

E0225 10:42:01.266422 1 reflector.go:138] github.com/kubernetes-csi/external-snapshotter/client/v4/informers/externalversions/factory.go:117: Failed to watch *v1.VolumeSnapshotClass: failed to list *v1.VolumeSnapshotClass: the server could not find the requested resource (get volumesnapshotclasses.snapshot.storage.k8s.io)

E0225 10:42:11.724869 1 helm_category_controller.go:158] get helm category: ctg-uncategorized failed, error: no matches for kind "HelmCategory" in version "application.kubesphere.io/v1alpha1"

E0225 10:42:11.929837 1 helm_category_controller.go:176] create helm category: uncategorized failed, error: no matches for kind "HelmCategory" in version "application.kubesphere.io/v1alpha1"

E0225 10:42:12.355338 1 reflector.go:138] pkg/client/informers/externalversions/factory.go:128: Failed to watch *v1alpha2.GroupBinding: failed to list *v1alpha2.GroupBinding: the server could not find the requested resource (get groupbindings.iam.kubesphere.io)

I0225 10:42:15.625073 1 leaderelection.go:253] successfully acquired lease kubesphere-system/ks-controller-manager-leader-election

I0225 10:42:15.625301 1 globalrolebinding_controller.go:122] Starting GlobalRoleBinding controller

I0225 10:42:15.625343 1 globalrolebinding_controller.go:125] Waiting for informer caches to sync

I0225 10:42:15.625365 1 globalrolebinding_controller.go:137] Starting workers

I0225 10:42:15.625351 1 snapshotclass_controller.go:102] Waiting for informer cache to sync.

I0225 10:42:15.625391 1 globalrolebinding_controller.go:143] Started workers

I0225 10:42:15.625380 1 capability_controller.go:110] Waiting for informer caches to sync

I0225 10:42:15.625449 1 capability_controller.go:123] Started workers

I0225 10:42:15.625447 1 basecontroller.go:59] Starting controller: loginrecord-controller

I0225 10:42:15.625478 1 globalrolebinding_controller.go:205] Successfully synced key:authenticated

I0225 10:42:15.625481 1 basecontroller.go:60] Waiting for informer caches to sync for: loginrecord-controller

I0225 10:42:15.625488 1 clusterrolebinding_controller.go:114] Starting ClusterRoleBinding controller

I0225 10:42:15.625546 1 clusterrolebinding_controller.go:117] Waiting for informer caches to sync

I0225 10:42:15.625540 1 basecontroller.go:59] Starting controller: group-controller

I0225 10:42:15.625515 1 basecontroller.go:59] Starting controller: groupbinding-controller

I0225 10:42:15.625596 1 basecontroller.go:60] Waiting for informer caches to sync for: group-controller

I0225 10:42:15.625615 1 basecontroller.go:60] Waiting for informer caches to sync for: groupbinding-controller

I0225 10:42:15.625579 1 clusterrolebinding_controller.go:122] Starting workers

I0225 10:42:15.625480 1 certificatesigningrequest_controller.go:109] Starting CSR controller

I0225 10:42:15.625681 1 certificatesigningrequest_controller.go:112] Waiting for csrInformer caches to sync

It is very close to working. I will update this post once the remaining issue is solved.

References:

https://kubesphere.io/zh/docs/quick-start/minimal-kubesphere-on-k8s/

https://www.yuque.com/leifengyang/oncloud/gz1sls#BIxCW
