| Time | Version | Author | Description |
|---|---|---|---|
| 2024-07-04 09:48:09 | V0.1 | 宋全恒 | Document created |

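Introduction

I recently needed to integrate new features into vLLM, so I had to be able to debug my own repository, LLMs_Inference. This document records the complete process of building it from source.

Reference: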

- Build from source

Normally, running the following commands is enough to build and install from source:

git clone https://github.com/vllm-project/vllm.git

cd vllm

# export VLLM_INSTALL_PUNICA_KERNELS=1 # optionally build for multi-LoRA capability

pip install -e . # This may take 5-10 minutes.
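If you prefer to drive the build from a script, the commands above can be sketched in Python. This is a minimal sketch under stated assumptions.

- `editable_install_command` is a hypothetical helper.
- `VLLM_INSTALL_PUNICA_KERNELS` comes from the commented-out line above.
- `MAX_JOBS` is the environment variable that vLLM's build-from-source documentation describes for capping parallel compile jobs. Check that your checkout's setup.py reads it.

The helper only builds the command. It does not run anything.

```python
import os
import sys
from typing import Dict, List, Optional, Tuple


def editable_install_command(
    repo_dir: str,
    punica_kernels: bool = False,
    max_jobs: Optional[int] = None,
) -> Tuple[List[str], Dict[str, str]]:
    """Build (argv, env) for an editable pip install of repo_dir without running it."""
    env = dict(os.environ)
    if punica_kernels:
        # Same switch as the commented-out export above (multi-LoRA kernels).
        env["VLLM_INSTALL_PUNICA_KERNELS"] = "1"
    if max_jobs is not None:
        # Assumption: the checkout's setup.py reads MAX_JOBS to cap parallel compile jobs.
        env["MAX_JOBS"] = str(max_jobs)
    # Use the current interpreter's pip so the package lands in the active environment.
    argv = [sys.executable, "-m", "pip", "install", "-e", repo_dir]
    return argv, env
```

To actually build, run `subprocess.run(argv, env=env, check=True)` from the repository root. The CMake command in the error log further down passes `compile=256`, which appears to be the parallel job count. Lowering `MAX_JOBS` may help on hosts where that many compile jobs exhaust memory.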

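In practice, though, it was considerably more troublesome. Since LLMs_Inference is a fork of the vllm repository, its build should in theory be the same.

Repository overview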

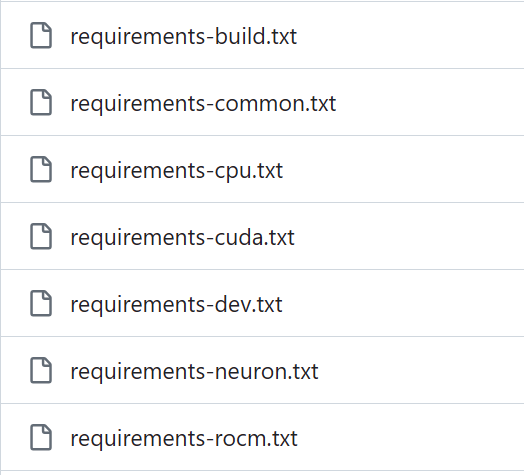

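The repository contains several requirements files for different environments.

Files like these record a project's dependencies so that it can be installed and configured in a specific environment.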

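- requirements.txt: lists all the dependencies the project needs, with their version constraints, so everything can be installed in one go.

- requirements-cpu.txt, requirements-cuda.txt, requirements-rocm.txt, requirements-neuron.txt: dependency lists for specific hardware or compute environments. For example, requirements-cuda.txt covers dependencies related to CUDA (Compute Unified Device Architecture, NVIDIA's parallel computing platform and programming model), requirements-rocm.txt covers ROCm (Radeon Open Compute platform, AMD's open-source compute platform), and requirements-neuron.txt targets AWS Neuron (Inferentia/Trainium accelerators).

- requirements-dev.txt: extra dependencies for the development environment. They are not needed at runtime but are needed for development, testing, and building.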

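Whether a source build of vLLM needs all of these files depends on your needs and use case.

If you plan to run or develop vLLM on specific hardware (CUDA, ROCm, and so on), install the dependency file for that environment.

For example, to install vLLM you would usually first create and activate a conda environment. Then check the PyTorch version and other dependencies pinned in requirements.txt, and install accordingly.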
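Checking which PyTorch version a requirements file pins can be automated. This is a minimal sketch, assuming exact pins are written as `name == version`.

`find_pinned_version` is a hypothetical helper. It ignores comments, option lines such as `-r` or `--index-url`, and range specifiers such as `>=`.

```python
import re
from typing import Optional

# Matches "name == version" at the start of a line; the version stops at whitespace, ';' or '#'.
_PIN_RE = re.compile(r"^\s*([A-Za-z0-9_.\-]+)\s*==\s*([^\s;#]+)")


def _normalize(name: str) -> str:
    # PEP 503 normalization: runs of '-', '_' and '.' are equivalent, case-insensitive.
    return re.sub(r"[-_.]+", "-", name).lower()


def find_pinned_version(requirements_text: str, package: str) -> Optional[str]:
    """Return the exact '==' pin for package, or None if it is not pinned exactly."""
    wanted = _normalize(package)
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or line.startswith("-"):  # skip blanks and -r / --index-url style options
            continue
        match = _PIN_RE.match(line)
        if match and _normalize(match.group(1)) == wanted:
            return match.group(2)
    return None
```

For example, `find_pinned_version(Path("requirements-cuda.txt").read_text(), "torch")` returns the torch pin if that file contains one. The `Path` here is from `pathlib`.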

Preparing the vLLM development environment

Installing directly on the host

Resolving the torch dependency problem

(llms_inference) yuzailiang@ubuntu:/mnt/self-define/sunning/lmdeploy/LLMs_Inference$ python -c "import torch; print('device count:',torch.cuda.device_count(), 'available: ', torch.cuda.is_available())"

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/home/yuzailiang/anaconda3/envs/llms_inference/lib/python3.9/site-packages/torch/__init__.py", line 237, in <module>

from torch._C import * # noqa: F403

ImportError: /home/yuzailiang/anaconda3/envs/llms_inference/lib/python3.9/site-packages/torch/lib/../../nvidia/cusparse/lib/libcusparse.so.12: undefined symbol: __nvJitLinkAddData_12_1, version libnvJitLink.so.12

The output above shows that with a direct install, torch==2.3.0 was installed but failed to import, so the GPU could not be used.
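One plausible reading of this error is an assumption, not something diagnosed in the original. The `_12_1` suffix on the missing symbol suggests that the CUDA 12.1 libcusparse shipped with torch is being linked against an older `libnvJitLink.so.12`. That older copy could come, for instance, from a system CUDA 12.0 directory that appears on `LD_LIBRARY_PATH` ahead of the nvidia-nvjitlink-cu12 wheel.

The sketch below lists every `libnvJitLink.so.12*` found on `LD_LIBRARY_PATH`, in search order. `find_nvjitlink_on_ld_path` is a hypothetical helper.

```python
import glob
import os
from typing import List, Optional


def find_nvjitlink_on_ld_path(ld_library_path: Optional[str] = None) -> List[str]:
    """List libnvJitLink.so.12* files in LD_LIBRARY_PATH entries, in search order."""
    if ld_library_path is None:
        ld_library_path = os.environ.get("LD_LIBRARY_PATH", "")
    hits: List[str] = []
    for directory in ld_library_path.split(os.pathsep):
        if not directory:
            continue  # empty entries (e.g. a trailing ':') are skipped here
        hits.extend(sorted(glob.glob(os.path.join(directory, "libnvJitLink.so.12*"))))
    return hits


if __name__ == "__main__":
    for path in find_nvjitlink_on_ld_path():
        print(path)
```

If a system CUDA directory shows up first, temporarily removing it from `LD_LIBRARY_PATH` and re-running the import is a cheap way to test this hypothesis.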

Fix:

Install the torch dependency separately:

pip install torch==2.3.0 torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118
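The index URL encodes the CUDA version as `cu` followed by the major and minor digits. This document uses `cu118` for CUDA 11.8 and `cu121` for 12.1.

Below is a small sketch of that mapping. The helper names are hypothetical. PyTorch only publishes wheels for some CUDA versions per release, so a well-formed URL may still have no matching wheel.

```python
from typing import List


def pytorch_index_url(cuda_version: str) -> str:
    """Map a CUDA version like '11.8' (or '12.1.105') to PyTorch's wheel index URL."""
    major, minor = cuda_version.split(".")[:2]
    # int() drops leading zeros and rejects non-numeric parts early
    return f"https://download.pytorch.org/whl/cu{int(major)}{int(minor)}"


def torch_install_argv(torch_version: str, cuda_version: str) -> List[str]:
    """argv equivalent to the pip command above, for a given torch/CUDA pair."""
    return [
        "pip", "install", f"torch=={torch_version}", "torchvision", "torchaudio",
        "--index-url", pytorch_index_url(cuda_version),
    ]
```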

After installation, verify that torch can drive CUDA and see the GPUs:

(llms_inference) yuzailiang@ubuntu:/mnt/self-define/sunning/lmdeploy/LLMs_Inference$ python -c "import torch; print('device count:',torch.cuda.device_count(), 'available: ', torch.cuda.is_available())"

device count: 8 available: True
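The one-liner can be extended to also print the CUDA version torch was built against (`torch.version.cuda`). That value is what distinguishes a cu118 wheel from a cu121 one.

This is a minimal sketch. `torch_cuda_report` is a hypothetical name. It catches `ImportError` (and `OSError` from broken shared libraries), so the undefined-symbol failure shown earlier is reported instead of crashing the script.

```python
from typing import Dict


def torch_cuda_report() -> Dict[str, object]:
    """Collect the facts needed to spot a torch/CUDA mismatch without raising on import errors."""
    try:
        import torch
    except (ImportError, OSError) as exc:  # the undefined-symbol failure is an ImportError
        return {"importable": False, "error": str(exc)}
    return {
        "importable": True,
        "torch": torch.__version__,
        # CUDA version torch was built against, e.g. '11.8' for a cu118 wheel; None on CPU builds
        "built_with_cuda": torch.version.cuda,
        "available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
    }


if __name__ == "__main__":
    for key, value in torch_cuda_report().items():
        print(f"{key}: {value}")
```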

Then I ran pip install -e . again.

The problem was still there:

packages/torch/__init__.py", line 237, in <module>

from torch._C import * # noqa: F403

ImportError: /home/yuzailiang/anaconda3/envs/llms_inference/lib/python3.9/site-packages/torch/lib/../../nvidia/cusparse/lib/libcusparse.so.12: undefined symbol: __nvJitLinkAddData_12_1, version libnvJitLink.so.12

Trying cu121: "Couldn't find CUDA library root."

pip install torch==2.3.0 torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

Building wheels for collected packages: vllm

Building editable for vllm (pyproject.toml) ... error

error: subprocess-exited-with-error

× Building editable for vllm (pyproject.toml) did not run successfully.

│ exit code: 1

╰─> [139 lines of output]

running editable_wheel

creating /tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm.egg-info

writing /tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm.egg-info/PKG-INFO

writing dependency_links to /tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm.egg-info/dependency_links.txt

writing requirements to /tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm.egg-info/requires.txt

writing top-level names to /tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm.egg-info/top_level.txt

writing manifest file '/tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm.egg-info/SOURCES.txt'

reading manifest file '/tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm.egg-info/SOURCES.txt'

reading manifest template 'MANIFEST.in'

adding license file 'LICENSE'

writing manifest file '/tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm.egg-info/SOURCES.txt'

creating '/tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm-0.4.2+cu120.dist-info'

creating /tmp/pip-wheel-c7m73v0l/.tmp-t6j0dz53/vllm-0.4.2+cu120.dist-info/WHEEL

running build_py

running build_ext

-- The CXX compiler identification is GNU 9.4.0

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/c++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Build type: RelWithDebInfo

-- Target device: cuda

-- Found Python: /home/yuzailiang/anaconda3/envs/llms_inference/bin/python3.9 (found version "3.9.19") found components: Interpreter Development.Module

-- Found python matching: /home/yuzailiang/anaconda3/envs/llms_inference/bin/python3.9.

-- Found CUDA: /usr/local/cuda-12.0 (found version "12.0")

CMake Error at /tmp/pip-build-env-xxhrqd7n/overlay/lib/python3.9/site-packages/cmake/data/share/cmake-3.30/Modules/Internal/CMakeCUDAFindToolkit.cmake:148 (message):

Couldn't find CUDA library root.

Call Stack (most recent call first):

subprocess.CalledProcessError: Command '['cmake', '/mnt/self-define/sunning/lmdeploy/LLMs_Inference', '-G', 'Ninja', '-DCMAKE_BUILD_TYPE=RelWithDebInfo', '-DCMAKE_LIBRARY_OUTPUT_DIRECTORY=/tmp/tmpwkyqp9r6.build-lib/vllm', '-DCMAKE_ARCHIVE_OUTPUT_DIRECTORY=/tmp/tmpuvdjn65m.build-temp', '-DVLLM_TARGET_DEVICE=cuda', '-DCMAKE_CXX_COMPILER_LAUNCHER=ccache', '-DCMAKE_CUDA_COMPILER_LAUNCHER=ccache', '-DVLLM_PYTHON_EXECUTABLE=/home/yuzailiang/anaconda3/envs/llms_inference/bin/python3.9', '-DNVCC_THREADS=1', '-DCMAKE_JOB_POOL_COMPILE:STRING=compile', '-DCMAKE_JOB_POOLS:STRING=compile=256']' returned non-zero exit status 1.

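This environment is quite convoluted. The build reports cu120 (note the vllm-0.4.2+cu120 dist-info and "Found CUDA: /usr/local/cuda-12.0"), i.e. it is building against CUDA 12.0.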

Building wheels for collected packages: vllm

Building editable for vllm (pyproject.toml) ... error

subprocess.CalledProcessError: Command '['cmake', '/mnt/self-define/sunning/lmdeploy/LLMs_Inference', '-G', 'Ninja', '-DCMAKE_BUILD_TYPE=RelWithDebInfo', '-DCMAKE_LIBRARY_OUTPUT_DIRECTORY=/tmp/tmplnbtei_9.build-lib/vllm', '-DCMAKE_ARCHIVE_OUTPUT_DIRECTORY=/tmp/tmpdu5xwhxm.build-temp', '-DVLLM_TARGET_DEVICE=cuda', '-DCMAKE_CXX_COMPILER_LAUNCHER=ccache', '-DCMAKE_CUDA_COMPILER_LAUNCHER=ccache', '-DVLLM_PYTHON_EXECUTABLE=/home/yuzailiang/anaconda3/envs/llms_inference/bin/python3.9', '-DNVCC_THREADS=1', '-DCMAKE_JOB_POOL_COMPILE:STRING=compile', '-DCMAKE_JOB_POOLS:STRING=compile=256']' returned non-zero exit status 1.

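Yet nvidia-smi reports CUDA Version 12.4, which looked strange at first. That field is the highest CUDA version the installed driver supports, not the version of a CUDA toolkit on disk, so it does not have to match what CMake finds.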

(base) yuzailiang@ubuntu:/mnt/self-define/sunning/lmdeploy/LLMs_Inference$ nvidia-smi

Tue Jul 9 09:02:46 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.54.14 Driver Version: 550.54.14 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+---------------------
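To see the two numbers side by side, the sketch below parses the `CUDA Version` field from nvidia-smi output and the `release X.Y` field from `nvcc --version`. The function names are hypothetical.

The comparison is deliberately conservative: it ignores CUDA's minor-version compatibility. A result of `False` therefore means "check further", not "will fail".

```python
import re
from typing import Optional, Tuple


def driver_cuda_version(nvidia_smi_output: str) -> Optional[str]:
    """Highest CUDA version the driver supports, from the nvidia-smi header."""
    match = re.search(r"CUDA Version:\s*([0-9]+\.[0-9]+)", nvidia_smi_output)
    return match.group(1) if match else None


def toolkit_cuda_version(nvcc_output: str) -> Optional[str]:
    """Toolkit version from `nvcc --version` ('Cuda compilation tools, release X.Y, ...')."""
    match = re.search(r"release\s+([0-9]+\.[0-9]+)", nvcc_output)
    return match.group(1) if match else None


def _as_tuple(version: str) -> Tuple[int, ...]:
    return tuple(int(part) for part in version.split("."))


def toolkit_not_newer_than_driver(smi_output: str, nvcc_output: str) -> bool:
    """Conservative check (ignores minor-version compatibility): toolkit <= driver CUDA."""
    driver, toolkit = driver_cuda_version(smi_output), toolkit_cuda_version(nvcc_output)
    if driver is None or toolkit is None:
        raise ValueError("could not parse one of the version strings")
    return _as_tuple(toolkit) <= _as_tuple(driver)
```

Pass in the `stdout` of `subprocess.run(["nvidia-smi"], capture_output=True, text=True)` and the same for `["nvcc", "--version"]`. Keep in mind that the `nvcc` found on `PATH` is not necessarily the toolkit CMake picks up.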

And /usr/local contains no CUDA 12.4 toolkit, only 12.0 and 12.1:

(base) yuzailiang@ubuntu:/usr/local$ ll | grep cuda

lrwxrwxrwx 1 root root 22 Jul 8 05:22 cuda -> /etc/alternatives/cuda/

lrwxrwxrwx 1 root root 25 Jul 8 05:22 cuda-12 -> /etc/alternatives/cuda-12/

drwxr-xr-x 17 root root 4096 Jun 25 08:42 cuda-12.0/

drwxr-xr-x 15 root root 4096 Jul 8 05:22 cuda-12.1/
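Several versions are in play here. The failing build found /usr/local/cuda-12.0, the cu121 torch wheel targets CUDA 12.1, and the generic `cuda` link goes through /etc/alternatives.

The sketch below lists the versioned toolkit directories and resolves where that link actually points. `list_cuda_toolkits` is a hypothetical helper that only reads the filesystem and the `CUDA_HOME` variable.

Pointing `CUDA_HOME` and `PATH` at the toolkit that matches the installed torch wheel (cuda-12.1 for cu121) might be worth trying. This was not verified in this environment; the author switched to a container instead.

```python
import os
from pathlib import Path
from typing import Dict, List


def list_cuda_toolkits(prefix: str = "/usr/local") -> Dict[str, object]:
    """Show real cuda-X.Y directories and where the generic `cuda` link resolves to."""
    root = Path(prefix)
    # Exclude symlinks such as cuda-12 -> /etc/alternatives/cuda-12 to keep only real toolkits.
    versioned: List[str] = sorted(
        p.name for p in root.glob("cuda-*") if p.is_dir() and not p.is_symlink()
    )
    link = root / "cuda"
    # resolve() follows the whole chain, e.g. cuda -> /etc/alternatives/cuda -> cuda-12.x
    target = str(link.resolve()) if link.exists() else None
    return {
        "versioned": versioned,
        "cuda_link_target": target,
        "CUDA_HOME": os.environ.get("CUDA_HOME"),
    }
```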

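Abandoning the host environment in favor of a container

In the end, after spending several days on it, I switched to setting up the development environment from a Docker image.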

docker run -d --name smoothquant --gpus all -v /mnt/self-define:/mnt/self-define -p 8022:22 -it llm_inference:v1.0

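The basic idea is to start a container with the necessary directories mounted, run an sshd service inside it, and let VS Code treat the container as an independent Linux machine, which makes breakpoint debugging possible.

For the detailed procedure, see my note "Adding an sshd service to a container started from an image".

The basic steps are:

1. Start the container with all GPUs, the required directory mounts, and port mappings (here host port 8022 maps to the container's port 22).

2. Install the sshd service in the container (the steps differ between Ubuntu and CentOS).

3. Configure sshd and change the root password.

4. Start the sshd service.

5. Connect VS Code directly to the container (as a remote Linux server).

6. Set up the debugging environment and breakpoints. See [05-16 (Thu) Setting up a remote debugging environment with VS Code (python vscode server remote debugging), CSDN blog](https://blog.csdn.net/lk142500/article/details/138969211?spm=1001.2014.3001.5502)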
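Step 3 usually means allowing root to log in with a password. This is a minimal sketch of that edit applied to the text of /etc/ssh/sshd_config. It assumes a disposable development container, because it weakens SSH security and should not be used on shared hosts.

`enable_root_password_login` is a hypothetical helper. It rewrites the first `PermitRootLogin` and `PasswordAuthentication` line (commented or not), because sshd uses the first value it reads for each keyword. Missing keywords are appended at the end, which assumes the file has no trailing `Match` block. Files pulled in via `Include` may still override these settings.

```python
import re

_KEY_RE = re.compile(r"^\s*#?\s*(PermitRootLogin|PasswordAuthentication)\b")


def enable_root_password_login(sshd_config: str) -> str:
    """Return sshd_config text with root password login enabled (throwaway containers only)."""
    wanted = {"PermitRootLogin": "yes", "PasswordAuthentication": "yes"}
    seen = set()
    out = []
    for line in sshd_config.splitlines():
        match = _KEY_RE.match(line)
        if match and match.group(1) not in seen:
            key = match.group(1)
            out.append(f"{key} {wanted[key]}")  # replace the first occurrence, even if commented
            seen.add(key)
        else:
            out.append(line)
    # Append directives that were not present at all.
    for key, value in wanted.items():
        if key not in seen:
            out.append(f"{key} {value}")
    return "\n".join(out) + "\n"
```

After writing the result back, set the root password (for example with `passwd`) and restart sshd, as in steps 3 and 4.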

Editing CMakeLists.txt

Change the following block at line 178 of LLMs_Inference/CMakeLists.txt:

FetchContent_Declare(

cutlass

GIT_REPOSITORY https://github.com/nvidia/cutlass.git

# CUTLASS 3.5.0

GIT_TAG 7d49e6c7e2f8896c47f586706e67e1fb215529dc

)

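to the following, so that CMake uses a local CUTLASS checkout at /cutlass instead of cloning it from GitHub during the build: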

FetchContent_Declare(

cutlass

SOURCE_DIR /cutlass

#GIT_REPOSITORY https://github.com/nvidia/cutlass.git

# CUTLASS 3.5.0

#GIT_TAG 7d49e6c7e2f8896c47f586706e67e1fb215529dc

)
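The same edit can be scripted. This sketch assumes the cutlass block looks exactly as shown above. `use_local_cutlass` is a hypothetical helper: it comments out the `GIT_REPOSITORY` and `GIT_TAG` lines and inserts `SOURCE_DIR`, and it does nothing if `SOURCE_DIR` is already present.

The local /cutlass checkout should be at the commit the original `GIT_TAG` pins (7d49e6c7e2f8896c47f586706e67e1fb215529dc, CUTLASS 3.5.0) so the build matches upstream.

CMake's FetchContent also honors a `FETCHCONTENT_SOURCE_DIR_CUTLASS` cache variable, which redirects the dependency without editing the file. How to forward that option through `pip install -e .` is not covered here.

```python
import re

_CUTLASS_RE = re.compile(r"FetchContent_Declare\(\s*cutlass\b(.*?)\)", re.DOTALL)


def use_local_cutlass(cmake_text: str, source_dir: str = "/cutlass") -> str:
    """Point the cutlass FetchContent_Declare at a local directory instead of GitHub."""

    def rewrite(match) -> str:
        body = match.group(1)
        if "SOURCE_DIR" in body:
            return match.group(0)  # already patched: leave unchanged
        # Prefix the git options with '#' so CMake treats them as comments.
        body = re.sub(r"^(\s*)(GIT_REPOSITORY|GIT_TAG)\b", r"\1#\2", body, flags=re.MULTILINE)
        return f"FetchContent_Declare(\n  cutlass\n  SOURCE_DIR {source_dir}{body})"

    return _CUTLASS_RE.sub(rewrite, cmake_text, count=1)
```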

Running the source install

pip install -e .

root@145206f3e691:/mnt/self-define/sunning/lmdeploy/LLMs_Inference# pip install -e .

Obtaining file:///mnt/self-define/sunning/lmdeploy/LLMs_Inference

Installing build dependencies ... done

Checking if build backend supports build_editable ... done

Getting requirements to build wheel ... done

Preparing metadata (pyproject.toml) ... done

Requirement already satisfied: psutil in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (5.9.8)

Requirement already satisfied: prometheus-fastapi-instrumentator>=7.0.0 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (7.0.0)

Requirement already satisfied: aiohttp in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (3.9.3)

Requirement already satisfied: outlines==0.0.34 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (0.0.34)

Requirement already satisfied: lm-format-enforcer==0.10.1 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (0.10.1)

Requirement already satisfied: tokenizers>=0.19.1 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (0.19.1)

Requirement already satisfied: ninja in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (1.11.1.1)

Requirement already satisfied: pydantic>=2.0 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (2.6.4)

Requirement already satisfied: prometheus-client>=0.18.0 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (0.20.0)

Requirement already satisfied: cmake>=3.21 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (3.28.4)

Requirement already satisfied: tiktoken>=0.6.0 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (0.7.0)

Requirement already satisfied: numpy in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (1.26.4)

Requirement already satisfied: openai in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (1.31.0)

Requirement already satisfied: vllm-flash-attn==2.5.8.post2 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (2.5.8.post2)

Requirement already satisfied: fastapi in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (0.110.0)

Requirement already satisfied: uvicorn[standard] in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (0.29.0)

Requirement already satisfied: torch==2.3.0 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (2.3.0)

Requirement already satisfied: ray>=2.9 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (2.10.0)

Requirement already satisfied: transformers>=4.40.0 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (4.42.3)

Requirement already satisfied: py-cpuinfo in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (9.0.0)

Requirement already satisfied: xformers==0.0.26.post1 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (0.0.26.post1)

Requirement already satisfied: sentencepiece in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (0.2.0)

Requirement already satisfied: vllm-nccl-cu12<2.19,>=2.18 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (2.18.1.0.4.0)

Requirement already satisfied: typing-extensions in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (4.10.0)

Requirement already satisfied: nvidia-ml-py in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (12.555.43)

Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (2.31.0)

Requirement already satisfied: filelock>=3.10.4 in /usr/local/lib/python3.10/dist-packages (from vllm==0.4.2+cu124) (3.13.1)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from lm-format-enforcer==0.10.1->vllm==0.4.2+cu124) (6.0.1)

Requirement already satisfied: interegular>=0.3.2 in /usr/local/lib/python3.10/dist-packages (from lm-format-enforcer==0.10.1->vllm==0.4.2+cu124) (0.3.3)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from lm-format-enforcer==0.10.1->vllm==0.4.2+cu124) (24.0)

Requirement already satisfied: nest-asyncio in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (1.6.0)

Requirement already satisfied: numba in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (0.59.1)

Requirement already satisfied: cloudpickle in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (3.0.0)

Requirement already satisfied: joblib in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (1.3.2)

Requirement already satisfied: scipy in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (1.12.0)

Requirement already satisfied: diskcache in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (5.6.3)

Requirement already satisfied: referencing in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (0.34.0)

Requirement already satisfied: jinja2 in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (3.1.3)

Requirement already satisfied: lark in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (1.1.9)

Requirement already satisfied: jsonschema in /usr/local/lib/python3.10/dist-packages (from outlines==0.0.34->vllm==0.4.2+cu124) (4.21.1)

Requirement already satisfied: nvidia-curand-cu12==10.3.2.106 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (10.3.2.106)

Requirement already satisfied: nvidia-cufft-cu12==11.0.2.54 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (11.0.2.54)

Requirement already satisfied: nvidia-cudnn-cu12==8.9.2.26 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (8.9.2.26)

Requirement already satisfied: nvidia-cuda-runtime-cu12==12.1.105 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (12.1.105)

Requirement already satisfied: triton==2.3.0 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (2.3.0)

Requirement already satisfied: sympy in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (1.12)

Requirement already satisfied: nvidia-cuda-nvrtc-cu12==12.1.105 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (12.1.105)

Requirement already satisfied: fsspec in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (2024.2.0)

Requirement already satisfied: nvidia-cuda-cupti-cu12==12.1.105 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (12.1.105)

Requirement already satisfied: nvidia-cusolver-cu12==11.4.5.107 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (11.4.5.107)

Requirement already satisfied: networkx in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (3.2.1)

Requirement already satisfied: nvidia-nvtx-cu12==12.1.105 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (12.1.105)

Requirement already satisfied: nvidia-cublas-cu12==12.1.3.1 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (12.1.3.1)

Requirement already satisfied: nvidia-nccl-cu12==2.20.5 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (2.20.5)

Requirement already satisfied: nvidia-cusparse-cu12==12.1.0.106 in /usr/local/lib/python3.10/dist-packages (from torch==2.3.0->vllm==0.4.2+cu124) (12.1.0.106)

Requirement already satisfied: nvidia-nvjitlink-cu12 in /usr/local/lib/python3.10/dist-packages (from nvidia-cusolver-cu12==11.4.5.107->torch==2.3.0->vllm==0.4.2+cu124) (12.4.99)

Requirement already satisfied: starlette<1.0.0,>=0.30.0 in /usr/local/lib/python3.10/dist-packages (from prometheus-fastapi-instrumentator>=7.0.0->vllm==0.4.2+cu124) (0.36.3)

Requirement already satisfied: annotated-types>=0.4.0 in /usr/local/lib/python3.10/dist-packages (from pydantic>=2.0->vllm==0.4.2+cu124) (0.6.0)

Requirement already satisfied: pydantic-core==2.16.3 in /usr/local/lib/python3.10/dist-packages (from pydantic>=2.0->vllm==0.4.2+cu124) (2.16.3)

Requirement already satisfied: msgpack<2.0.0,>=1.0.0 in /usr/local/lib/python3.10/dist-packages (from ray>=2.9->vllm==0.4.2+cu124) (1.0.8)

Requirement already satisfied: aiosignal in /usr/local/lib/python3.10/dist-packages (from ray>=2.9->vllm==0.4.2+cu124) (1.3.1)

Requirement already satisfied: frozenlist in /usr/local/lib/python3.10/dist-packages (from ray>=2.9->vllm==0.4.2+cu124) (1.4.1)

Requirement already satisfied: protobuf!=3.19.5,>=3.15.3 in /usr/local/lib/python3.10/dist-packages (from ray>=2.9->vllm==0.4.2+cu124) (5.26.1)

Requirement already satisfied: click>=7.0 in /usr/local/lib/python3.10/dist-packages (from ray>=2.9->vllm==0.4.2+cu124) (8.1.7)

Requirement already satisfied: regex>=2022.1.18 in /usr/local/lib/python3.10/dist-packages (from tiktoken>=0.6.0->vllm==0.4.2+cu124) (2023.12.25)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->vllm==0.4.2+cu124) (2.2.1)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests->vllm==0.4.2+cu124) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->vllm==0.4.2+cu124) (3.6)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->vllm==0.4.2+cu124) (2024.2.2)

Requirement already satisfied: huggingface-hub<1.0,>=0.16.4 in /usr/local/lib/python3.10/dist-packages (from tokenizers>=0.19.1->vllm==0.4.2+cu124) (0.23.4)

Requirement already satisfied: safetensors>=0.4.1 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.40.0->vllm==0.4.2+cu124) (0.4.2)

Requirement already satisfied: tqdm>=4.27 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.40.0->vllm==0.4.2+cu124) (4.66.2)

Requirement already satisfied: yarl<2.0,>=1.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->vllm==0.4.2+cu124) (1.9.4)

Requirement already satisfied: async-timeout<5.0,>=4.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->vllm==0.4.2+cu124) (4.0.3)

Requirement already satisfied: attrs>=17.3.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->vllm==0.4.2+cu124) (23.2.0)

Requirement already satisfied: multidict<7.0,>=4.5 in /usr/local/lib/python3.10/dist-packages (from aiohttp->vllm==0.4.2+cu124) (6.0.5)

Requirement already satisfied: httpx<1,>=0.23.0 in /usr/local/lib/python3.10/dist-packages (from openai->vllm==0.4.2+cu124) (0.27.0)

Requirement already satisfied: anyio<5,>=3.5.0 in /usr/local/lib/python3.10/dist-packages (from openai->vllm==0.4.2+cu124) (4.3.0)

Requirement already satisfied: distro<2,>=1.7.0 in /usr/local/lib/python3.10/dist-packages (from openai->vllm==0.4.2+cu124) (1.9.0)

Requirement already satisfied: sniffio in /usr/local/lib/python3.10/dist-packages (from openai->vllm==0.4.2+cu124) (1.3.1)

Requirement already satisfied: h11>=0.8 in /usr/local/lib/python3.10/dist-packages (from uvicorn[standard]->vllm==0.4.2+cu124) (0.14.0)

Requirement already satisfied: websockets>=10.4 in /usr/local/lib/python3.10/dist-packages (from uvicorn[standard]->vllm==0.4.2+cu124) (12.0)

Requirement already satisfied: python-dotenv>=0.13 in /usr/local/lib/python3.10/dist-packages (from uvicorn[standard]->vllm==0.4.2+cu124) (1.0.1)

Requirement already satisfied: httptools>=0.5.0 in /usr/local/lib/python3.10/dist-packages (from uvicorn[standard]->vllm==0.4.2+cu124) (0.6.1)

Requirement already satisfied: watchfiles>=0.13 in /usr/local/lib/python3.10/dist-packages (from uvicorn[standard]->vllm==0.4.2+cu124) (0.21.0)

Requirement already satisfied: uvloop!=0.15.0,!=0.15.1,>=0.14.0 in /usr/local/lib/python3.10/dist-packages (from uvicorn[standard]->vllm==0.4.2+cu124) (0.19.0)

Requirement already satisfied: exceptiongroup>=1.0.2 in /usr/local/lib/python3.10/dist-packages (from anyio<5,>=3.5.0->openai->vllm==0.4.2+cu124) (1.2.0)

Requirement already satisfied: httpcore==1.* in /usr/local/lib/python3.10/dist-packages (from httpx<1,>=0.23.0->openai->vllm==0.4.2+cu124) (1.0.5)

Requirement already satisfied: MarkupSafe>=2.0 in /usr/local/lib/python3.10/dist-packages (from jinja2->outlines==0.0.34->vllm==0.4.2+cu124) (2.1.5)

Requirement already satisfied: jsonschema-specifications>=2023.03.6 in /usr/local/lib/python3.10/dist-packages (from jsonschema->outlines==0.0.34->vllm==0.4.2+cu124) (2023.12.1)

Requirement already satisfied: rpds-py>=0.7.1 in /usr/local/lib/python3.10/dist-packages (from jsonschema->outlines==0.0.34->vllm==0.4.2+cu124) (0.18.0)

Requirement already satisfied: llvmlite<0.43,>=0.42.0dev0 in /usr/local/lib/python3.10/dist-packages (from numba->outlines==0.0.34->vllm==0.4.2+cu124) (0.42.0)

Requirement already satisfied: mpmath>=0.19 in /usr/local/lib/python3.10/dist-packages (from sympy->torch==2.3.0->vllm==0.4.2+cu124) (1.3.0)

Installing collected packages: vllm

Attempting uninstall: vllm

Found existing installation: vllm 0.4.2+cu124

Uninstalling vllm-0.4.2+cu124:

Successfully uninstalled vllm-0.4.2+cu124

Running setup.py develop for vllm

Successfully installed vllm-0.4.2+cu124

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv
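To confirm that the editable install really imports vllm from the source checkout, check where Python would load the module from. If it does not, breakpoints set in the repository will not be hit.

This is a minimal sketch with hypothetical helper names. `importlib.util.find_spec` locates the top-level package without executing it.

```python
import importlib.util
from pathlib import Path
from typing import Optional


def module_origin(name: str) -> Optional[Path]:
    """File a top-level module would be imported from, or None if not found on disk."""
    spec = importlib.util.find_spec(name)
    if spec is None or not spec.has_location or spec.origin is None:
        return None
    return Path(spec.origin).resolve()


def comes_from_checkout(origin: Optional[Path], repo_root: str) -> bool:
    """True if origin lies inside the source checkout, i.e. the editable install is in effect."""
    if origin is None:
        return False
    root = Path(repo_root).resolve()
    return origin == root or root in origin.parents
```

For example, inside the container `comes_from_checkout(module_origin("vllm"), "/mnt/self-define/sunning/lmdeploy/LLMs_Inference")` should return `True`.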

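Connecting VS Code to the container and setting up the Python debugging environment

This amounts to connecting VS Code directly to the container environment. For the detailed steps, see

07-09 (Tue) Starting a container from an image, adding openssh, and breakpoint-debugging a Python project with VS Code

That note records in detail how to start the container, install and configure openssh-server, and start the sshd service.

05-16 (Thu) Setting up a remote debugging environment with VS Code (python vscode server remote debugging), CSDN blog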

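Summary

In deep learning development, a whole team usually shares one base environment, which is complex and prone to problems. Preparing a dedicated container that isolates your own working environment turns out to be a good approach and reduces the chance of problems.

Based on this exercise, continuing development on a repository that is normally installed with pip takes the following basic steps:

- Obtain the source code (git clone or otherwise)

- Install the dependencies

- Build from source

After building from source, the program can be debugged with breakpoints without trouble. While writing this article, I also wrote "07-09 (Tue) Starting a container from an image, adding openssh, and breakpoint-debugging a Python project with VS Code" to cover connecting VS Code to the container.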
