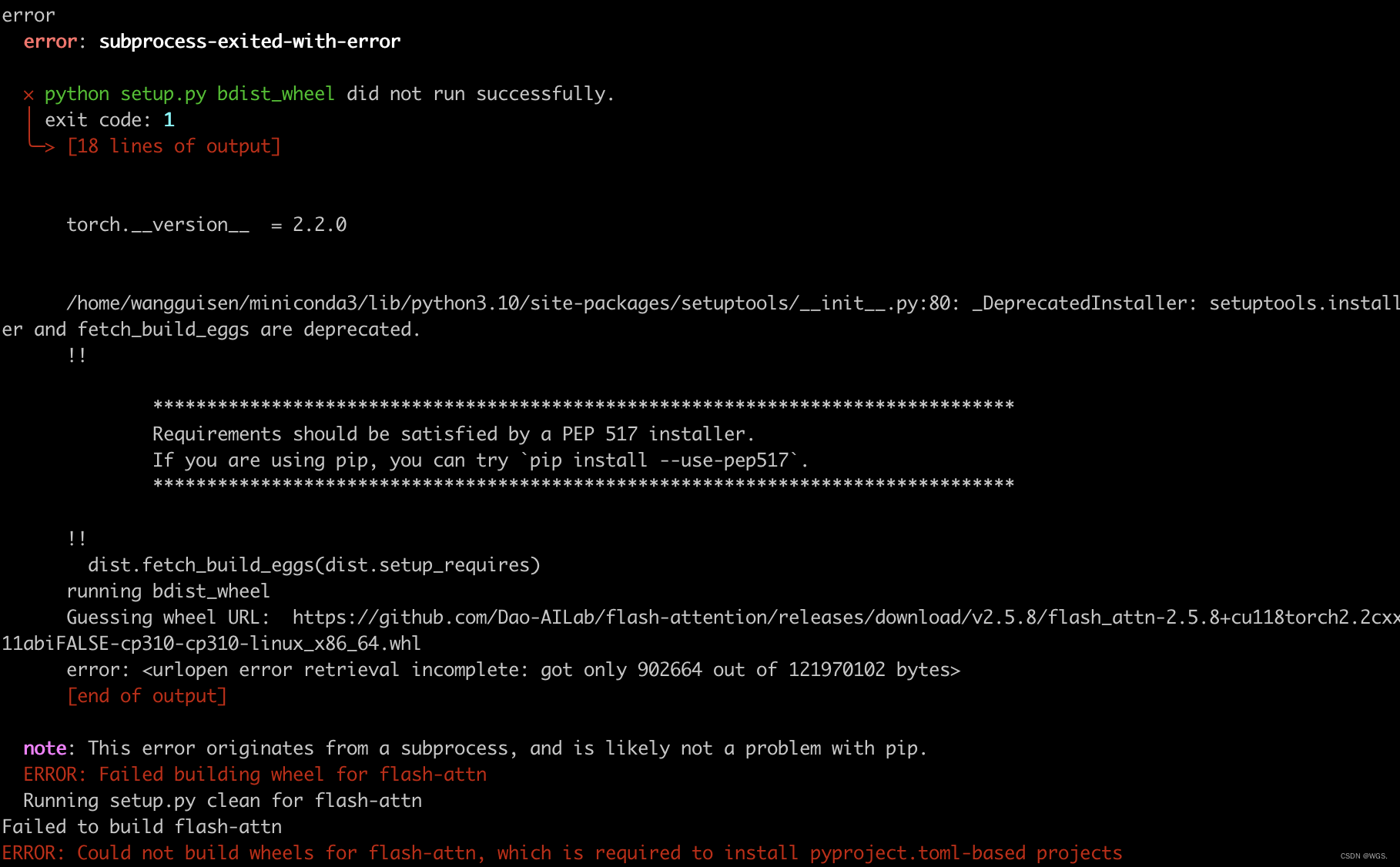

While installing FlashAttention, I ran into this error:

Failed to build flash-attn

ERROR: Could not build wheels for flash-attn, which is required to install pyproject.toml-based projects

The version pip tried to install probably conflicts with my environment, which is:

- python 3.10

- cuda 11.8

- torch 2.2

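Go to the releases page, find the prebuilt wheel that matches these versions (here `cu118`, `torch2.2`, and `cp310` in the filename), download it, and install it: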

pip install flash_attn-2.5.7+cu118torch2.2cxx11abiFALSE-cp310-cp310-linux_x86_64.whl --no-build-isolation
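To make picking the right file less error-prone, here is a minimal Python sketch that assembles the expected wheel filename from the environment. The helper `expected_wheel_name` is hypothetical, and the naming pattern is only inferred from the single filename above, so other releases may name their wheels differently. Treat the result as a hint for what to look for on the releases page.

```python
import sys


def expected_wheel_name(flash_version, cuda_version, torch_version,
                        cxx11_abi, py_tag=None):
    """Guess the wheel filename (hypothetical helper).

    The pattern is an assumption inferred from one filename:
    flash_attn-2.5.7+cu118torch2.2cxx11abiFALSE-cp310-cp310-linux_x86_64.whl
    """
    if py_tag is None:
        # e.g. Python 3.10 -> "cp310"
        py_tag = f"cp{sys.version_info.major}{sys.version_info.minor}"
    # "11.8" -> "cu118"
    cuda_tag = "cu" + "".join(cuda_version.split(".")[:2])
    # "2.2.1+cu118" -> "torch2.2" (drop the local suffix and patch number)
    base = torch_version.split("+")[0]
    torch_tag = "torch" + ".".join(base.split(".")[:2])
    abi_tag = "cxx11abi" + ("TRUE" if cxx11_abi else "FALSE")
    return (f"flash_attn-{flash_version}+{cuda_tag}{torch_tag}{abi_tag}"
            f"-{py_tag}-{py_tag}-linux_x86_64.whl")


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment")
    else:
        if torch.version.cuda is None:
            print("This PyTorch build has no CUDA support")
        else:
            print(expected_wheel_name(
                "2.5.7",                          # flash-attn version you want
                torch.version.cuda,               # CUDA version torch was built with
                torch.__version__,
                torch.compiled_with_cxx11_abi(),  # matches the cxx11abi tag
            ))
```

If the printed name does not appear on the releases page, compare each tag (CUDA, torch, ABI, Python) against the files that are listed there.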

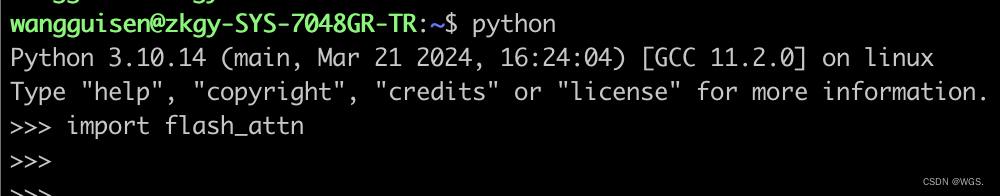

Installation succeeded:

...

Installing collected packages: flash-attn

Successfully installed flash-attn-2.5.7
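As an extra sanity check, the installed version can be read back from the package metadata. This is a small sketch using only the standard library. The helper name `installed_version` is hypothetical, and a successful metadata lookup does not prove that the compiled CUDA extension loads, so importing `flash_attn` on the target machine is still worth trying.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name):
    """Return the installed version of a distribution, or None if absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("flash-attn")
    if found is None:
        print("flash-attn is not installed")
    else:
        print(f"flash-attn {found} is installed")
```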

https://github.com/Dao-AILab/flash-attention

https://github.com/Dao-AILab/flash-attention/releases/

https://github.com/Dao-AILab/flash-attention/issues/224