Llama3-Tutorial: A Hands-On Guide to Evaluating Llama 3 (OpenCompass Edition)

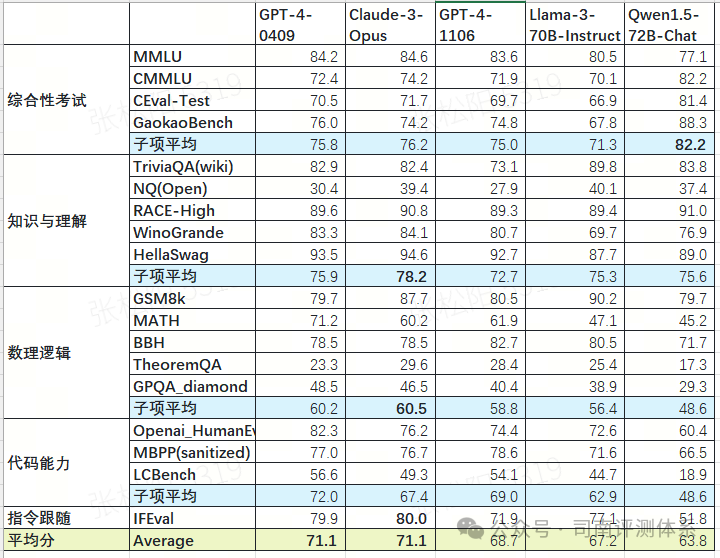

Llama 3 was recently released in 8B and 70B parameter versions, and the OpenCompass team has already evaluated it.

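This guide to evaluating Llama 3 with OpenCompass was contributed by members of the 书生·浦语 (InternLM) and 机智流 (SmartFlowAI) communities. Stars are welcome!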

https://github.com/open-compass/OpenCompass/

https://github.com/SmartFlowAI/Llama3-Tutorial

1. Hands-on evaluation with OpenCompass

This section walks you step by step through evaluating Llama 3 with OpenCompass.

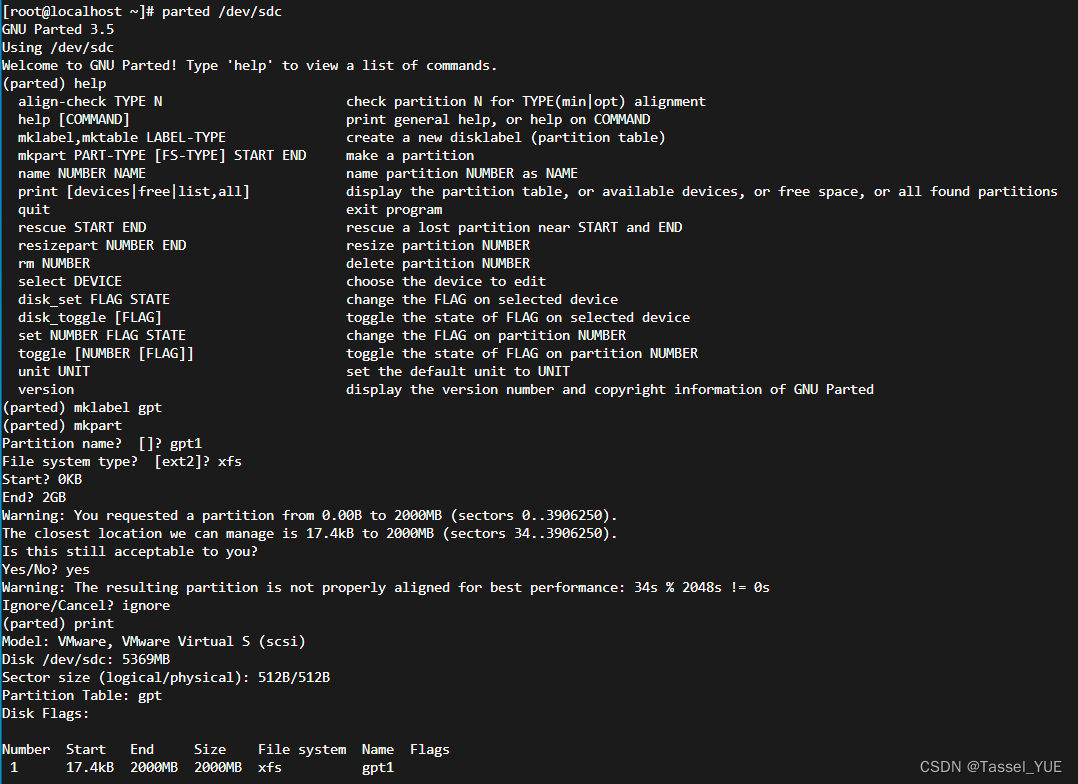

1.1 Environment setup

conda create -n llama3 python=3.10

conda activate llama3

conda install git

apt install git-lfs

1.2 Download the Llama 3 model

First, download the Llama-3-8B-Instruct model from OpenXLab.

mkdir -p ~/model

cd ~/model

git clone https://code.openxlab.org.cn/MrCat/Llama-3-8B-Instruct.git Meta-Llama-3-8B-Instruct

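Alternatively, instead of cloning, symlink the copy of the model that is already available on InternStudio into the ~/model directory created above:

ln -s /root/share/new_models/meta-llama/Meta-Llama-3-8B-Instruct ~/model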
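Before running an evaluation, it can help to confirm that the model directory (cloned or symlinked) really contains a Hugging Face-format checkpoint. The helper below is a hypothetical sketch, not part of OpenCompass. It assumes such a directory holds at least `config.json` and `tokenizer_config.json`; adjust `REQUIRED_FILES` if your copy differs. `Path.is_dir()` and `Path.is_file()` follow symlinks, so the InternStudio link is checked the same way as a clone.

```python
from pathlib import Path

# Assumption: a Hugging Face-format checkpoint directory contains at least
# these files; the list is illustrative, not an official requirement.
REQUIRED_FILES = ("config.json", "tokenizer_config.json")


def missing_model_files(model_dir: str) -> list[str]:
    """Return the required files that are absent from model_dir."""
    root = Path(model_dir).expanduser()
    if not root.is_dir():  # follows symlinks, so a linked directory counts
        return list(REQUIRED_FILES)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_model_files("~/model/Meta-Llama-3-8B-Instruct")
    print("model directory looks complete" if not missing else f"missing: {missing}")
```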

1.3 Install OpenCompass

cd ~

git clone https://github.com/open-compass/opencompass opencompass

cd opencompass

pip install -e .

1.4 Prepare the data

# Download the datasets (the archive unpacks into data/)

wget https://github.com/open-compass/opencompass/releases/download/0.2.2.rc1/OpenCompassData-core-20240207.zip

unzip OpenCompassData-core-20240207.zip

# The current directory should now contain:
(llama3) root@intern-studio-50014188:~/opencompass# ls
LICENSE README.md configs docs opencompass.egg-info requirements.txt setup.py tools
OpenCompassData-core-20240207.zip README_zh-CN.md data opencompass requirements run.py tests
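The data/ directory comes from the archive itself. If you want to see which dataset folders a given OpenCompassData zip provides without unpacking it, the standard-library sketch below lists them. It is a hypothetical helper. It assumes the entries are stored as `data/<dataset>/...`, which matches the data directory in the listing above.

```python
import os
import zipfile
from typing import IO, Union


def dataset_dirs_in_archive(archive: Union[str, IO[bytes]]) -> list[str]:
    """List the top-level folders under data/ inside an OpenCompass data zip."""
    names = set()
    with zipfile.ZipFile(archive) as zf:
        for entry in zf.namelist():
            parts = entry.split("/")
            # Assumption: entries look like "data/<dataset>/..."
            if len(parts) >= 3 and parts[0] == "data" and parts[1]:
                names.add(parts[1])
    return sorted(names)


if __name__ == "__main__":
    archive_path = "OpenCompassData-core-20240207.zip"
    if os.path.isfile(archive_path):
        print(dataset_dirs_in_archive(archive_path))
    else:
        print(f"{archive_path} not found in the current directory")
```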

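1.5 Command-line evaluation

1.5.1 List config files and supported dataset names

OpenCompass ships many predefined model and dataset configs. A helper tool lists all the available ones.

# List all configs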

python tools/list_configs.py

# List the configs related to llama (models) and ceval (datasets)

python tools/list_configs.py llama ceval

+----------------------------+-------------------------------------------------------+
| Model                      | Config Path                                           |
|----------------------------+-------------------------------------------------------|
| accessory_llama2_7b        | configs/models/accessory/accessory_llama2_7b.py       |
| hf_codellama_13b           | configs/models/codellama/hf_codellama_13b.py          |
| hf_codellama_13b_instruct  | configs/models/codellama/hf_codellama_13b_instruct.py |
| hf_codellama_13b_python    | configs/models/codellama/hf_codellama_13b_python.py   |
| hf_codellama_34b           | configs/models/codellama/hf_codellama_34b.py          |
| hf_codellama_34b_instruct  | configs/models/codellama/hf_codellama_34b_instruct.py |
| hf_codellama_34b_python    | configs/models/codellama/hf_codellama_34b_python.py   |
| hf_codellama_7b            | configs/models/codellama/hf_codellama_7b.py           |
| hf_codellama_7b_instruct   | configs/models/codellama/hf_codellama_7b_instruct.py  |
| hf_codellama_7b_python     | configs/models/codellama/hf_codellama_7b_python.py    |
| hf_gsm8k_rft_llama7b2_u13b | configs/models/others/hf_gsm8k_rft_llama7b2_u13b.py   |
| hf_llama2_13b              | configs/models/hf_llama/hf_llama2_13b.py              |
| hf_llama2_13b_chat         | configs/models/hf_llama/hf_llama2_13b_chat.py         |
| hf_llama2_70b              | configs/models/hf_llama/hf_llama2_70b.py              |
| hf_llama2_70b_chat         | configs/models/hf_llama/hf_llama2_70b_chat.py         |
| hf_llama2_7b               | configs/models/hf_llama/hf_llama2_7b.py               |
| hf_llama2_7b_chat          | configs/models/hf_llama/hf_llama2_7b_chat.py          |
| hf_llama3_70b              | configs/models/hf_llama/hf_llama3_70b.py              |
| hf_llama3_70b_instruct     | configs/models/hf_llama/hf_llama3_70b_instruct.py     |
| hf_llama3_8b               | configs/models/hf_llama/hf_llama3_8b.py               |
| hf_llama3_8b_instruct      | configs/models/hf_llama/hf_llama3_8b_instruct.py      |
| hf_llama_13b               | configs/models/hf_llama/hf_llama_13b.py               |
| hf_llama_30b               | configs/models/hf_llama/hf_llama_30b.py               |
| hf_llama_65b               | configs/models/hf_llama/hf_llama_65b.py               |
| hf_llama_7b                | configs/models/hf_llama/hf_llama_7b.py                |
| llama2_13b                 | configs/models/llama/llama2_13b.py                    |
| llama2_13b_chat            | configs/models/llama/llama2_13b_chat.py               |
| llama2_70b                 | configs/models/llama/llama2_70b.py                    |
| llama2_70b_chat            | configs/models/llama/llama2_70b_chat.py               |
| llama2_7b                  | configs/models/llama/llama2_7b.py                     |
| llama2_7b_chat             | configs/models/llama/llama2_7b_chat.py                |
| llama_13b                  | configs/models/llama/llama_13b.py                     |
| llama_30b                  | configs/models/llama/llama_30b.py                     |
| llama_65b                  | configs/models/llama/llama_65b.py                     |
| llama_7b                   | configs/models/llama/llama_7b.py                      |
+----------------------------+-------------------------------------------------------+

+--------------------------------+------------------------------------------------------------------+
| Dataset                        | Config Path                                                      |
|--------------------------------+------------------------------------------------------------------|
| base_medium_llama              | configs/datasets/collections/base_medium_llama.py                |
| ceval_clean_ppl                | configs/datasets/ceval/ceval_clean_ppl.py                        |
| ceval_contamination_ppl_810ec6 | configs/datasets/contamination/ceval_contamination_ppl_810ec6.py |
| ceval_gen                      | configs/datasets/ceval/ceval_gen.py                              |
| ceval_gen_2daf24               | configs/datasets/ceval/ceval_gen_2daf24.py                       |
| ceval_gen_5f30c7               | configs/datasets/ceval/ceval_gen_5f30c7.py                       |
| ceval_internal_ppl_1cd8bf      | configs/datasets/ceval/ceval_internal_ppl_1cd8bf.py              |
| ceval_ppl                      | configs/datasets/ceval/ceval_ppl.py                              |
| ceval_ppl_1cd8bf               | configs/datasets/ceval/ceval_ppl_1cd8bf.py                       |
| ceval_ppl_578f8d               | configs/datasets/ceval/ceval_ppl_578f8d.py                       |
| ceval_ppl_93e5ce               | configs/datasets/ceval/ceval_ppl_93e5ce.py                       |
| ceval_zero_shot_gen_bd40ef     | configs/datasets/ceval/ceval_zero_shot_gen_bd40ef.py             |
+--------------------------------+------------------------------------------------------------------+
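Each name in these tables is the file name (without .py) of a config under configs/models/ or configs/datasets/. That name is what you pass to options such as --datasets, as with ceval_gen in the next step. The sketch below builds a similar listing by walking those folders. It is a hypothetical helper for illustration, not the code of tools/list_configs.py. It assumes a keyword matches whenever it appears anywhere in the file name, which fits base_medium_llama appearing under the llama query above.

```python
from pathlib import Path


def find_configs(repo_root: str, *keywords: str) -> dict[str, dict[str, str]]:
    """Map config name -> relative path for model and dataset configs.

    A config matches when its file name (without .py) contains any keyword;
    with no keywords, every config is returned.
    """
    root = Path(repo_root)
    found: dict[str, dict[str, str]] = {"Model": {}, "Dataset": {}}
    for kind, sub in (("Model", "models"), ("Dataset", "datasets")):
        for path in sorted((root / "configs" / sub).rglob("*.py")):
            name = path.stem
            if name.startswith("_"):  # skip __init__.py and private helpers
                continue
            if not keywords or any(k in name for k in keywords):
                found[kind][name] = path.relative_to(root).as_posix()
    return found


if __name__ == "__main__":
    # Run from the opencompass repository root.
    for kind, entries in find_configs(".", "llama", "ceval").items():
        print(kind)
        for name, rel in sorted(entries.items()):
            print(f"  {name:<32} {rel}")
```

Because the name is just the file stem, two configs with the same file name in different folders would collide in this sketch. The real tool may handle that case differently.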

1.5.2 Example: C-Eval (ceval_gen)

python run.py --datasets ceval_gen --hf-path /root/model/Meta-Llama-3-8B-Instruct --tokenizer-path /root/model/Meta-Llama-3-8B-Instruct --tokenizer-kwargs padding_side='left' truncation='left' trust_remote_code=True --model-kwargs trust_remote_code=True device_map='auto' --max-seq-len 2048 --max-out-len 16 --batch-size 4 --num-gpus 1 --debug

Troubleshooting: if the run fails, install the remaining dependencies and set the following environment variables:

pip install -r requirements.txt

pip install protobuf

export MKL_SERVICE_FORCE_INTEL=1

export MKL_THREADING_LAYER=GNU

If you hit ModuleNotFoundError: No module named 'rouge', run:

git clone https://github.com/pltrdy/rouge

cd rouge

python setup.py install

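Command breakdown

python run.py \
    --datasets ceval_gen \
    --hf-path /root/model/Meta-Llama-3-8B-Instruct \
    --tokenizer-path /root/model/Meta-Llama-3-8B-Instruct \
    --tokenizer-kwargs padding_side='left' truncation='left' trust_remote_code=True \
    --model-kwargs device_map='auto' trust_remote_code=True \
    --max-seq-len 2048 \
    --max-out-len 16 \
    --batch-size 4 \
    --num-gpus 1 \
    --debug

--datasets ceval_gen: evaluate on the C-Eval dataset
--hf-path: path to the Hugging Face model
--tokenizer-path: path to the Hugging Face tokenizer (may be omitted if it is the same as the model path)
--tokenizer-kwargs: arguments used to build the tokenizer
--model-kwargs: arguments used to build the model
--max-seq-len 2048: maximum sequence length the model accepts
--max-out-len 16: maximum number of tokens to generate
--batch-size 4: batch size
--num-gpus 1: number of GPUs needed to run the model
--debug: run tasks in the current process and print their logs to the terminal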
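The left-side padding and truncation requested in the tokenizer arguments matter for batched generation with a decoder-only model like Llama 3. Left padding puts every prompt's last real token in the final position, so all rows in a batch continue generating from the same place. Cutting from the left keeps the end of an over-long prompt, where the actual question usually sits, rather than its beginning. The pure-Python sketch below shows both ideas on made-up token ids. It is a conceptual illustration, not how Hugging Face tokenizers or OpenCompass implement them.

```python
def left_truncate_and_pad(
    batch: list[list[int]], max_len: int, pad_id: int
) -> tuple[list[list[int]], list[list[int]]]:
    """Keep at most the last max_len tokens of each sequence, then left-pad.

    Returns (input_ids, attention_mask); mask entries are 0 for padding.
    """
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    # Left truncation: drop tokens from the start, keep the end of the prompt.
    kept = [list(seq[-max_len:]) for seq in batch]
    width = max((len(seq) for seq in kept), default=0)
    input_ids, attention_mask = [], []
    for seq in kept:
        n_pad = width - len(seq)
        # Left padding: pad tokens go in front, so real tokens end in the last column.
        input_ids.append([pad_id] * n_pad + seq)
        attention_mask.append([0] * n_pad + [1] * len(seq))
    return input_ids, attention_mask


if __name__ == "__main__":
    ids, mask = left_truncate_and_pad([[11, 12, 13, 14, 15], [21, 22]], max_len=4, pad_id=0)
    print(ids)   # [[12, 13, 14, 15], [0, 0, 21, 22]]
    print(mask)  # [[1, 1, 1, 1], [0, 0, 1, 1]]
```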

The evaluation takes about 15 minutes. When it finishes, the output looks like this:

dataset                                         version    metric         mode    opencompass.models.huggingface.HuggingFace_meta-llama_Meta-Llama-3-8B-Instruct
----------------------------------------------  ---------  -------------  ------  --------------------------------------------------------------------------------
ceval-computer_network                          db9ce2     accuracy       gen     63.16
ceval-operating_system                          1c2571     accuracy       gen     63.16
ceval-computer_architecture                     a74dad     accuracy       gen     52.38
ceval-college_programming                       4ca32a     accuracy       gen     62.16
ceval-college_physics                           963fa8     accuracy       gen     42.11
ceval-college_chemistry                         e78857     accuracy       gen     29.17
ceval-advanced_mathematics                      ce03e2     accuracy       gen     42.11
ceval-probability_and_statistics                65e812     accuracy       gen     27.78
ceval-discrete_mathematics                      e894ae     accuracy       gen     25
ceval-electrical_engineer                       ae42b9     accuracy       gen     32.43
ceval-metrology_engineer                        ee34ea     accuracy       gen     62.5
ceval-high_school_mathematics                   1dc5bf     accuracy       gen     5.56
ceval-high_school_physics                       adf25f     accuracy       gen     26.32
ceval-high_school_chemistry                     2ed27f     accuracy       gen     63.16
ceval-high_school_biology                       8e2b9a     accuracy       gen     36.84
ceval-middle_school_mathematics                 bee8d5     accuracy       gen     31.58
ceval-middle_school_biology                     86817c     accuracy       gen     71.43
ceval-middle_school_physics                     8accf6     accuracy       gen     57.89
ceval-middle_school_chemistry                   167a15     accuracy       gen     80
ceval-veterinary_medicine                       b4e08d     accuracy       gen     52.17
ceval-college_economics                         f3f4e6     accuracy       gen     45.45
ceval-business_administration                   c1614e     accuracy       gen     30.3
ceval-marxism                                   cf874c     accuracy       gen     47.37
ceval-mao_zedong_thought                        51c7a4     accuracy       gen     50
ceval-education_science                         591fee     accuracy       gen     51.72
ceval-teacher_qualification                     4e4ced     accuracy       gen     72.73
ceval-high_school_politics                      5c0de2     accuracy       gen     68.42
ceval-high_school_geography                     865461     accuracy       gen     42.11
ceval-middle_school_politics                    5be3e7     accuracy       gen     57.14
ceval-middle_school_geography                   8a63be     accuracy       gen     50
ceval-modern_chinese_history                    fc01af     accuracy       gen     52.17
ceval-ideological_and_moral_cultivation         a2aa4a     accuracy       gen     78.95
ceval-logic                                     f5b022     accuracy       gen     40.91
ceval-law                                       a110a1     accuracy       gen     33.33
ceval-chinese_language_and_literature           0f8b68     accuracy       gen     34.78
ceval-art_studies                               2a1300     accuracy       gen     54.55
ceval-professional_tour_guide                   4e673e     accuracy       gen     55.17
ceval-legal_professional                        ce8787     accuracy       gen     30.43
ceval-high_school_chinese                       315705     accuracy       gen     31.58
ceval-high_school_history                       7eb30a     accuracy       gen     65
ceval-middle_school_history                     48ab4a     accuracy       gen     59.09
ceval-civil_servant                             87d061     accuracy       gen     34.04
ceval-sports_science                            70f27b     accuracy       gen     63.16
ceval-plant_protection                          8941f9     accuracy       gen     68.18
ceval-basic_medicine                            c409d6     accuracy       gen     57.89
ceval-clinical_medicine                         49e82d     accuracy       gen     54.55
ceval-urban_and_rural_planner                   95b885     accuracy       gen     52.17
ceval-accountant                                002837     accuracy       gen     44.9
ceval-fire_engineer                             bc23f5     accuracy       gen     38.71
ceval-environmental_impact_assessment_engineer  c64e2d     accuracy       gen     45.16
ceval-tax_accountant                            3a5e3c     accuracy       gen     34.69
ceval-physician                                 6e277d     accuracy       gen     57.14
ceval-stem                                      -          naive_average  gen     46.34
ceval-social-science                            -          naive_average  gen     51.52
ceval-humanities                                -          naive_average  gen     48.72
ceval-other                                     -          naive_average  gen     50.05
ceval-hard                                      -          naive_average  gen     32.65
ceval                                           -          naive_average  gen     48.63

05/06 16:34:08 - OpenCompass - INFO - write summary to /root/opencompass/outputs/default/20240506_162314/summary/summary_20240506_162314.txt

05/06 16:34:08 - OpenCompass - INFO - write csv to /root/opencompass/outputs/default/20240506_162314/summary/summary_20240506_162314.csv

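The evaluation results are stored under /root/opencompass/outputs/default, with one timestamped folder per run. The summary/ subfolder of that run holds the .txt and .csv summaries named in the log above.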
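The .csv summary is convenient for further analysis. The sketch below rests on several assumptions:

- The CSV has the same columns as the printed table: dataset, version, metric and mode, followed by one score column per model.
- Aggregate rows use "-" as their version, as in the output above.
- The newest run can be found via outputs/default/*/summary/*.csv, a pattern inferred from the log paths.

It reads the per-subject scores from the first model column and computes their unweighted mean, which is what the naive_average label suggests.

```python
import csv
import glob
import os
from statistics import mean
from typing import Iterable


def subject_scores(lines: Iterable[str]) -> dict[str, float]:
    """Return {dataset: score} for per-subject rows of a summary CSV."""
    reader = csv.reader(lines)
    next(reader, None)  # skip the header row
    scores: dict[str, float] = {}
    for row in reader:
        if len(row) < 5:
            continue
        dataset, version, _metric, _mode, value = row[:5]
        if version == "-" or value in ("", "-"):  # aggregate or missing rows
            continue
        scores[dataset] = float(value)
    return scores


def naive_average(scores: dict[str, float]) -> float:
    """Unweighted mean of subject scores, rounded to two decimals (needs >= 1 score)."""
    return round(mean(scores.values()), 2)


if __name__ == "__main__":
    csv_files = sorted(glob.glob("outputs/default/*/summary/*.csv"))
    if csv_files:
        latest = csv_files[-1]  # timestamped names such as 20240506_162314 sort chronologically
        with open(latest, newline="") as f:
            scores = subject_scores(f)
        print(f"{os.path.basename(latest)}: {len(scores)} subjects")
        if scores:
            print(f"naive average: {naive_average(scores)}")
            for name, score in sorted(scores.items(), key=lambda kv: kv[1])[:5]:
                print(f"  weakest: {name} {score}")
    else:
        print("no summary CSV found under outputs/default")
```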

1.6 Evaluating with a Python config script

Add an evaluation script named eval_llama3_8b_demo.py to the configs folder with the following content:

from mmengine.config import read_base

with read_base():
    # Relative import: resolves against configs/, where this file lives
    from .datasets.mmlu.mmlu_gen_4d595a import mmlu_datasets

datasets = [*mmlu_datasets]

from opencompass.models import HuggingFaceCausalLM

models = [
    dict(
        type=HuggingFaceCausalLM,
        abbr='Llama3_8b',  # name shown in the results table
        path='/root/model/Meta-Llama-3-8B-Instruct',  # model path
        tokenizer_path='/root/model/Meta-Llama-3-8B-Instruct',  # tokenizer path
        model_kwargs=dict(
            device_map='auto',
            trust_remote_code=True
        ),
        tokenizer_kwargs=dict(
            padding_side='left',
            truncation_side='left',
            trust_remote_code=True,
            use_fast=False
        ),
        # Stop at <|end_of_text|> (128001) or <|eot_id|> (128009)
        generation_kwargs={"eos_token_id": [128001, 128009]},
        batch_padding=True,
        max_out_len=100,
        max_seq_len=2048,
        batch_size=16,
        run_cfg=dict(num_gpus=1),
    )
]
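generation_kwargs above lists two end-of-sequence ids. In the Llama 3 tokenizer these are assumed to be <|end_of_text|> (128001) and <|eot_id|> (128009). The Instruct model closes each reply with <|eot_id|>, so passing both ids lets generation stop at whichever appears first instead of running on to max_out_len. The sketch below applies that stopping rule to an already generated sequence of made-up ids. Hugging Face generate stops decoding early instead of truncating afterwards, so this only shows which tokens end up in the answer.

```python
# Assumed Llama 3 special-token ids: 128001 = <|end_of_text|>, 128009 = <|eot_id|>.
LLAMA3_STOP_IDS = frozenset({128001, 128009})


def cut_at_first_stop(token_ids: list[int], stop_ids: frozenset[int] = LLAMA3_STOP_IDS) -> list[int]:
    """Return the tokens before the first stop id (the stop id itself is dropped)."""
    for i, tok in enumerate(token_ids):
        if tok in stop_ids:
            return token_ids[:i]
    return list(token_ids)


if __name__ == "__main__":
    generated = [791, 4320, 374, 362, 128009, 128006, 78191]  # made-up ids
    print(cut_at_first_stop(generated))  # [791, 4320, 374, 362]
```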

Run: python run.py configs/eval_llama3_8b_demo.py --debug

This run ended with an error and produced no evaluation results. The cause has not yet been tracked down.

2. Official OpenCompass repository and evaluation results

OpenCompass now officially supports Llama 3.

Repository:

https://github.com/open-compass/opencompass/

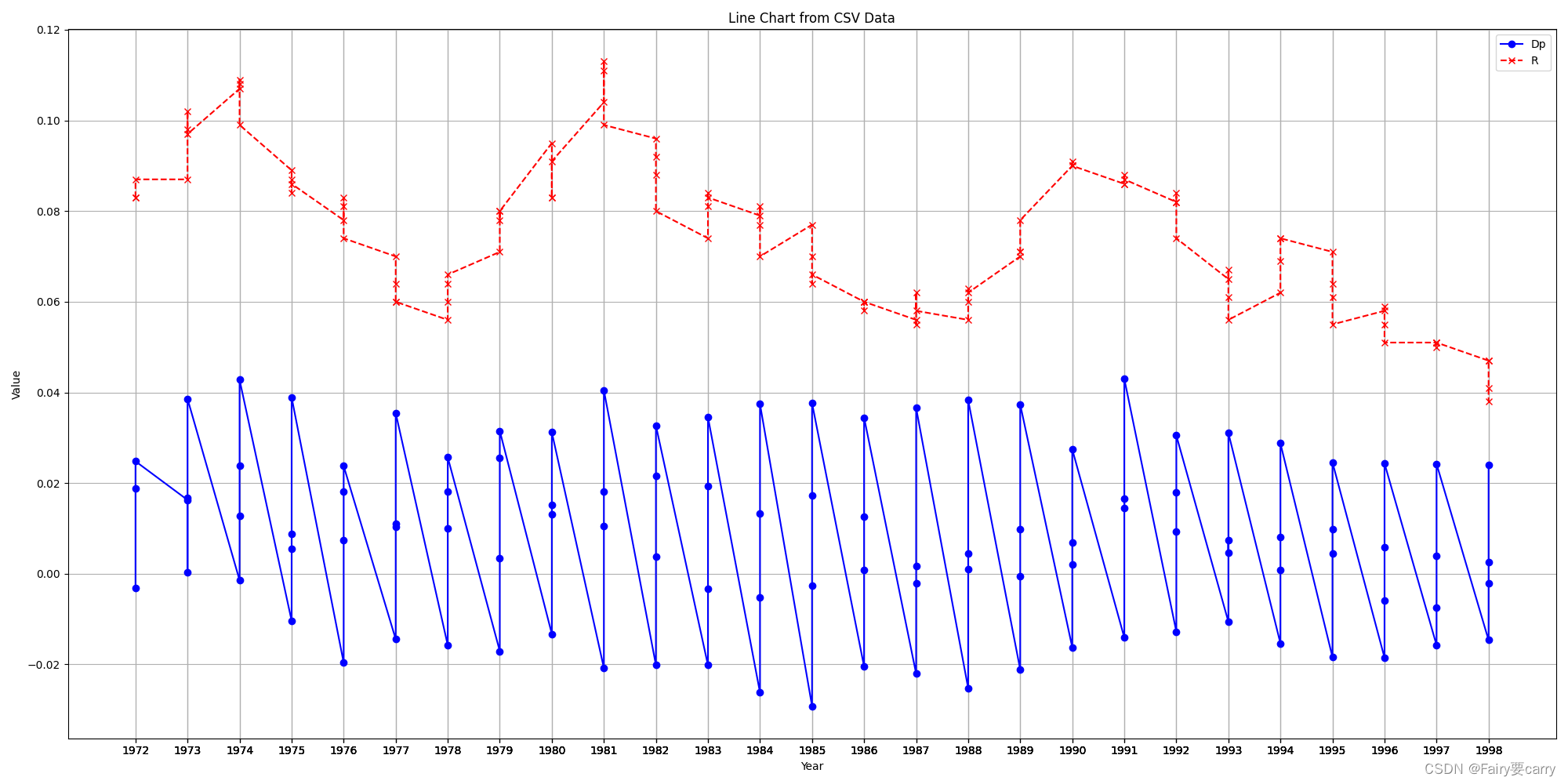

Evaluation results:
