1. Introduction

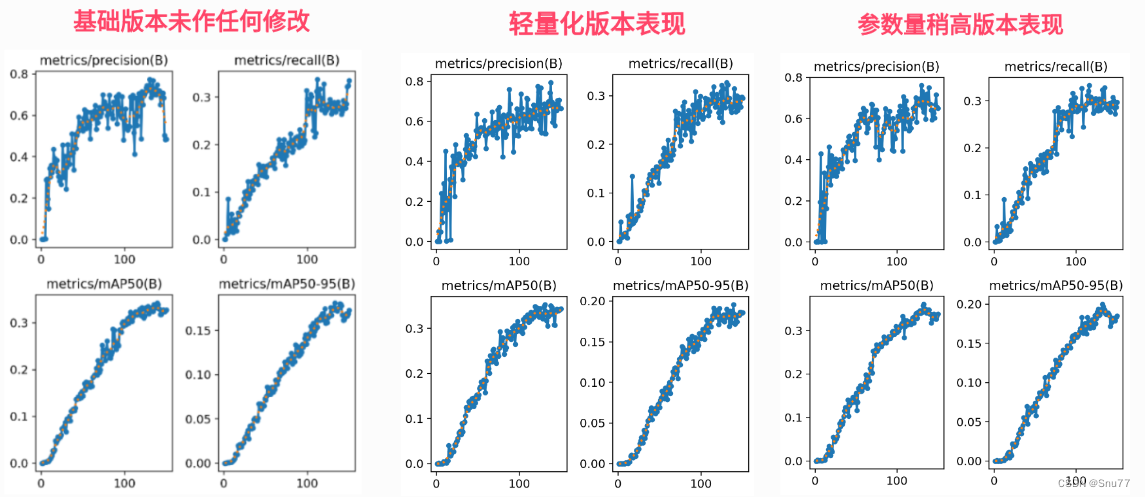

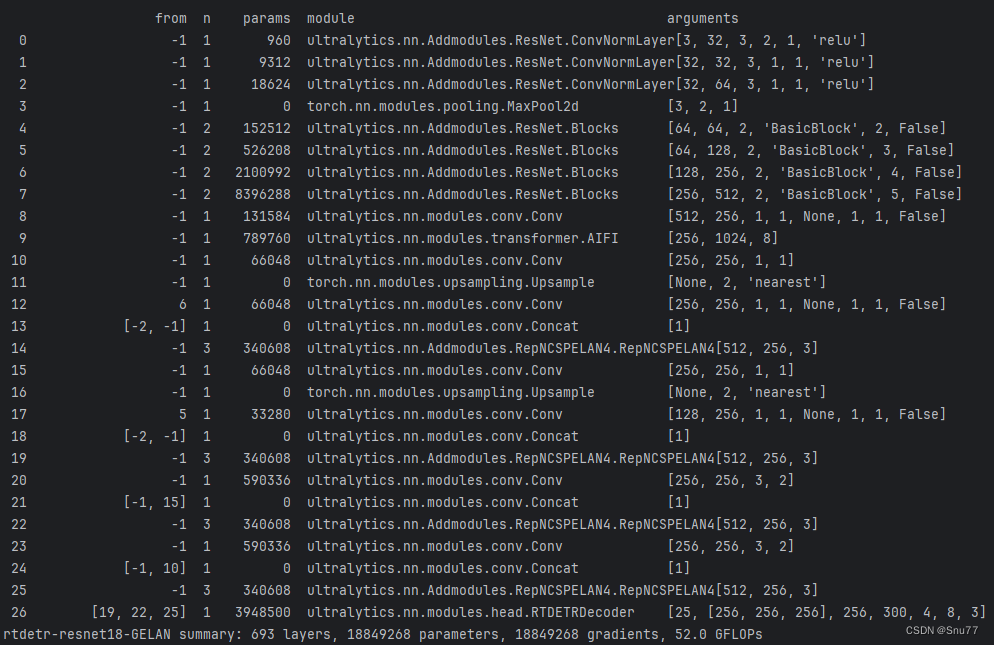

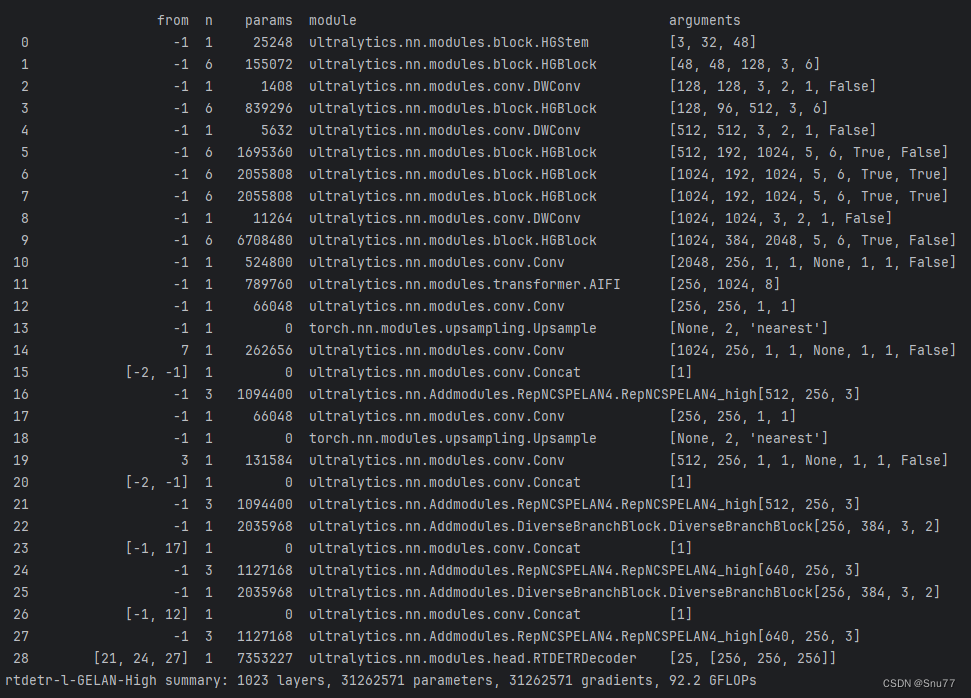

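This article improves the RepC3 structure in RT-DETR with the GELAN module proposed in YOLOv9, which was released on 2024/02/21. GELAN combines the CSPNet and ELAN designs and also uses RepConv, so it captures more useful features during training while collapsing to a single-branch structure at inference, which leaves inference speed unaffected. Two versions are provided. The first has fewer parameters and a slightly smaller accuracy gain (on the HGNet-l version the parameter count drops by about 8.5M and the computation is 77 GFLOPs). The second has somewhat more parameters but performs better than the low-parameter version. Both were put together by me, and both are included in this article so that readers can pick whichever suits their needs. The structure also leaves plenty of room for secondary innovation, which I will cover later.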
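The claim that RepConv does not slow down inference rests on structural re-parameterization: a 3x3 branch and a 1x1 branch that are summed during training can be merged into one 3x3 kernel afterwards. The following is a minimal pure-Python sketch of that idea, assuming a single channel, zero padding, and no BatchNorm; the helper names `conv2d_same`, `pad_1x1_to_3x3`, and `add` are made up for this illustration and are not part of the core code.

```python
def conv2d_same(x, k):
    """Cross-correlate a 2D list x with an odd-sized square kernel k (zero padding)."""
    n, r = len(k), len(k) // 2
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di in range(n):
                for dj in range(n):
                    ii, jj = i + di - r, j + dj - r
                    if 0 <= ii < h and 0 <= jj < w:
                        total += x[ii][jj] * k[di][dj]
            out[i][j] = total
    return out


def pad_1x1_to_3x3(k1):
    """Place the single 1x1 weight at the centre of an otherwise zero 3x3 kernel."""
    v = k1[0][0]
    return [[0.0, 0.0, 0.0], [0.0, v, 0.0], [0.0, 0.0, 0.0]]


def add(a, b):
    """Element-wise sum of two equally sized 2D lists."""
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3 = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]]
k1 = [[0.5]]

# Training-time view: two parallel branches whose outputs are summed.
two_branch = add(conv2d_same(x, k3), conv2d_same(x, k1))

# Inference-time view: one fused 3x3 kernel.
fused = conv2d_same(x, add(k3, pad_1x1_to_3x3(k1)))

max_diff = max(abs(p - q) for ra, rb in zip(two_branch, fused) for p, q in zip(ra, rb))
print("max difference between the two views:", max_diff)
```

Because convolution is linear in the kernel, the two views agree up to floating-point rounding, which is why the extra branch costs nothing once it has been fused.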

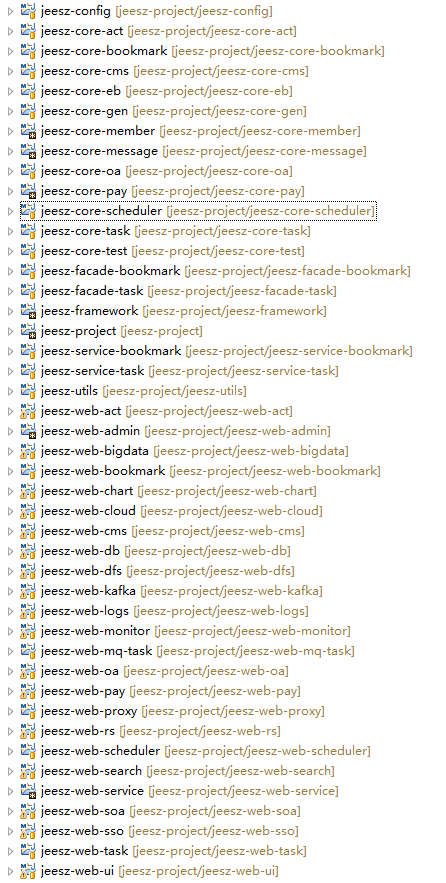

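Column contents: RT-DETR Effective Improvements Series Directory | Over a hundred innovations covering convolutions, backbones, RepC3, attention mechanisms, and the Neck

Column link: RT-DETR paper-oriented column, continuously reproducing top-conference work (RT-DETR, the "paper harvester")

2. How GELAN Works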

2.1 Generalized ELAN

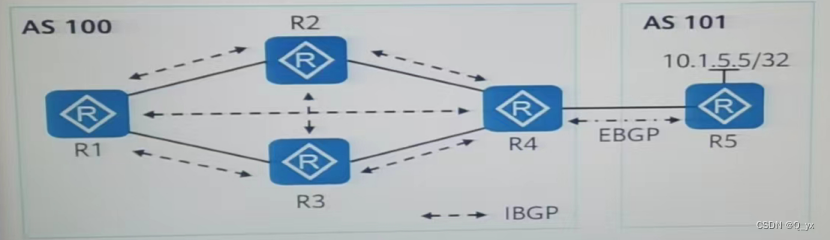

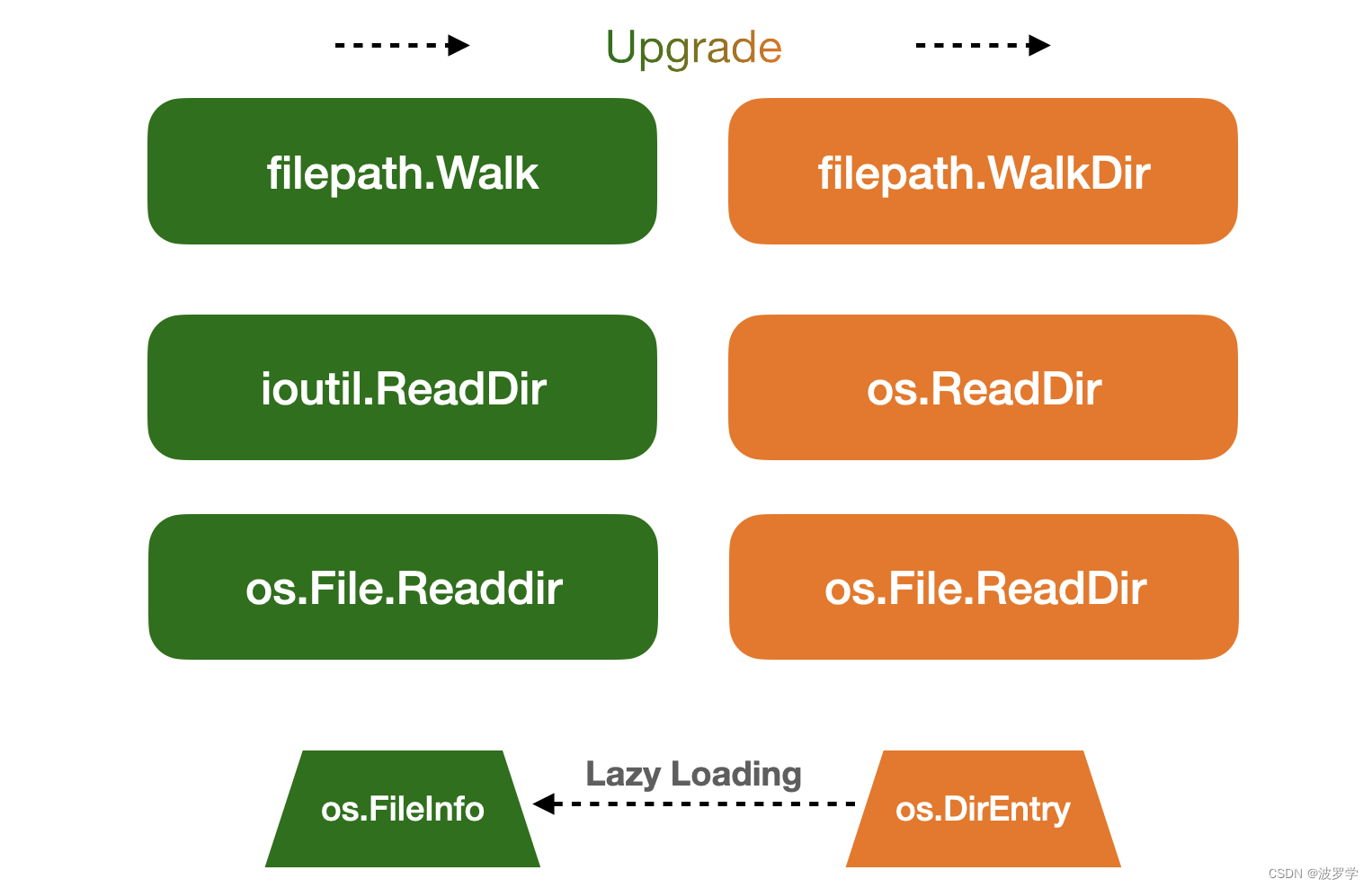

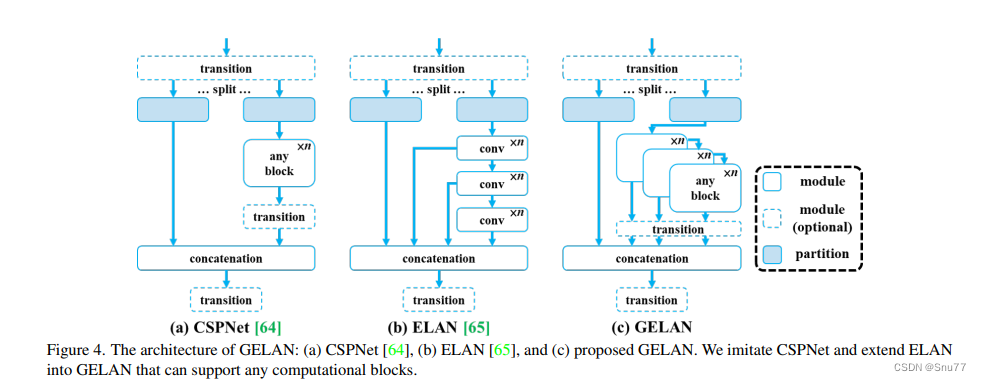

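In this section we describe the proposed new network architecture, GELAN. By combining two neural network architectures, CSPNet and ELAN, both of which are designed with gradient path planning, we designed a generalized efficient layer aggregation network (GELAN) that takes lightweight design, inference speed, and accuracy into account. Its overall architecture is shown in Figure 4. We generalized the capability of ELAN, which originally used only stacks of convolutional layers, to a new architecture that can use any computational block.

Figure 4 shows the architecture of GELAN and how it evolved from CSPNet and ELAN, both of which are designed with gradient path planning.

a) CSPNet: the input passes through a transition layer and is split into two parts, each of which goes through an arbitrary computational block. The branches are then merged again (by concatenation) and passed through another transition layer.

b) ELAN: compared with CSPNet, ELAN uses stacked convolutional layers, where the output of each layer is combined with the input of the next layer and then processed by a convolution.

c) GELAN: GELAN combines the designs of CSPNet and ELAN. It adopts CSPNet's split-and-merge idea and introduces ELAN's layer-wise convolutional processing in each part. The difference is that GELAN is not limited to convolutional layers and can use any computational block, which makes the network more flexible and lets it be tailored to different applications.

GELAN is designed with lightweight structure, inference speed, and accuracy in mind in order to improve overall model performance. The optional modules and partitions shown in the figure further increase the adaptability and customizability of the network. Because the structure supports many kinds of computational blocks, it can adapt better to different compute requirements and hardware constraints.

Overall, the GELAN architecture aims to provide a more general and efficient network that can handle tasks ranging from lightweight to complex while maintaining or improving computational efficiency and performance. In this way GELAN is intended to address the limitations of existing architectures and offer a scalable solution for future developments in deep learning.

One look at the figure is enough to see what has been fused: CSPNet's way of stacking "any block" modules has been combined with ELAN.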
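The data flow described above (CSPNet-style split, ELAN-style chaining of arbitrary blocks, then concatenation) can be sketched in a few lines. This is a toy model only: a "feature map" is a plain list of per-channel values, the 1x1 transition convolutions are left out, and `gelan_forward` is a name invented for this illustration.

```python
def gelan_forward(x, blocks):
    """Toy GELAN data flow on a list of channel values.

    1. Split the input channels into two halves (CSPNet-style split).
    2. Feed the most recent part through each block in turn and keep
       every intermediate result (ELAN-style aggregation).
    3. Concatenate all parts along the channel axis.
    """
    half = len(x) // 2
    parts = [x[:half], x[half:]]
    for block in blocks:
        parts.append(block(parts[-1]))  # each block sees the previous block's output
    return [v for part in parts for v in part]


# "Any computational block": here two arbitrary functions stand in for
# the RepNCSP + Conv stages used by RepNCSPELAN4.
double = lambda part: [v * 2 for v in part]
plus_one = lambda part: [v + 1 for v in part]

result = gelan_forward([1, 2, 3, 4], [double, plus_one])
print(result)
```

Swapping `double` and `plus_one` for other callables changes the computation without touching the split, chain, and concatenate skeleton, which is the sense in which GELAN generalizes ELAN.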

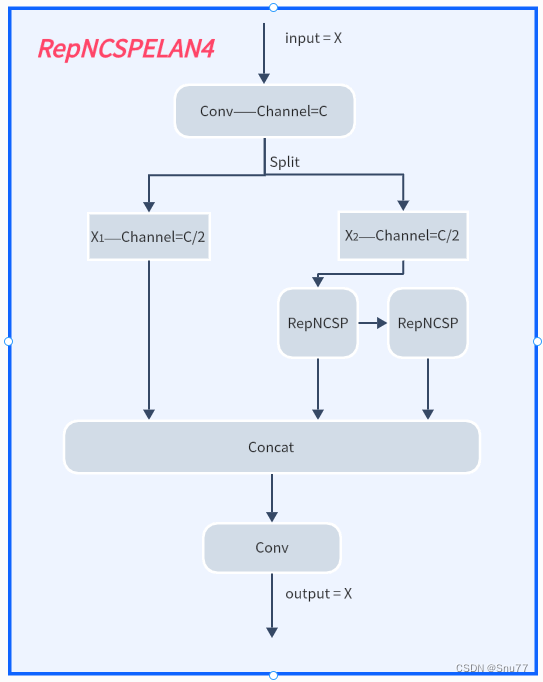

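2.2 Generalized ELAN Structure Diagram

The main innovation of YOLOv9 that is available so far is the GELAN structure. I drew its structure diagram by analyzing the code against the paper.

YOLOv9's yaml file introduces a structure named RepNCSPELAN4. I did not mark the channel count after the concat in the diagram, because it is computed from intermediate variables that depend on the user's settings.

Its code and structure diagram are shown below.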

    class RepNCSPELAN4(nn.Module):
        # csp-elan
        def __init__(self, c1, c2, c5=1):  # c5 = repeat
            super().__init__()
            c3 = int(c2 / 2)
            c4 = int(c3 / 2)
            self.c = c3 // 2
            self.cv1 = Conv(c1, c3, 1, 1)
            self.cv2 = nn.Sequential(RepNCSP(c3 // 2, c4, c5), Conv(c4, c4, 3, 1))
            self.cv3 = nn.Sequential(RepNCSP(c4, c4, c5), Conv(c4, c4, 3, 1))
            self.cv4 = Conv(c3 + (2 * c4), c2, 1, 1)

        def forward(self, x):
            y = list(self.cv1(x).chunk(2, 1))
            y.extend((m(y[-1])) for m in [self.cv2, self.cv3])
            return self.cv4(torch.cat(y, 1))

        def forward_split(self, x):
            y = list(self.cv1(x).split((self.c, self.c), 1))
            y.extend(m(y[-1]) for m in [self.cv2, self.cv3])
            return self.cv4(torch.cat(y, 1))
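Since the channel count after the concat depends on the constructor arguments, it can help to work it out for a concrete setting. The sketch below only repeats the integer arithmetic from `__init__`; the function name `elan_channels` and the `high` flag are introduced here for illustration, with `high=True` mirroring the `c3 = c2` choice of the `RepNCSPELAN4_high` class given in the core code section.

```python
def elan_channels(c2, high=False):
    """Reproduce the channel bookkeeping of RepNCSPELAN4 (or the _high variant)."""
    c3 = c2 if high else int(c2 / 2)   # width after cv1
    c4 = int(c3 / 2)                   # width of each RepNCSP + Conv stage
    assert c3 % 2 == 0, "sketch assumes c3 splits into two equal halves"
    split = c3 // 2                    # each half produced by chunk(2, 1)
    concat = 2 * split + 2 * c4        # two halves plus the outputs of cv2 and cv3
    return {"c3": c3, "c4": c4, "split": split, "concat": concat}


low = elan_channels(256)
high = elan_channels(256, high=True)
print("low :", low)
print("high:", high)
```

For `c2 = 256` the concatenated tensor entering `cv4` is as wide as the output in the low-parameter variant and twice as wide in the high-parameter variant, which is where the extra parameters come from.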

3. Core Code

See Section 4 for how to use the core code!
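For readers following the `_fuse_bn_tensor` and `get_equivalent_kernel_bias` methods of `RepConvN` in this section, the folding they perform can be written out as below. This is my reading of the code rather than a formula quoted from the YOLOv9 paper; $W$ is a branch's convolution weight, and $\gamma$, $\beta$, $\mu$, $\sigma^2$, $\epsilon$ are the BatchNorm weight, bias, running mean, running variance, and epsilon of that branch.

$$
W' = \frac{\gamma}{\sqrt{\sigma^2 + \epsilon}}\, W, \qquad
b' = \beta - \frac{\mu\,\gamma}{\sqrt{\sigma^2 + \epsilon}}
$$

$$
W_{\text{fused}} = W'_{3\times 3} + \operatorname{pad}\!\left(W'_{1\times 1}\right), \qquad
b_{\text{fused}} = b'_{3\times 3} + b'_{1\times 1}
$$

Here $\operatorname{pad}$ zero-pads the 1x1 kernel to 3x3. The identity-branch term that appears in the code contributes nothing in this implementation, because `self.bn` is set to `None`.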

    import torch
    import torch.nn as nn
    import numpy as np

    __all__ = ['RepNCSPELAN4', 'RepNCSPELAN4_high']


    class RepConvN(nn.Module):
        """RepConv is a basic rep-style block, including training and deploy status
        This code is based on https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py
        """
        default_act = nn.SiLU()  # default activation

        def __init__(self, c1, c2, k=3, s=1, p=1, g=1, d=1, act=True, bn=False, deploy=False):
            super().__init__()
            assert k == 3 and p == 1
            self.g = g
            self.c1 = c1
            self.c2 = c2
            self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

            self.bn = None
            self.conv1 = Conv(c1, c2, k, s, p=p, g=g, act=False)
            self.conv2 = Conv(c1, c2, 1, s, p=(p - k // 2), g=g, act=False)

        def forward_fuse(self, x):
            """Forward process"""
            return self.act(self.conv(x))

        def forward(self, x):
            """Forward process"""
            id_out = 0 if self.bn is None else self.bn(x)
            return self.act(self.conv1(x) + self.conv2(x) + id_out)

        def get_equivalent_kernel_bias(self):
            kernel3x3, bias3x3 = self._fuse_bn_tensor(self.conv1)
            kernel1x1, bias1x1 = self._fuse_bn_tensor(self.conv2)
            kernelid, biasid = self._fuse_bn_tensor(self.bn)
            return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

        def _avg_to_3x3_tensor(self, avgp):
            channels = self.c1
            groups = self.g
            kernel_size = avgp.kernel_size
            input_dim = channels // groups
            k = torch.zeros((channels, input_dim, kernel_size, kernel_size))
            k[np.arange(channels), np.tile(np.arange(input_dim), groups), :, :] = 1.0 / kernel_size ** 2
            return k

        def _pad_1x1_to_3x3_tensor(self, kernel1x1):
            if kernel1x1 is None:
                return 0
            else:
                return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

        def _fuse_bn_tensor(self, branch):
            if branch is None:
                return 0, 0
            if isinstance(branch, Conv):
                kernel = branch.conv.weight
                running_mean = branch.bn.running_mean
                running_var = branch.bn.running_var
                gamma = branch.bn.weight
                beta = branch.bn.bias
                eps = branch.bn.eps
            elif isinstance(branch, nn.BatchNorm2d):
                if not hasattr(self, 'id_tensor'):
                    input_dim = self.c1 // self.g
                    kernel_value = np.zeros((self.c1, input_dim, 3, 3), dtype=np.float32)
                    for i in range(self.c1):
                        kernel_value[i, i % input_dim, 1, 1] = 1
                    self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)
                kernel = self.id_tensor
                running_mean = branch.running_mean
                running_var = branch.running_var
                gamma = branch.weight
                beta = branch.bias
                eps = branch.eps
            std = (running_var + eps).sqrt()
            t = (gamma / std).reshape(-1, 1, 1, 1)
            return kernel * t, beta - running_mean * gamma / std

        def fuse_convs(self):
            if hasattr(self, 'conv'):
                return
            kernel, bias = self.get_equivalent_kernel_bias()
            self.conv = nn.Conv2d(in_channels=self.conv1.conv.in_channels,
                                  out_channels=self.conv1.conv.out_channels,
                                  kernel_size=self.conv1.conv.kernel_size,
                                  stride=self.conv1.conv.stride,
                                  padding=self.conv1.conv.padding,
                                  dilation=self.conv1.conv.dilation,
                                  groups=self.conv1.conv.groups,
                                  bias=True).requires_grad_(False)
            self.conv.weight.data = kernel
            self.conv.bias.data = bias
            for para in self.parameters():
                para.detach_()
            self.__delattr__('conv1')
            self.__delattr__('conv2')
            if hasattr(self, 'nm'):
                self.__delattr__('nm')
            if hasattr(self, 'bn'):
                self.__delattr__('bn')
            if hasattr(self, 'id_tensor'):
                self.__delattr__('id_tensor')


    class RepNBottleneck(nn.Module):
        # Standard bottleneck
        def __init__(self, c1, c2, shortcut=True, g=1, k=(3, 3), e=0.5):  # ch_in, ch_out, shortcut, kernels, groups, expand
            super().__init__()
            c_ = int(c2 * e)  # hidden channels
            self.cv1 = RepConvN(c1, c_, k[0], 1)
            self.cv2 = Conv(c_, c2, k[1], 1, g=g)
            self.add = shortcut and c1 == c2

        def forward(self, x):
            return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


    class RepNCSP(nn.Module):
        # CSP Bottleneck with 3 convolutions
        def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):  # ch_in, ch_out, number, shortcut, groups, expansion
            super().__init__()
            c_ = int(c2 * e)  # hidden channels
            self.cv1 = Conv(c1, c_, 1, 1)
            self.cv2 = Conv(c1, c_, 1, 1)
            self.cv3 = Conv(2 * c_, c2, 1)  # optional act=FReLU(c2)
            self.m = nn.Sequential(*(RepNBottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)))

        def forward(self, x):
            return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), 1))


    def autopad(k, p=None, d=1):  # kernel, padding, dilation
        # Pad to 'same' shape outputs
        if d > 1:
            k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
        if p is None:
            p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
        return p


    class Conv(nn.Module):
        # Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)
        default_act = nn.SiLU()  # default activation

        def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
            super().__init__()
            self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
            self.bn = nn.BatchNorm2d(c2)
            self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

        def forward(self, x):
            return self.act(self.bn(self.conv(x)))

        def forward_fuse(self, x):
            return self.act(self.conv(x))


    class RepNCSPELAN4(nn.Module):
        # csp-elan
        def __init__(self, c1, c2, c5=1):  # c5 = repeat
            super().__init__()
            c3 = int(c2 / 2)
            c4 = int(c3 / 2)
            self.c = c3 // 2
            self.cv1 = Conv(c1, c3, 1, 1)
            self.cv2 = nn.Sequential(RepNCSP(c3 // 2, c4, c5), Conv(c4, c4, 3, 1))
            self.cv3 = nn.Sequential(RepNCSP(c4, c4, c5), Conv(c4, c4, 3, 1))
            self.cv4 = Conv(c3 + (2 * c4), c2, 1, 1)

        def forward(self, x):
            y = list(self.cv1(x).chunk(2, 1))
            y.extend((m(y[-1])) for m in [self.cv2, self.cv3])
            return self.cv4(torch.cat(y, 1))

        def forward_split(self, x):
            y = list(self.cv1(x).split((self.c, self.c), 1))
            y.extend(m(y[-1]) for m in [self.cv2, self.cv3])
            return self.cv4(torch.cat(y, 1))


    class RepNCSPELAN4_high(nn.Module):
        # csp-elan
        def __init__(self, c1, c2, c5=1):  # c5 = repeat
            super().__init__()
            c3 = c2
            c4 = int(c3 / 2)
            self.c = c3 // 2
            self.cv1 = Conv(c1, c3, 1, 1)
            self.cv2 = nn.Sequential(RepNCSP(c3 // 2, c4, c5), Conv(c4, c4, 3, 1))
            self.cv3 = nn.Sequential(RepNCSP(c4, c4, c5), Conv(c4, c4, 3, 1))
            self.cv4 = Conv(c3 + (2 * c4), c2, 1, 1)

        def forward(self, x):
            y = list(self.cv1(x).chunk(2, 1))
            y.extend((m(y[-1])) for m in [self.cv2, self.cv3])
            return self.cv4(torch.cat(y, 1))

        def forward_split(self, x):
            y = list(self.cv1(x).split((self.c, self.c), 1))
            y.extend(m(y[-1]) for m in [self.cv2, self.cv3])
            return self.cv4(torch.cat(y, 1))


    if __name__ == "__main__":
        # Generating Sample image
        image_size = (1, 24, 224, 224)
        image = torch.rand(*image_size)

        # Model: the constructor only takes (c1, c2, c5), so pass three arguments
        model = RepNCSPELAN4(24, 24, 1)
        out = model(image)
        print(out.size())

4. Step-by-Step Guide to Adding the GELAN Module

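4.1 Modification 1

Step one is, as usual, to create the file. Under the ultralytics/nn/modules folder, create a directory named 'Addmodules' (if you are using the files from my group, it already exists and you do not need to create it). Then create a new py file inside it and paste the core code into it.

4.2 Modification 2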

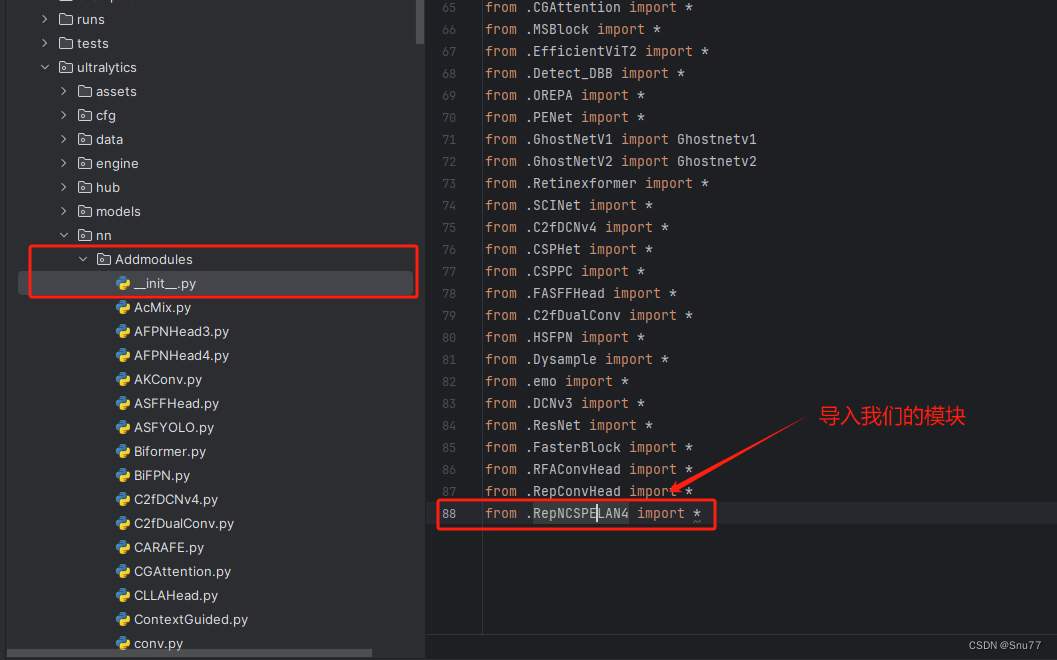

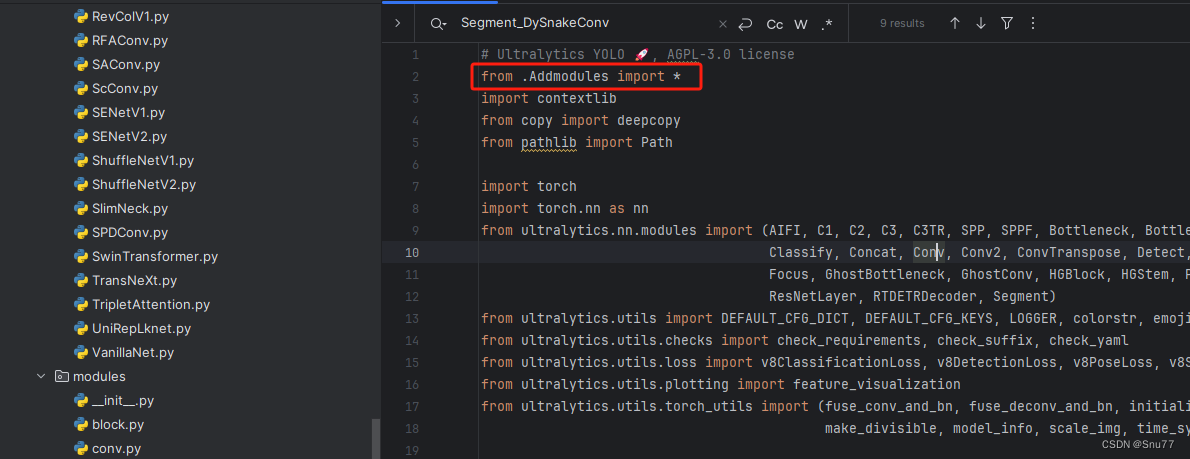

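Step two: in that directory, create a new py file named '__init__.py' (if you are using the files from my group, it already exists and you do not need to create it), then import our module inside it, as shown in the figure.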
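The first two steps amount to building a small Python package whose `__init__.py` re-exports the new classes. The sketch below reproduces that layout in a temporary directory so it can be run anywhere. The names `Addmodules_demo` and `GELAN.py` are hypothetical (the article does not name the py file), and the class bodies are empty stand-ins for the real core code.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def build_demo_package(root: Path) -> None:
    """Create <root>/Addmodules_demo with a module file and an __init__.py."""
    pkg = root / "Addmodules_demo"
    pkg.mkdir()
    # Stand-in for the file that would hold the core code.
    (pkg / "GELAN.py").write_text(
        "__all__ = ['RepNCSPELAN4', 'RepNCSPELAN4_high']\n"
        "class RepNCSPELAN4: pass\n"
        "class RepNCSPELAN4_high: pass\n"
        "class _Helper: pass\n"
    )
    # The package init re-exports whatever the module lists in __all__.
    (pkg / "__init__.py").write_text("from .GELAN import *\n")


with tempfile.TemporaryDirectory() as tmp:
    build_demo_package(Path(tmp))
    sys.path.insert(0, tmp)
    importlib.invalidate_caches()
    try:
        demo = importlib.import_module("Addmodules_demo")
        exported = sorted(name for name in vars(demo) if name.startswith("Rep"))
        has_helper = hasattr(demo, "_Helper")
    finally:
        sys.path.remove(tmp)

print("re-exported:", exported)
print("helper leaked:", has_helper)
```

The `__all__` list at the top of the core code is what decides which names a star import makes visible, so only the two GELAN classes reach the package namespace.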

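4.3 Modification 3

Step three: open the file 'ultralytics/nn/tasks.py' and import and register our module there (if you are using the files from my group, this is already done and you can go straight to step four).

From now on all tutorials will follow this unified format, because I assume by default that you are modifying the files from my group.

4.4 Modification 4

Add the module in parse_model in the same way as I have.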
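To make clearer what registering a module in parse_model achieves, here is a simplified stand-in for the argument handling such a builder performs on one yaml row. It is a sketch under assumptions, not the actual ultralytics code: `resolve_row` and the `repeat_as_arg` flag are invented for this illustration, width scaling is ignored, and whether the author's registration passes the yaml repeats as the third constructor argument (`c5`) is not shown in the article.

```python
def resolve_row(ch, row, repeat_as_arg):
    """Turn one yaml row [from, repeats, module, args] into constructor arguments.

    ch            : output channels of all layers built so far
    repeat_as_arg : if True, the yaml repeat count is handed to the module as
                    its third argument and the module is instantiated once
    """
    f, n, name, args = row
    c1 = ch[f]        # input channels come from the layer named by `from`
    c2 = args[0]      # the first yaml argument is the output channel count
    full_args = [c1, c2, *args[1:]]
    if repeat_as_arg:
        full_args.insert(2, n)
        n = 1
    return full_args, n


# A row as it appears in the head of the configs, fed by a 512-channel Concat.
row = [-1, 3, "RepNCSPELAN4", [256]]
with_repeat = resolve_row([512], row, repeat_as_arg=True)
without_repeat = resolve_row([512], row, repeat_as_arg=False)
print(with_repeat)
print(without_repeat)
```

In the first case the class would be called as `RepNCSPELAN4(512, 256, 3)`; in the second it would be called as `RepNCSPELAN4(512, 256)` and the builder would be left to stack three copies itself.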

That completes the modifications. You can copy the yaml files below and run them.

5. GELAN YAML Files and Training Records

5.1 YAML files for the low-parameter GELAN version

5.1.1 ResNet18 version

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # RT-DETR-l object detection model with P3-P5 outputs. For details see https://docs.ultralytics.com/models/rtdetr

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n-cls.yaml' will call yolov8-cls.yaml with scale 'n'
      # [depth, width, max_channels]
      l: [1.00, 1.00, 1024]

    backbone:
      # [from, repeats, module, args]
      - [-1, 1, ConvNormLayer, [32, 3, 2, 1, 'relu']] # 0-P1
      - [-1, 1, ConvNormLayer, [32, 3, 1, 1, 'relu']] # 1
      - [-1, 1, ConvNormLayer, [64, 3, 1, 1, 'relu']] # 2
      - [-1, 1, nn.MaxPool2d, [3, 2, 1]] # 3-P2
      - [-1, 2, Blocks, [64, BasicBlock, 2, False]] # 4
      - [-1, 2, Blocks, [128, BasicBlock, 3, False]] # 5-P3
      - [-1, 2, Blocks, [256, BasicBlock, 4, False]] # 6-P4
      - [-1, 2, Blocks, [512, BasicBlock, 5, False]] # 7-P5

    head:
      - [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 8 input_proj.2
      - [-1, 1, AIFI, [1024, 8]]
      - [-1, 1, Conv, [256, 1, 1]] # 10, Y5, lateral_convs.0

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 11
      - [6, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 12 input_proj.1
      - [[-2, -1], 1, Concat, [1]]
      - [-1, 3, RepNCSPELAN4, [256]] # 14, fpn_blocks.0
      - [-1, 1, Conv, [256, 1, 1]] # 15, Y4, lateral_convs.1

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 16
      - [5, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 17 input_proj.0
      - [[-2, -1], 1, Concat, [1]] # 18 cat backbone P4
      - [-1, 3, RepNCSPELAN4, [256]] # X3 (19), fpn_blocks.1

      - [-1, 1, Conv, [256, 3, 2]] # 20, downsample_convs.0
      - [[-1, 15], 1, Concat, [1]] # 21 cat Y4
      - [-1, 3, RepNCSPELAN4, [256]] # F4 (22), pan_blocks.0

      - [-1, 1, Conv, [256, 3, 2]] # 23, downsample_convs.1
      - [[-1, 10], 1, Concat, [1]] # 24 cat Y5
      - [-1, 3, RepNCSPELAN4, [256]] # F5 (25), pan_blocks.1

      - [[19, 22, 25], 1, RTDETRDecoder, [nc, 256, 300, 4, 8, 3]] # Detect(P3, P4, P5)
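To see how the `from` column wires the head of the ResNet18 config above together, the layers can be traced by hand with a few lines of Python. This is an illustrative sketch: `trace_channels` is a made-up helper, the backbone output widths are my assumption for a ResNet18 with BasicBlock (no channel expansion), and repeats are omitted because they do not change channel counts.

```python
# Assumed output channels of backbone layers 0-7 (an assumption, not stated in the article).
BACKBONE_OUT = [32, 32, 64, 64, 64, 128, 256, 512]

# (from, module, out_channels) for head layers 8-25. None means "same as input"
# for AIFI and Upsample, and "sum of inputs" for Concat.
HEAD = [
    (-1, "Conv", 256),           # 8
    (-1, "AIFI", None),          # 9
    (-1, "Conv", 256),           # 10 (Y5)
    (-1, "Upsample", None),      # 11
    (6, "Conv", 256),            # 12
    ([-2, -1], "Concat", None),  # 13
    (-1, "RepNCSPELAN4", 256),   # 14
    (-1, "Conv", 256),           # 15 (Y4)
    (-1, "Upsample", None),      # 16
    (5, "Conv", 256),            # 17
    ([-2, -1], "Concat", None),  # 18
    (-1, "RepNCSPELAN4", 256),   # 19 (X3)
    (-1, "Conv", 256),           # 20
    ([-1, 15], "Concat", None),  # 21
    (-1, "RepNCSPELAN4", 256),   # 22 (F4)
    (-1, "Conv", 256),           # 23
    ([-1, 10], "Concat", None),  # 24
    (-1, "RepNCSPELAN4", 256),   # 25 (F5)
]


def trace_channels(backbone_out, head):
    """Return the output channel count of every layer, backbone first."""
    ch = list(backbone_out)
    for src, module, c2 in head:
        if module == "Concat":
            out = sum(ch[i] for i in src)  # negative indices count back from the current layer
        elif c2 is None:
            out = ch[src]
        else:
            out = c2
        ch.append(out)
    return ch


channels = trace_channels(BACKBONE_OUT, HEAD)
for idx in (13, 18, 21, 24):
    print(f"layer {idx} (Concat) -> {channels[idx]} channels")
print("decoder inputs:", [channels[i] for i in (19, 22, 25)])
```

Every RepNCSPELAN4 in the head therefore receives a 512-channel concatenation and reduces it to 256 channels, and the three layers feeding RTDETRDecoder all have the same width.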

5.1.2 ResNet50 version

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # RT-DETR-l object detection model with P3-P5 outputs. For details see https://docs.ultralytics.com/models/rtdetr

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n-cls.yaml' will call yolov8-cls.yaml with scale 'n'
      # [depth, width, max_channels]
      l: [1.00, 1.00, 1024]

    backbone:
      # [from, repeats, module, args]
      - [-1, 1, ConvNormLayer, [32, 3, 2, 1, 'relu']] # 0-P1
      - [-1, 1, ConvNormLayer, [32, 3, 1, 1, 'relu']] # 1
      - [-1, 1, ConvNormLayer, [64, 3, 1, 1, 'relu']] # 2
      - [-1, 1, nn.MaxPool2d, [3, 2, 1]] # 3-P2
      - [-1, 3, Blocks, [64, BottleNeck, 2, False]] # 4
      - [-1, 4, Blocks, [128, BottleNeck, 3, False]] # 5-P3
      - [-1, 6, Blocks, [256, BottleNeck, 4, False]] # 6-P4
      - [-1, 3, Blocks, [512, BottleNeck, 5, False]] # 7-P5

    head:
      - [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 8 input_proj.2
      - [-1, 1, AIFI, [1024, 8]] # 9
      - [-1, 1, Conv, [256, 1, 1]] # 10, Y5, lateral_convs.0

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 11
      - [6, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 12 input_proj.1
      - [[-2, -1], 1, Concat, [1]] # 13
      - [-1, 3, RepNCSPELAN4, [256]] # 14, fpn_blocks.0
      - [-1, 1, Conv, [256, 1, 1]] # 15, Y4, lateral_convs.1

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 16
      - [5, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 17 input_proj.0
      - [[-2, -1], 1, Concat, [1]] # 18 cat backbone P4
      - [-1, 3, RepNCSPELAN4, [256]] # X3 (19), fpn_blocks.1

      - [-1, 1, Conv, [256, 3, 2]] # 20, downsample_convs.0
      - [[-1, 15], 1, Concat, [1]] # 21 cat Y4
      - [-1, 3, RepNCSPELAN4, [256]] # F4 (22), pan_blocks.0

      - [-1, 1, Conv, [256, 3, 2]] # 23, downsample_convs.1
      - [[-1, 10], 1, Concat, [1]] # 24 cat Y5
      - [-1, 3, RepNCSPELAN4, [256]] # F5 (25), pan_blocks.1

      - [[19, 22, 25], 1, RTDETRDecoder, [nc, 256, 300, 4, 8, 6]] # Detect(P3, P4, P5)

5.1.3 HGNetv2 version

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # RT-DETR-l object detection model with P3-P5 outputs. For details see https://docs.ultralytics.com/models/rtdetr

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n-cls.yaml' will call yolov8-cls.yaml with scale 'n'
      # [depth, width, max_channels]
      l: [1.00, 1.00, 1024]

    backbone:
      # [from, repeats, module, args]
      - [-1, 1, HGStem, [32, 48]] # 0-P2/4
      - [-1, 6, HGBlock, [48, 128, 3]] # stage 1

      - [-1, 1, DWConv, [128, 3, 2, 1, False]] # 2-P3/8
      - [-1, 6, HGBlock, [96, 512, 3]] # stage 2

      - [-1, 1, DWConv, [512, 3, 2, 1, False]] # 4-P3/16
      - [-1, 6, HGBlock, [192, 1024, 5, True, False]] # cm, c2, k, light, shortcut
      - [-1, 6, HGBlock, [192, 1024, 5, True, True]]
      - [-1, 6, HGBlock, [192, 1024, 5, True, True]] # stage 3

      - [-1, 1, DWConv, [1024, 3, 2, 1, False]] # 8-P4/32
      - [-1, 6, HGBlock, [384, 2048, 5, True, False]] # stage 4

    head:
      - [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 10 input_proj.2
      - [-1, 1, AIFI, [1024, 8]]
      - [-1, 1, Conv, [256, 1, 1]] # 12, Y5, lateral_convs.0

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [7, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 14 input_proj.1
      - [[-2, -1], 1, Concat, [1]]
      - [-1, 3, RepNCSPELAN4, [256]] # 16, fpn_blocks.0
      - [-1, 1, Conv, [256, 1, 1]] # 17, Y4, lateral_convs.1

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [3, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 19 input_proj.0
      - [[-2, -1], 1, Concat, [1]] # cat backbone P4
      - [-1, 3, RepNCSPELAN4, [256]] # X3 (21), fpn_blocks.1

      # DiverseBranchBlock is not defined in this article; use Conv in its place if it is not registered in your codebase
      - [-1, 1, DiverseBranchBlock, [384, 3, 2]] # 22, downsample_convs.0
      - [[-1, 17], 1, Concat, [1]] # cat Y4
      - [-1, 3, RepNCSPELAN4, [256]] # F4 (24), pan_blocks.0

      - [-1, 1, DiverseBranchBlock, [384, 3, 2]] # 25, downsample_convs.1
      - [[-1, 12], 1, Concat, [1]] # cat Y5
      - [-1, 3, RepNCSPELAN4, [256]] # F5 (27), pan_blocks.1

      - [[21, 24, 27], 1, RTDETRDecoder, [nc]] # Detect(P3, P4, P5)

5.2 YAML files for the high-parameter GELAN version

5.2.1 ResNet18 version

Note that the configuration given under this heading is a YOLOv8-style config (YOLOv8 backbone with a `Detect` head), not an RT-DETR ResNet18 config. Judging from sections 5.2.2 and 5.2.3, an RT-DETR ResNet18 high-parameter variant would presumably be the 5.1.1 config with every RepNCSPELAN4 replaced by RepNCSPELAN4_high.

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
      s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
      m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
      l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
      x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

    # YOLOv8.0n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
      - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
      - [-1, 1, RepNCSPELAN4_high, [128]]
      - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
      - [-1, 1, RepNCSPELAN4_high, [256]]
      - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
      - [-1, 1, RepNCSPELAN4_high, [512]]
      - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
      - [-1, 1, RepNCSPELAN4_high, [1024]]
      - [-1, 1, SPPF, [1024, 5]] # 9

    # YOLOv8.0n head
    head:
      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 6], 1, Concat, [1]] # cat backbone P4
      - [-1, 1, RepNCSPELAN4_high, [512]] # 12

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [[-1, 4], 1, Concat, [1]] # cat backbone P3
      - [-1, 1, RepNCSPELAN4_high, [256]] # 15 (P3/8-small)

      - [-1, 1, Conv, [256, 3, 2]]
      - [[-1, 12], 1, Concat, [1]] # cat head P4
      - [-1, 1, RepNCSPELAN4_high, [512]] # 18 (P4/16-medium)

      - [-1, 1, Conv, [512, 3, 2]]
      - [[-1, 9], 1, Concat, [1]] # cat head P5
      - [-1, 1, RepNCSPELAN4_high, [1024]] # 21 (P5/32-large)

      - [[15, 18, 21], 1, Detect, [nc]] # Detect(P3, P4, P5)

5.2.2 ResNet50 version

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # RT-DETR-l object detection model with P3-P5 outputs. For details see https://docs.ultralytics.com/models/rtdetr

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n-cls.yaml' will call yolov8-cls.yaml with scale 'n'
      # [depth, width, max_channels]
      l: [1.00, 1.00, 1024]

    backbone:
      # [from, repeats, module, args]
      - [-1, 1, ConvNormLayer, [32, 3, 2, 1, 'relu']] # 0-P1
      - [-1, 1, ConvNormLayer, [32, 3, 1, 1, 'relu']] # 1
      - [-1, 1, ConvNormLayer, [64, 3, 1, 1, 'relu']] # 2
      - [-1, 1, nn.MaxPool2d, [3, 2, 1]] # 3-P2
      - [-1, 3, Blocks, [64, BottleNeck, 2, False]] # 4
      - [-1, 4, Blocks, [128, BottleNeck, 3, False]] # 5-P3
      - [-1, 6, Blocks, [256, BottleNeck, 4, False]] # 6-P4
      - [-1, 3, Blocks, [512, BottleNeck, 5, False]] # 7-P5

    head:
      - [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 8 input_proj.2
      - [-1, 1, AIFI, [1024, 8]] # 9
      - [-1, 1, Conv, [256, 1, 1]] # 10, Y5, lateral_convs.0

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 11
      - [6, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 12 input_proj.1
      - [[-2, -1], 1, Concat, [1]] # 13
      - [-1, 3, RepNCSPELAN4_high, [256]] # 14, fpn_blocks.0
      - [-1, 1, Conv, [256, 1, 1]] # 15, Y4, lateral_convs.1

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 16
      - [5, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 17 input_proj.0
      - [[-2, -1], 1, Concat, [1]] # 18 cat backbone P4
      - [-1, 3, RepNCSPELAN4_high, [256]] # X3 (19), fpn_blocks.1

      - [-1, 1, Conv, [256, 3, 2]] # 20, downsample_convs.0
      - [[-1, 15], 1, Concat, [1]] # 21 cat Y4
      - [-1, 3, RepNCSPELAN4_high, [256]] # F4 (22), pan_blocks.0

      - [-1, 1, Conv, [256, 3, 2]] # 23, downsample_convs.1
      - [[-1, 10], 1, Concat, [1]] # 24 cat Y5
      - [-1, 3, RepNCSPELAN4_high, [256]] # F5 (25), pan_blocks.1

      - [[19, 22, 25], 1, RTDETRDecoder, [nc, 256, 300, 4, 8, 6]] # Detect(P3, P4, P5)

5.2.3 HGNetv2 version

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # RT-DETR-l object detection model with P3-P5 outputs. For details see https://docs.ultralytics.com/models/rtdetr

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n-cls.yaml' will call yolov8-cls.yaml with scale 'n'
      # [depth, width, max_channels]
      l: [1.00, 1.00, 1024]

    backbone:
      # [from, repeats, module, args]
      - [-1, 1, HGStem, [32, 48]] # 0-P2/4
      - [-1, 6, HGBlock, [48, 128, 3]] # stage 1

      - [-1, 1, DWConv, [128, 3, 2, 1, False]] # 2-P3/8
      - [-1, 6, HGBlock, [96, 512, 3]] # stage 2

      - [-1, 1, DWConv, [512, 3, 2, 1, False]] # 4-P3/16
      - [-1, 6, HGBlock, [192, 1024, 5, True, False]] # cm, c2, k, light, shortcut
      - [-1, 6, HGBlock, [192, 1024, 5, True, True]]
      - [-1, 6, HGBlock, [192, 1024, 5, True, True]] # stage 3

      - [-1, 1, DWConv, [1024, 3, 2, 1, False]] # 8-P4/32
      - [-1, 6, HGBlock, [384, 2048, 5, True, False]] # stage 4

    head:
      - [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 10 input_proj.2
      - [-1, 1, AIFI, [1024, 8]]
      - [-1, 1, Conv, [256, 1, 1]] # 12, Y5, lateral_convs.0

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [7, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 14 input_proj.1
      - [[-2, -1], 1, Concat, [1]]
      - [-1, 3, RepNCSPELAN4_high, [256]] # 16, fpn_blocks.0
      - [-1, 1, Conv, [256, 1, 1]] # 17, Y4, lateral_convs.1

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
      - [3, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 19 input_proj.0
      - [[-2, -1], 1, Concat, [1]] # cat backbone P4
      - [-1, 3, RepNCSPELAN4_high, [256]] # X3 (21), fpn_blocks.1

      # DiverseBranchBlock is not defined in this article; use Conv in its place if it is not registered in your codebase
      - [-1, 1, DiverseBranchBlock, [384, 3, 2]] # 22, downsample_convs.0
      - [[-1, 17], 1, Concat, [1]] # cat Y4
      - [-1, 3, RepNCSPELAN4_high, [256]] # F4 (24), pan_blocks.0

      - [-1, 1, DiverseBranchBlock, [384, 3, 2]] # 25, downsample_convs.1
      - [[-1, 12], 1, Concat, [1]] # cat Y5
      - [-1, 3, RepNCSPELAN4_high, [256]] # F5 (27), pan_blocks.1

      - [[21, 24, 27], 1, RTDETRDecoder, [nc]] # Detect(P3, P4, P5)

5.3 Training Code

Create a py file, paste in the code below, set your own file paths, and run it.

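    import warnings
    warnings.filterwarnings('ignore')
    from ultralytics import RTDETR  # RT-DETR configs are loaded with RTDETR rather than YOLO

    if __name__ == '__main__':
        # Point this at one of the yaml files from Section 5
        model = RTDETR(r'replace with the path to your model yaml file')
        # model.load('yolov8n.pt') # loading pretrain weights
        model.train(data=r'replace with the path to your dataset yaml file',
                    # For other tasks, open 'ultralytics/cfg/default.yaml' and change task there to detect, segment, classify, or pose
                    cache=False,
                    imgsz=640,
                    epochs=150,
                    single_cls=False,  # whether this is single-class detection
                    batch=4,
                    close_mosaic=10,
                    workers=0,
                    device='0',
                    optimizer='SGD',  # using SGD
                    # resume='',  # to resume training, set this to the path of last.pt
                    amp=False,  # turn amp off if the training loss becomes NaN
                    project='runs/train',
                    name='exp',
                    )

5.4 GELAN Training Screenshots

5.4.1 Low-parameter version

5.4.2 High-parameter version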

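6. Summary

That concludes the main content of this article. I recommend my YOLOv8 effective-improvement column to you. The column is newly launched and currently has an average quality score of 98. Going forward I will reproduce papers from the latest top conferences and also supplement some of the older improvement mechanisms. If this article helped you, please subscribe to the column and follow along for more updates.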
