- 🍨 This post is a study log from the 🔗365-Day Deep Learning Training Camp

- 🍖 Original author: K同学啊 | tutoring and custom projects available

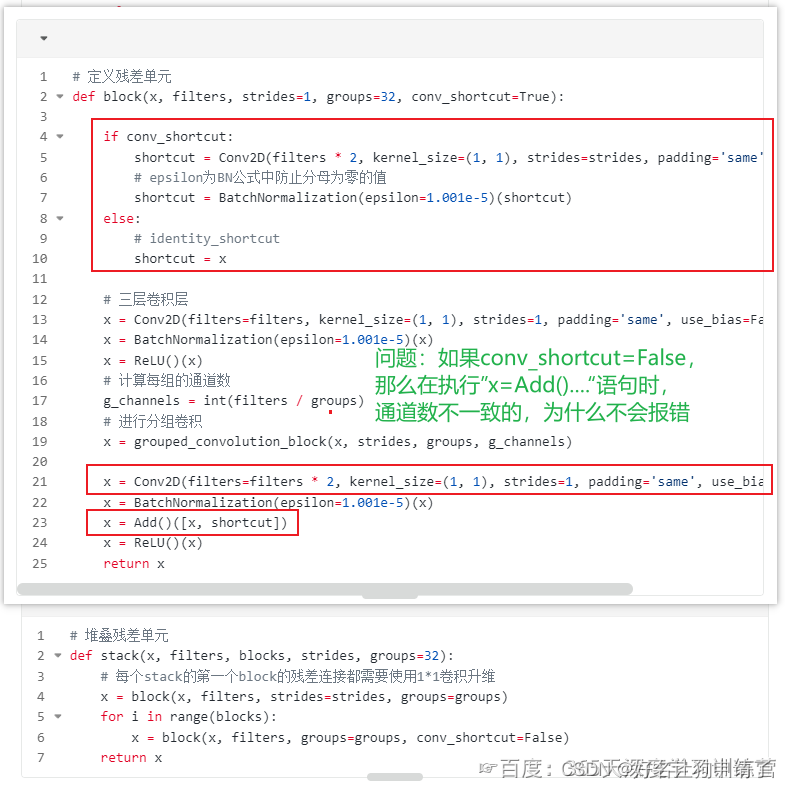

Week J6 contains the following piece of code:

Thought process

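- After reading the problem description, my first guess was that the broadcasting mechanism of tensor operations was at work.

- Next I needed a way to verify this, so I decided to simply run the J6 TensorFlow code.

- I printed information about every layer with `model.summary()` and processed the output into an easy-to-read form (removing the large number of layers generated by the grouped convolutions).

- The channel counts turned out to match, so broadcasting was not being used.

- A careful analysis of how the model is built then gave the explanation.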
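To make the broadcasting hypothesis concrete: NumPy, and TensorFlow's element-wise ops, which follow the same rules, can only broadcast two shapes if, comparing dimensions from the right, each pair of sizes is equal or one of them is 1. A quick check, using shapes taken from the summary below with the batch axis dropped (`can_broadcast` is a hypothetical helper, not part of the J6 code):

```python
import numpy as np


def can_broadcast(shape_a, shape_b):
    """Return True if NumPy can broadcast the two shapes together."""
    try:
        np.broadcast_shapes(shape_a, shape_b)  # available since NumPy 1.20
        return True
    except ValueError:
        return False


# A size-1 channel axis can be stretched to 256 channels.
print(can_broadcast((56, 56, 1), (56, 56, 256)))    # True
# 64 vs 256 channels: neither is 1, so broadcasting fails.
print(can_broadcast((56, 56, 64), (56, 56, 256)))   # False
# Identical shapes, which is what the Add layers actually receive.
print(can_broadcast((56, 56, 256), (56, 56, 256)))  # True
```

So broadcasting could only explain an Add between mismatched tensors if one side had a single channel; with 64 vs 256 channels the addition would simply fail.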

Verification

Raw output of `model.summary()` (it is too large, so only part of it is shown):

```text
Model: "model"
__________________________________________________________________________________________________
 Layer (type)                                  Output Shape            Param #    Connected to
==================================================================================================
 input_4 (InputLayer)                          [(None, 224, 224, 3)]   0          []
 zero_padding2d_6 (ZeroPadding2D)              (None, 230, 230, 3)     0          ['input_4[0][0]']
 conv2d_555 (Conv2D)                           (None, 112, 112, 64)    9472       ['zero_padding2d_6[0][0]']
 batch_normalization_59 (BatchNormalization)   (None, 112, 112, 64)    256        ['conv2d_555[0][0]']
 re_lu_53 (ReLU)                               (None, 112, 112, 64)    0          ['batch_normalization_59[0][0]']
 zero_padding2d_7 (ZeroPadding2D)              (None, 114, 114, 64)    0          ['re_lu_53[0][0]']
 max_pooling2d_3 (MaxPooling2D)                (None, 56, 56, 64)      0          ['zero_padding2d_7[0][0]']
 conv2d_557 (Conv2D)                           (None, 56, 56, 128)     8192       ['max_pooling2d_3[0][0]']
 batch_normalization_61 (BatchNormalization)   (None, 56, 56, 128)     512        ['conv2d_557[0][0]']
 re_lu_54 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_61[0][0]']
 lambda_514 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_515 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_516 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_517 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_518 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_519 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_520 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_521 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_522 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_523 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_524 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_525 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_526 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_527 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_528 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_529 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_530 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_531 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_532 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_533 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_534 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_535 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_536 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_537 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_538 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_539 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_540 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_541 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_542 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_543 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_544 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 lambda_545 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_54[0][0]']
 conv2d_558 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_514[0][0]']
 conv2d_559 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_515[0][0]']
 conv2d_560 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_516[0][0]']
 conv2d_561 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_517[0][0]']
 conv2d_562 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_518[0][0]']
 conv2d_563 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_519[0][0]']
 conv2d_564 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_520[0][0]']
 conv2d_565 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_521[0][0]']
 conv2d_566 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_522[0][0]']
 conv2d_567 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_523[0][0]']
 conv2d_568 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_524[0][0]']
 conv2d_569 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_525[0][0]']
 conv2d_570 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_526[0][0]']
 conv2d_571 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_527[0][0]']
 conv2d_572 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_528[0][0]']
 conv2d_573 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_529[0][0]']
 conv2d_574 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_530[0][0]']
 conv2d_575 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_531[0][0]']
 conv2d_576 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_532[0][0]']
 conv2d_577 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_533[0][0]']
 conv2d_578 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_534[0][0]']
 conv2d_579 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_535[0][0]']
 conv2d_580 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_536[0][0]']
 conv2d_581 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_537[0][0]']
 conv2d_582 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_538[0][0]']
 conv2d_583 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_539[0][0]']
 conv2d_584 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_540[0][0]']
 conv2d_585 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_541[0][0]']
 conv2d_586 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_542[0][0]']
 conv2d_587 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_543[0][0]']
 conv2d_588 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_544[0][0]']
 conv2d_589 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_545[0][0]']
 concatenate_16 (Concatenate)                  (None, 56, 56, 128)     0          ['conv2d_558[0][0]', 'conv2d_559[0][0]', 'conv2d_560[0][0]', 'conv2d_561[0][0]',
                                                                                  'conv2d_562[0][0]', 'conv2d_563[0][0]', 'conv2d_564[0][0]', 'conv2d_565[0][0]',
                                                                                  'conv2d_566[0][0]', 'conv2d_567[0][0]', 'conv2d_568[0][0]', 'conv2d_569[0][0]',
                                                                                  'conv2d_570[0][0]', 'conv2d_571[0][0]', 'conv2d_572[0][0]', 'conv2d_573[0][0]',
                                                                                  'conv2d_574[0][0]', 'conv2d_575[0][0]', 'conv2d_576[0][0]', 'conv2d_577[0][0]',
                                                                                  'conv2d_578[0][0]', 'conv2d_579[0][0]', 'conv2d_580[0][0]', 'conv2d_581[0][0]',
                                                                                  'conv2d_582[0][0]', 'conv2d_583[0][0]', 'conv2d_584[0][0]', 'conv2d_585[0][0]',
                                                                                  'conv2d_586[0][0]', 'conv2d_587[0][0]', 'conv2d_588[0][0]', 'conv2d_589[0][0]']
 batch_normalization_62 (BatchNormalization)   (None, 56, 56, 128)     512        ['concatenate_16[0][0]']
 re_lu_55 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_62[0][0]']
 conv2d_590 (Conv2D)                           (None, 56, 56, 256)     32768      ['re_lu_55[0][0]']
 conv2d_556 (Conv2D)                           (None, 56, 56, 256)     16384      ['max_pooling2d_3[0][0]']
 batch_normalization_63 (BatchNormalization)   (None, 56, 56, 256)     1024       ['conv2d_590[0][0]']
 batch_normalization_60 (BatchNormalization)   (None, 56, 56, 256)     1024       ['conv2d_556[0][0]']
 add_16 (Add)                                  (None, 56, 56, 256)     0          ['batch_normalization_63[0][0]', 'batch_normalization_60[0][0]']
 re_lu_56 (ReLU)                               (None, 56, 56, 256)     0          ['add_16[0][0]']
 conv2d_591 (Conv2D)                           (None, 56, 56, 128)     32768      ['re_lu_56[0][0]']
 batch_normalization_64 (BatchNormalization)   (None, 56, 56, 128)     512        ['conv2d_591[0][0]']
 re_lu_57 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_64[0][0]']
 lambda_546 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_547 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_548 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_549 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_550 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_551 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_552 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_553 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_554 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_555 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_556 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_557 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_558 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_559 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_560 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_561 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_562 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_563 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_564 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_565 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_566 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_567 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_568 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_569 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_570 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_571 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_572 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_573 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_574 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_575 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_576 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 lambda_577 (Lambda)                           (None, 56, 56, 4)       0          ['re_lu_57[0][0]']
 conv2d_592 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_546[0][0]']
 conv2d_593 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_547[0][0]']
 conv2d_594 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_548[0][0]']
 conv2d_595 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_549[0][0]']
 conv2d_596 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_550[0][0]']
 conv2d_597 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_551[0][0]']
 conv2d_598 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_552[0][0]']
 conv2d_599 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_553[0][0]']
 conv2d_600 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_554[0][0]']
 conv2d_601 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_555[0][0]']
 conv2d_602 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_556[0][0]']
 conv2d_603 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_557[0][0]']
 conv2d_604 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_558[0][0]']
 conv2d_605 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_559[0][0]']
 conv2d_606 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_560[0][0]']
 conv2d_607 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_561[0][0]']
 conv2d_608 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_562[0][0]']
 conv2d_609 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_563[0][0]']
 conv2d_610 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_564[0][0]']
 conv2d_611 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_565[0][0]']
 conv2d_612 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_566[0][0]']
 conv2d_613 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_567[0][0]']
 conv2d_614 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_568[0][0]']
 conv2d_615 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_569[0][0]']
 conv2d_616 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_570[0][0]']
 conv2d_617 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_571[0][0]']
 conv2d_618 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_572[0][0]']
 conv2d_619 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_573[0][0]']
 conv2d_620 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_574[0][0]']
 conv2d_621 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_575[0][0]']
 conv2d_622 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_576[0][0]']
 conv2d_623 (Conv2D)                           (None, 56, 56, 4)       144        ['lambda_577[0][0]']
 concatenate_17 (Concatenate)                  (None, 56, 56, 128)     0          ['conv2d_592[0][0]', 'conv2d_593[0][0]', 'conv2d_594[0][0]', 'conv2d_595[0][0]',
                                                                                  'conv2d_596[0][0]', 'conv2d_597[0][0]', 'conv2d_598[0][0]', 'conv2d_599[0][0]',
                                                                                  'conv2d_600[0][0]', 'conv2d_601[0][0]', 'conv2d_602[0][0]', 'conv2d_603[0][0]',
                                                                                  'conv2d_604[0][0]', 'conv2d_605[0][0]', 'conv2d_606[0][0]', 'conv2d_607[0][0]',
                                                                                  'conv2d_608[0][0]', 'conv2d_609[0][0]', 'conv2d_610[0][0]', 'conv2d_611[0][0]',
                                                                                  'conv2d_612[0][0]', 'conv2d_613[0][0]', 'conv2d_614[0][0]', 'conv2d_615[0][0]',
                                                                                  'conv2d_616[0][0]', 'conv2d_617[0][0]', 'conv2d_618[0][0]', 'conv2d_619[0][0]',
                                                                                  'conv2d_620[0][0]', 'conv2d_621[0][0]', 'conv2d_622[0][0]', 'conv2d_623[0][0]']
 batch_normalization_65 (BatchNormalization)   (None, 56, 56, 128)     512        ['concatenate_17[0][0]']
 re_lu_58 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_65[0][0]']
```

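The printed layers include a large number of Lambda layers. Comparing with the source code, the Lambda operations are part of the grouped convolution, so everything from this run of Lambda layers down to the Concatenate that follows can be treated as one grouped convolution: each of the 32 Lambda layers slices out 4 of the 128 channels, each Conv2D (144 = 3×3×4×4 parameters) convolves one slice, and Concatenate joins the 32 outputs back into 128 channels. A grouped convolution therefore does not change the number of channels; it only reduces the number of parameters.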
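The Lambda (slice) → Conv2D → Concatenate pattern can be reproduced in a few lines of NumPy. This is a sketch, not the J6 code: the helpers `conv2d_same` and `grouped_conv` are hypothetical names, and it assumes stride 1, "same" padding, an odd kernel size and no bias (the 144 parameters per Conv2D in the summary equal 3×3×4×4, i.e. a 3×3 kernel without bias). An 8×8 input replaces 56×56 to keep it fast.

```python
import numpy as np


def conv2d_same(x, kernel):
    """Stride-1 'same' 2-D convolution without bias (cross-correlation, as in Keras).

    x: feature map of shape (H, W, C_in)
    kernel: weights of shape (kh, kw, C_in, C_out), the layout Keras Conv2D uses;
    kh and kw are assumed to be odd.
    """
    kh, kw, _, c_out = kernel.shape
    h, w, _ = x.shape
    ph, pw = kh // 2, kw // 2
    # Zero-pad height and width so the output keeps the input's spatial size.
    xp = np.pad(x, ((ph, ph), (pw, pw), (0, 0)))
    out = np.zeros((h, w, c_out))
    for i in range(kh):
        for j in range(kw):
            # Add the contribution of kernel tap (i, j) at every output position.
            out += np.einsum('hwc,cd->hwd', xp[i:i + h, j:j + w, :], kernel[i, j])
    return out


def grouped_conv(x, kernels):
    """Slice the channels into len(kernels) equal groups, convolve each, concatenate."""
    per_group = x.shape[-1] // len(kernels)
    outputs = [
        conv2d_same(x[..., idx * per_group:(idx + 1) * per_group], kern)  # "Lambda" + "Conv2D"
        for idx, kern in enumerate(kernels)
    ]
    return np.concatenate(outputs, axis=-1)  # "Concatenate"


rng = np.random.default_rng(0)
channels, groups, ksize = 128, 32, 3
per_group = channels // groups                       # 4 channels per group
x = rng.standard_normal((8, 8, channels))
kernels = [rng.standard_normal((ksize, ksize, per_group, per_group)) for _ in range(groups)]

y = grouped_conv(x, kernels)
print(y.shape)                               # (8, 8, 128): channel count unchanged
print(kernels[0].size)                       # 144 weights per group, as in the summary
print(sum(k.size for k in kernels))          # 4608 weights in total
print(ksize * ksize * channels * channels)   # 147456 for an ordinary 3x3 conv
```

Equivalently, a grouped convolution is an ordinary convolution whose kernel is block-diagonal across channels; with 32 groups that leaves 1/32 of the weights (4608 vs 147456) while the output still has all 128 channels.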

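```python
# Write the summary output to a file, then use this Python script to strip out the lambda rows

# Open the file (the with block closes it automatically)
with open('summary', encoding='utf-8') as f:
    # Read the whole content
    content = f.read()

# Split into lines
lines = content.split('\n')
clean_lines = []

# Filter
for line in lines:
    # Skip blank lines
    if len(line.strip()) == 0:
        continue
    # Lines whose leading plus trailing whitespace adds up to 78 or 79 characters
    # are the wrapped "Connected to" entries of the concatenate layers; drop them.
    # (These numbers fit the column layout of this particular summary.)
    if len(line) - len(line.strip()) in (78, 79):
        continue
    # Drop the Lambda layers that slice channels for the grouped convolution
    if 'lambda' in line:
        continue
    clean_lines.append(line)

for line in clean_lines:
    print(line)
```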
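The script above reads the summary from a file named `summary`, but the post does not show how that file was produced. One possible way, offered as an assumption rather than what was necessarily done here, is the `print_fn` argument of `model.summary()`; `save_summary` is a hypothetical helper name.

```python
def save_summary(model, path='summary'):
    """Write model.summary() to a text file so the filter script can read it.

    Assumes tf.keras 2.x, where summary() calls print_fn once per output line.
    **kwargs swallows extra keyword arguments (e.g. line_break) that some
    Keras versions pass to print_fn.
    """
    with open(path, 'w', encoding='utf-8') as f:
        model.summary(print_fn=lambda line, **kwargs: f.write(line + '\n'))


# Usage, with `model` being the J6 model built earlier:
# save_summary(model)   # writes ./summary, then run the filter script
```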

The processed model structure is as follows:

```text
Model: "model"
__________________________________________________________________________________________________
 Layer (type)                                  Output Shape            Param #    Connected to
==================================================================================================
 input_4 (InputLayer)                          [(None, 224, 224, 3)]   0          []
 zero_padding2d_6 (ZeroPadding2D)              (None, 230, 230, 3)     0          ['input_4[0][0]']
 conv2d_555 (Conv2D)                           (None, 112, 112, 64)    9472       ['zero_padding2d_6[0][0]']
 batch_normalization_59 (BatchNormalization)   (None, 112, 112, 64)    256        ['conv2d_555[0][0]']
 re_lu_53 (ReLU)                               (None, 112, 112, 64)    0          ['batch_normalization_59[0][0]']
 zero_padding2d_7 (ZeroPadding2D)              (None, 114, 114, 64)    0          ['re_lu_53[0][0]']
 max_pooling2d_3 (MaxPooling2D)                (None, 56, 56, 64)      0          ['zero_padding2d_7[0][0]']
 conv2d_557 (Conv2D)                           (None, 56, 56, 128)     8192       ['max_pooling2d_3[0][0]']
 batch_normalization_61 (BatchNormalization)   (None, 56, 56, 128)     512        ['conv2d_557[0][0]']
 re_lu_54 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_61[0][0]']
 concatenate_16 (Concatenate)                  (None, 56, 56, 128)     0          ['conv2d_558[0][0]', 'conv2d_559[0][0]', ...
 batch_normalization_62 (BatchNormalization)   (None, 56, 56, 128)     512        ['concatenate_16[0][0]']
 re_lu_55 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_62[0][0]']
 conv2d_590 (Conv2D)                           (None, 56, 56, 256)     32768      ['re_lu_55[0][0]']
 conv2d_556 (Conv2D)                           (None, 56, 56, 256)     16384      ['max_pooling2d_3[0][0]']
 batch_normalization_63 (BatchNormalization)   (None, 56, 56, 256)     1024       ['conv2d_590[0][0]']
 batch_normalization_60 (BatchNormalization)   (None, 56, 56, 256)     1024       ['conv2d_556[0][0]']
 add_16 (Add)                                  (None, 56, 56, 256)     0          ['batch_normalization_63[0][0]', 'batch_normalization_60[0][0]']
 re_lu_56 (ReLU)                               (None, 56, 56, 256)     0          ['add_16[0][0]']
 conv2d_591 (Conv2D)                           (None, 56, 56, 128)     32768      ['re_lu_56[0][0]']
 batch_normalization_64 (BatchNormalization)   (None, 56, 56, 128)     512        ['conv2d_591[0][0]']
 re_lu_57 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_64[0][0]']
 concatenate_17 (Concatenate)                  (None, 56, 56, 128)     0          ['conv2d_592[0][0]', 'conv2d_593[0][0]', ...
 batch_normalization_65 (BatchNormalization)   (None, 56, 56, 128)     512        ['concatenate_17[0][0]']
 re_lu_58 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_65[0][0]']
 conv2d_624 (Conv2D)                           (None, 56, 56, 256)     32768      ['re_lu_58[0][0]']
 batch_normalization_66 (BatchNormalization)   (None, 56, 56, 256)     1024       ['conv2d_624[0][0]']
 add_17 (Add)                                  (None, 56, 56, 256)     0          ['batch_normalization_66[0][0]', 're_lu_56[0][0]']
 re_lu_59 (ReLU)                               (None, 56, 56, 256)     0          ['add_17[0][0]']
 conv2d_625 (Conv2D)                           (None, 56, 56, 128)     32768      ['re_lu_59[0][0]']
 batch_normalization_67 (BatchNormalization)   (None, 56, 56, 128)     512        ['conv2d_625[0][0]']
 re_lu_60 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_67[0][0]']
 concatenate_18 (Concatenate)                  (None, 56, 56, 128)     0          ['conv2d_626[0][0]', 'conv2d_627[0][0]', ...
 batch_normalization_68 (BatchNormalization)   (None, 56, 56, 128)     512        ['concatenate_18[0][0]']
 re_lu_61 (ReLU)                               (None, 56, 56, 128)     0          ['batch_normalization_68[0][0]']
 conv2d_658 (Conv2D)                           (None, 56, 56, 256)     32768      ['re_lu_61[0][0]']
 batch_normalization_69 (BatchNormalization)   (None, 56, 56, 256)     1024       ['conv2d_658[0][0]']
 add_18 (Add)                                  (None, 56, 56, 256)     0          ['batch_normalization_69[0][0]', 're_lu_59[0][0]']
 re_lu_62 (ReLU)                               (None, 56, 56, 256)     0          ['add_18[0][0]']
 conv2d_660 (Conv2D)                           (None, 56, 56, 256)     65536      ['re_lu_62[0][0]']
 batch_normalization_71 (BatchNormalization)   (None, 56, 56, 256)     1024       ['conv2d_660[0][0]']
 re_lu_63 (ReLU)                               (None, 56, 56, 256)     0          ['batch_normalization_71[0][0]']
 concatenate_19 (Concatenate)                  (None, 28, 28, 256)     0          ['conv2d_661[0][0]', 'conv2d_662[0][0]', ...
 batch_normalization_72 (BatchNormalization)   (None, 28, 28, 256)     1024       ['concatenate_19[0][0]']
 re_lu_64 (ReLU)                               (None, 28, 28, 256)     0          ['batch_normalization_72[0][0]']
 conv2d_693 (Conv2D)                           (None, 28, 28, 512)     131072     ['re_lu_64[0][0]']
 conv2d_659 (Conv2D)                           (None, 28, 28, 512)     131072     ['re_lu_62[0][0]']
 batch_normalization_73 (BatchNormalization)   (None, 28, 28, 512)     2048       ['conv2d_693[0][0]']
 batch_normalization_70 (BatchNormalization)   (None, 28, 28, 512)     2048       ['conv2d_659[0][0]']
 add_19 (Add)                                  (None, 28, 28, 512)     0          ['batch_normalization_73[0][0]', 'batch_normalization_70[0][0]']
 re_lu_65 (ReLU)                               (None, 28, 28, 512)     0          ['add_19[0][0]']
 conv2d_694 (Conv2D)                           (None, 28, 28, 256)     131072     ['re_lu_65[0][0]']
 batch_normalization_74 (BatchNormalization)   (None, 28, 28, 256)     1024       ['conv2d_694[0][0]']
 re_lu_66 (ReLU)                               (None, 28, 28, 256)     0          ['batch_normalization_74[0][0]']
 concatenate_20 (Concatenate)                  (None, 28, 28, 256)     0          ['conv2d_695[0][0]', 'conv2d_696[0][0]', ...
 batch_normalization_75 (BatchNormalization)   (None, 28, 28, 256)     1024       ['concatenate_20[0][0]']
 re_lu_67 (ReLU)                               (None, 28, 28, 256)     0          ['batch_normalization_75[0][0]']
 conv2d_727 (Conv2D)                           (None, 28, 28, 512)     131072     ['re_lu_67[0][0]']
 batch_normalization_76 (BatchNormalization)   (None, 28, 28, 512)     2048       ['conv2d_727[0][0]']
 add_20 (Add)                                  (None, 28, 28, 512)     0          ['batch_normalization_76[0][0]', 're_lu_65[0][0]']
 re_lu_68 (ReLU)                               (None, 28, 28, 512)     0          ['add_20[0][0]']
 conv2d_728 (Conv2D)                           (None, 28, 28, 256)     131072     ['re_lu_68[0][0]']
 batch_normalization_77 (BatchNormalization)   (None, 28, 28, 256)     1024       ['conv2d_728[0][0]']
 re_lu_69 (ReLU)                               (None, 28, 28, 256)     0          ['batch_normalization_77[0][0]']
 concatenate_21 (Concatenate)                  (None, 28, 28, 256)     0          ['conv2d_729[0][0]', 'conv2d_730[0][0]', ...
 batch_normalization_78 (BatchNormalization)   (None, 28, 28, 256)     1024       ['concatenate_21[0][0]']
 re_lu_70 (ReLU)                               (None, 28, 28, 256)     0          ['batch_normalization_78[0][0]']
 conv2d_761 (Conv2D)                           (None, 28, 28, 512)     131072     ['re_lu_70[0][0]']
 batch_normalization_79 (BatchNormalization)   (None, 28, 28, 512)     2048       ['conv2d_761[0][0]']
 add_21 (Add)                                  (None, 28, 28, 512)     0          ['batch_normalization_79[0][0]', 're_lu_68[0][0]']
 re_lu_71 (ReLU)                               (None, 28, 28, 512)     0          ['add_21[0][0]']
 conv2d_762 (Conv2D)                           (None, 28, 28, 256)     131072     ['re_lu_71[0][0]']
 batch_normalization_80 (BatchNormalization)   (None, 28, 28, 256)     1024       ['conv2d_762[0][0]']
 re_lu_72 (ReLU)                               (None, 28, 28, 256)     0          ['batch_normalization_80[0][0]']
 concatenate_22 (Concatenate)                  (None, 28, 28, 256)     0          ['conv2d_763[0][0]', 'conv2d_764[0][0]', ...
 batch_normalization_81 (BatchNormalization)   (None, 28, 28, 256)     1024       ['concatenate_22[0][0]']
 re_lu_73 (ReLU)                               (None, 28, 28, 256)     0          ['batch_normalization_81[0][0]']
 conv2d_795 (Conv2D)                           (None, 28, 28, 512)     131072     ['re_lu_73[0][0]']
 batch_normalization_82 (BatchNormalization)   (None, 28, 28, 512)     2048       ['conv2d_795[0][0]']
 add_22 (Add)                                  (None, 28, 28, 512)     0          ['batch_normalization_82[0][0]', 're_lu_71[0][0]']
 re_lu_74 (ReLU)                               (None, 28, 28, 512)     0          ['add_22[0][0]']
 conv2d_797 (Conv2D)                           (None, 28, 28, 512)     262144     ['re_lu_74[0][0]']
 batch_normalization_84 (BatchNormalization)   (None, 28, 28, 512)     2048       ['conv2d_797[0][0]']
 re_lu_75 (ReLU)                               (None, 28, 28, 512)     0          ['batch_normalization_84[0][0]']
 concatenate_23 (Concatenate)                  (None, 14, 14, 512)     0          ['conv2d_798[0][0]', 'conv2d_799[0][0]', ...
 batch_normalization_85 (BatchNormalization)   (None, 14, 14, 512)     2048       ['concatenate_23[0][0]']
 re_lu_76 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_85[0][0]']
 conv2d_830 (Conv2D)                           (None, 14, 14, 1024)    524288     ['re_lu_76[0][0]']
 conv2d_796 (Conv2D)                           (None, 14, 14, 1024)    524288     ['re_lu_74[0][0]']
 batch_normalization_86 (BatchNormalization)   (None, 14, 14, 1024)    4096       ['conv2d_830[0][0]']
 batch_normalization_83 (BatchNormalization)   (None, 14, 14, 1024)    4096       ['conv2d_796[0][0]']
 add_23 (Add)                                  (None, 14, 14, 1024)    0          ['batch_normalization_86[0][0]', 'batch_normalization_83[0][0]']
 re_lu_77 (ReLU)                               (None, 14, 14, 1024)    0          ['add_23[0][0]']
 conv2d_831 (Conv2D)                           (None, 14, 14, 512)     524288     ['re_lu_77[0][0]']
 batch_normalization_87 (BatchNormalization)   (None, 14, 14, 512)     2048       ['conv2d_831[0][0]']
 re_lu_78 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_87[0][0]']
 concatenate_24 (Concatenate)                  (None, 14, 14, 512)     0          ['conv2d_832[0][0]', 'conv2d_833[0][0]', ...
 batch_normalization_88 (BatchNormalization)   (None, 14, 14, 512)     2048       ['concatenate_24[0][0]']
 re_lu_79 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_88[0][0]']
 conv2d_864 (Conv2D)                           (None, 14, 14, 1024)    524288     ['re_lu_79[0][0]']
 batch_normalization_89 (BatchNormalization)   (None, 14, 14, 1024)    4096       ['conv2d_864[0][0]']
 add_24 (Add)                                  (None, 14, 14, 1024)    0          ['batch_normalization_89[0][0]', 're_lu_77[0][0]']
 re_lu_80 (ReLU)                               (None, 14, 14, 1024)    0          ['add_24[0][0]']
 conv2d_865 (Conv2D)                           (None, 14, 14, 512)     524288     ['re_lu_80[0][0]']
 batch_normalization_90 (BatchNormalization)   (None, 14, 14, 512)     2048       ['conv2d_865[0][0]']
 re_lu_81 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_90[0][0]']
 concatenate_25 (Concatenate)                  (None, 14, 14, 512)     0          ['conv2d_866[0][0]', 'conv2d_867[0][0]', ...
 batch_normalization_91 (BatchNormalization)   (None, 14, 14, 512)     2048       ['concatenate_25[0][0]']
 re_lu_82 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_91[0][0]']
 conv2d_898 (Conv2D)                           (None, 14, 14, 1024)    524288     ['re_lu_82[0][0]']
 batch_normalization_92 (BatchNormalization)   (None, 14, 14, 1024)    4096       ['conv2d_898[0][0]']
 add_25 (Add)                                  (None, 14, 14, 1024)    0          ['batch_normalization_92[0][0]', 're_lu_80[0][0]']
 re_lu_83 (ReLU)                               (None, 14, 14, 1024)    0          ['add_25[0][0]']
 conv2d_899 (Conv2D)                           (None, 14, 14, 512)     524288     ['re_lu_83[0][0]']
 batch_normalization_93 (BatchNormalization)   (None, 14, 14, 512)     2048       ['conv2d_899[0][0]']
 re_lu_84 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_93[0][0]']
 concatenate_26 (Concatenate)                  (None, 14, 14, 512)     0          ['conv2d_900[0][0]', 'conv2d_901[0][0]', ...
 batch_normalization_94 (BatchNormalization)   (None, 14, 14, 512)     2048       ['concatenate_26[0][0]']
 re_lu_85 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_94[0][0]']
 conv2d_932 (Conv2D)                           (None, 14, 14, 1024)    524288     ['re_lu_85[0][0]']
 batch_normalization_95 (BatchNormalization)   (None, 14, 14, 1024)    4096       ['conv2d_932[0][0]']
 add_26 (Add)                                  (None, 14, 14, 1024)    0          ['batch_normalization_95[0][0]', 're_lu_83[0][0]']
 re_lu_86 (ReLU)                               (None, 14, 14, 1024)    0          ['add_26[0][0]']
 conv2d_933 (Conv2D)                           (None, 14, 14, 512)     524288     ['re_lu_86[0][0]']
 batch_normalization_96 (BatchNormalization)   (None, 14, 14, 512)     2048       ['conv2d_933[0][0]']
 re_lu_87 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_96[0][0]']
 concatenate_27 (Concatenate)                  (None, 14, 14, 512)     0          ['conv2d_934[0][0]', 'conv2d_935[0][0]', ...
 batch_normalization_97 (BatchNormalization)   (None, 14, 14, 512)     2048       ['concatenate_27[0][0]']
 re_lu_88 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_97[0][0]']
 conv2d_966 (Conv2D)                           (None, 14, 14, 1024)    524288     ['re_lu_88[0][0]']
 batch_normalization_98 (BatchNormalization)   (None, 14, 14, 1024)    4096       ['conv2d_966[0][0]']
 add_27 (Add)                                  (None, 14, 14, 1024)    0          ['batch_normalization_98[0][0]', 're_lu_86[0][0]']
 re_lu_89 (ReLU)                               (None, 14, 14, 1024)    0          ['add_27[0][0]']
 conv2d_967 (Conv2D)                           (None, 14, 14, 512)     524288     ['re_lu_89[0][0]']
 batch_normalization_99 (BatchNormalization)   (None, 14, 14, 512)     2048       ['conv2d_967[0][0]']
 re_lu_90 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_99[0][0]']
 concatenate_28 (Concatenate)                  (None, 14, 14, 512)     0          ['conv2d_968[0][0]', 'conv2d_969[0][0]', ...
 batch_normalization_100 (BatchNormalization)  (None, 14, 14, 512)     2048       ['concatenate_28[0][0]']
 re_lu_91 (ReLU)                               (None, 14, 14, 512)     0          ['batch_normalization_100[0][0]']
 conv2d_1000 (Conv2D)                          (None, 14, 14, 1024)    524288     ['re_lu_91[0][0]']
 batch_normalization_101 (BatchNormalization)  (None, 14, 14, 1024)    4096       ['conv2d_1000[0][0]']
 add_28 (Add)                                  (None, 14, 14, 1024)    0          ['batch_normalization_101[0][0]', 're_lu_89[0][0]']
 re_lu_92 (ReLU)                               (None, 14, 14, 1024)    0          ['add_28[0][0]']
 conv2d_1002 (Conv2D)                          (None, 14, 14, 1024)    1048576    ['re_lu_92[0][0]']
 batch_normalization_103 (BatchNormalization)  (None, 14, 14, 1024)    4096       ['conv2d_1002[0][0]']
 re_lu_93 (ReLU)                               (None, 14, 14, 1024)    0          ['batch_normalization_103[0][0]']
 concatenate_29 (Concatenate)                  (None, 7, 7, 1024)      0          ['conv2d_1003[0][0]', 'conv2d_1004[0][0]', ...
 batch_normalization_104 (BatchNormalization)  (None, 7, 7, 1024)      4096       ['concatenate_29[0][0]']
 re_lu_94 (ReLU)                               (None, 7, 7, 1024)      0          ['batch_normalization_104[0][0]']
 conv2d_1035 (Conv2D)                          (None, 7, 7, 2048)      2097152    ['re_lu_94[0][0]']
 conv2d_1001 (Conv2D)                          (None, 7, 7, 2048)      2097152    ['re_lu_92[0][0]']
 batch_normalization_105 (BatchNormalization)  (None, 7, 7, 2048)      8192       ['conv2d_1035[0][0]']
 batch_normalization_102 (BatchNormalization)  (None, 7, 7, 2048)      8192       ['conv2d_1001[0][0]']
 add_29 (Add)                                  (None, 7, 7, 2048)      0          ['batch_normalization_105[0][0]', 'batch_normalization_102[0][0]']
 re_lu_95 (ReLU)                               (None, 7, 7, 2048)      0          ['add_29[0][0]']
 conv2d_1036 (Conv2D)                          (None, 7, 7, 1024)      2097152    ['re_lu_95[0][0]']
 batch_normalization_106 (BatchNormalization)  (None, 7, 7, 1024)      4096       ['conv2d_1036[0][0]']
 re_lu_96 (ReLU)                               (None, 7, 7, 1024)      0          ['batch_normalization_106[0][0]']
 concatenate_30 (Concatenate)                  (None, 7, 7, 1024)      0          ['conv2d_1037[0][0]', 'conv2d_1038[0][0]', ...
 batch_normalization_107 (BatchNormalization)  (None, 7, 7, 1024)      4096       ['concatenate_30[0][0]']
 re_lu_97 (ReLU)                               (None, 7, 7, 1024)      0          ['batch_normalization_107[0][0]']
 conv2d_1069 (Conv2D)                          (None, 7, 7, 2048)      2097152    ['re_lu_97[0][0]']
 batch_normalization_108 (BatchNormalization)  (None, 7, 7, 2048)      8192       ['conv2d_1069[0][0]']
 add_30 (Add)                                  (None, 7, 7, 2048)      0          ['batch_normalization_108[0][0]', 're_lu_95[0][0]']
 re_lu_98 (ReLU)                               (None, 7, 7, 2048)      0          ['add_30[0][0]']
 conv2d_1070 (Conv2D)                          (None, 7, 7, 1024)      2097152    ['re_lu_98[0][0]']
 batch_normalization_109 (BatchNormalization)  (None, 7, 7, 1024)      4096       ['conv2d_1070[0][0]']
 re_lu_99 (ReLU)                               (None, 7, 7, 1024)      0          ['batch_normalization_109[0][0]']
 concatenate_31 (Concatenate)                  (None, 7, 7, 1024)      0          ['conv2d_1071[0][0]', 'conv2d_1072[0][0]', ...
 batch_normalization_110 (BatchNormalization)  (None, 7, 7, 1024)      4096       ['concatenate_31[0][0]']
 re_lu_100 (ReLU)                              (None, 7, 7, 1024)      0          ['batch_normalization_110[0][0]']
 conv2d_1103 (Conv2D)                          (None, 7, 7, 2048)      2097152    ['re_lu_100[0][0]']
 batch_normalization_111 (BatchNormalization)  (None, 7, 7, 2048)      8192       ['conv2d_1103[0][0]']
 add_31 (Add)                                  (None, 7, 7, 2048)      0          ['batch_normalization_111[0][0]', 're_lu_98[0][0]']
 re_lu_101 (ReLU)                              (None, 7, 7, 2048)      0          ['add_31[0][0]']
 global_average_pooling2d_1 (GlobalAveragePooling2D)  (None, 2048)     0          ['re_lu_101[0][0]']
 dense_1 (Dense)                               (None, 1000)            2049000    ['global_average_pooling2d_1[0][0]']
```

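Looking at the Connected to column of every Add layer, the two inputs always have the same shape; there is no mismatch anywhere. So, contrary to what I expected, no broadcasting is involved. A closer look at how the model is built explains why: in the first block of each stack, the number of channels of x differs from the block's output channels (filters*2), and adding them directly would raise an error. The first block therefore sends the shortcut through a convolution that outputs filters*2 channels (for example conv2d_556, which maps the 64 channels of max_pooling2d_3 to 256). Because filters stays the same for the remaining blocks of the stack, every block outputs filters*2 channels, so from the second block on x can be added directly.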
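The argument above can be checked by following only the channel counts through the four stacks. This is a pure-Python sketch that ignores spatial size and stride; the 64 input channels (max_pooling2d_3) and the (filters, blocks) pairs (128, 3), (256, 4), (512, 6), (1024, 3) are read off the summary above (add_16 to add_31), while `trace_channels` and its `projection_in_first` flag are hypothetical names, not taken from the J6 code.

```python
def trace_channels(in_channels, stages, projection_in_first=True):
    """Follow the channel count through ResNeXt-style stacks.

    stages: list of (filters, num_blocks). Each block's residual branch ends with
    a 1x1 conv to filters * 2 channels. The shortcut is a 1x1 projection to
    filters * 2 in the first block of a stack (if enabled), otherwise the block
    input itself. Raises ValueError when the two Add inputs differ in channels.
    """
    channels = in_channels
    log = []
    for filters, num_blocks in stages:
        for block in range(num_blocks):
            branch = filters * 2
            projected = projection_in_first and block == 0
            shortcut = filters * 2 if projected else channels
            if branch != shortcut:
                raise ValueError(f'Add mismatch: {branch} vs {shortcut} channels')
            log.append((filters, block, 'projection' if projected else 'identity', branch))
            channels = branch
    return channels, log


stages = [(128, 3), (256, 4), (512, 6), (1024, 3)]
out_channels, log = trace_channels(64, stages)
print(out_channels)   # 2048, matching re_lu_101
for row in log[:4]:
    print(row)
# (128, 0, 'projection', 256)
# (128, 1, 'identity', 256)
# (128, 2, 'identity', 256)
# (256, 0, 'projection', 512)

try:
    trace_channels(64, stages, projection_in_first=False)
except ValueError as err:
    print(err)        # Add mismatch: 256 vs 64 channels
```

Without the projection in the first block, the very first Add would have to combine 256 and 64 channels, which, as the broadcasting rules show, cannot work.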

![image 2](https://img-blog.csdnimg.cn/direct/0f062c324a884b9da3c404838c16563a.png)