背景介绍

我微博玩的晚,同学里面加上好友的也就40不到,为了把那些隐藏的好友揪出来。用scrapy写一个爬虫试一试。

思路

微博上面关注和粉丝都是公开的数据,可以用爬虫获取到的。而一个好友圈子里面的人,相互粉的比例也会比较大。这就是找到隐藏的好友的一个切入点。于是思路如下:

- 从自己的账号入手,先抓取自己关注的人和自己的粉丝(0级好友)

- 从第一批抓的数据开始,继续爬取0级好友的关注人和粉丝

- 在爬取的数据中分析他们的网络关系,找到可能是自己好友的人

遇到的问题

- 爬取的数据量需要控制,每级迭代,用户的数量都是爆炸式的增长,需要进行一定筛选

- 爬取的速度的限制,我的破电脑实测爬取速度大概在17page/min

- 小心大V账号的陷阱,在爬取人物关系的时候,一定要把大V的账号剔除掉!,粉丝页每页只能显示10人左右,有的大V一个人就百万粉丝,爬虫就卡在这一个号里面出不来了。

分析了这些,下面就开始搞了

具体实现

非常幸运地在网上别人搞好的一个爬取微博数据的框架,可以拿来改一改,这个框架是包含爬取微博内容,评论,用户信息,用户关系的。微博内容和评论我是不需要的,我就拿来改成只爬取用户信息和关系的就可以了。

我的主要改动如下:

- 删除爬取评论和微博的部分

- 将爬取的起始id变成从json文件读取(为了动态指定爬取范围)

- 新增加一个只爬取用户信息的爬虫,提高效率(原有爬虫专门爬取用户关系)

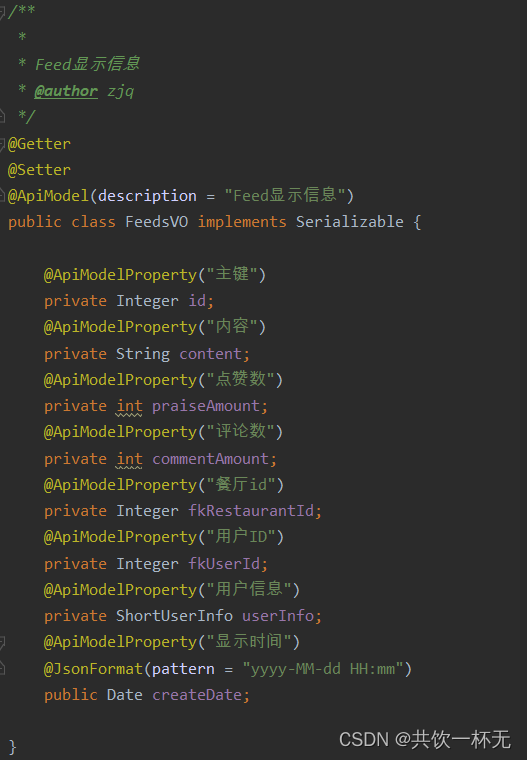

整个项目结构是:用scrapy作为爬虫的框架,有两个爬虫,weibo_spider.py用来爬取用户关系;weibo_userinfo用来爬取用户资料。爬取的数据存在mongodb中。另外有一个程序spider_control.py专门指定爬取的id的范围,将待爬取的id存在start_uids.json文件中,两个爬虫文件从这个json文件指定爬取的范围。

爬取用户关系的weibo_spider.py代码如下:

#!/usr/bin/env python

# # encoding: utf-8

import re

from lxml import etree

from scrapy import Spider

from scrapy.crawler import CrawlerProcess

from scrapy.selector import Selector

from scrapy.http import Request

from scrapy.utils.project import get_project_settings

from sina.items import TweetsItem, InformationItem, RelationshipsItem, CommentItem

from sina.spiders.utils import time_fix, extract_weibo_content, extract_comment_content

import time

import jsonclass WeiboSpider(Spider):name = "weibo_spider"base_url = "https://weibo.cn"def start_requests(self):with open('start_uids.json','r') as f:data = json.load(f)start_uids = data["start_uids"]for uid in start_uids:yield Request(url="https://weibo.cn/%s/info" % uid, callback=self.parse_information)def parse_information(self, response):""" 抓取个人信息 """information_item = InformationItem()information_item['crawl_time'] = int(time.time())selector = Selector(response)information_item['_id'] = re.findall('(\d+)/info', response.url)[0]text1 = ";".join(selector.xpath('body/div[@class="c"]//text()').extract()) # 获取标签里的所有text()nick_name = re.findall('昵称;?[::]?(.*?);', text1)gender = re.findall('性别;?[::]?(.*?);', text1)place = re.findall('地区;?[::]?(.*?);', text1)briefIntroduction = re.findall('简介;?[::]?(.*?);', text1)birthday = re.findall('生日;?[::]?(.*?);', text1)sex_orientation = re.findall('性取向;?[::]?(.*?);', text1)sentiment = re.findall('感情状况;?[::]?(.*?);', text1)vip_level = re.findall('会员等级;?[::]?(.*?);', text1)authentication = re.findall('认证;?[::]?(.*?);', text1)labels = re.findall('标签;?[::]?(.*?)更多>>', text1)if nick_name and nick_name[0]:information_item["nick_name"] = nick_name[0].replace(u"\xa0", "")if gender and gender[0]:information_item["gender"] = gender[0].replace(u"\xa0", "")if place and place[0]:place = place[0].replace(u"\xa0", "").split(" ")information_item["province"] = place[0]if len(place) > 1:information_item["city"] = place[1]if briefIntroduction and briefIntroduction[0]:information_item["brief_introduction"] = briefIntroduction[0].replace(u"\xa0", "")if birthday and birthday[0]:information_item['birthday'] = birthday[0]if sex_orientation and sex_orientation[0]:if sex_orientation[0].replace(u"\xa0", "") == gender[0]:information_item["sex_orientation"] = "同性恋"else:information_item["sex_orientation"] = "异性恋"if sentiment and sentiment[0]:information_item["sentiment"] = sentiment[0].replace(u"\xa0", "")if vip_level and vip_level[0]:information_item["vip_level"] = vip_level[0].replace(u"\xa0", "")if authentication and authentication[0]:information_item["authentication"] = authentication[0].replace(u"\xa0", "")if labels and labels[0]:information_item["labels"] = labels[0].replace(u"\xa0", ",").replace(';', '').strip(',')request_meta = response.metarequest_meta['item'] = information_itemyield Request(self.base_url + '/u/{}'.format(information_item['_id']),callback=self.parse_further_information,meta=request_meta, dont_filter=True, priority=1)def parse_further_information(self, response):text = response.textinformation_item = response.meta['item']tweets_num = re.findall('微博\[(\d+)\]', text)if tweets_num:information_item['tweets_num'] = int(tweets_num[0])follows_num = re.findall('关注\[(\d+)\]', text)if follows_num:information_item['follows_num'] = int(follows_num[0])fans_num = re.findall('粉丝\[(\d+)\]', text)if fans_num:information_item['fans_num'] = int(fans_num[0])request_meta = response.metarequest_meta['item'] = information_itemyield information_item# 获取关注列表yield Request(url=self.base_url + '/{}/follow?page=1'.format(information_item['_id']),callback=self.parse_follow,meta=request_meta,dont_filter=True)# 获取粉丝列表yield Request(url=self.base_url + '/{}/fans?page=1'.format(information_item['_id']),callback=self.parse_fans,meta=request_meta,dont_filter=True)def parse_follow(self, response):"""抓取关注列表"""# 如果是第1页,一次性获取后面的所有页if response.url.endswith('page=1'):all_page = re.search(r'/> 1/(\d+)页</div>', response.text)if all_page:all_page = all_page.group(1)all_page = int(all_page)for page_num in range(2, all_page + 1):page_url = response.url.replace('page=1', 'page={}'.format(page_num))yield Request(page_url, self.parse_follow, dont_filter=True, meta=response.meta)selector = Selector(response)id_username_pair = re.findall('<a href="https://weibo.cn/u/(\d+)">(.{0,30})</a>',response.text)# urls = selector.xpath('//a[text()="关注他" or text()="关注她" or text()="取消关注"]/@href').extract()# uids = re.findall('uid=(\d+)', ";".join(urls), re.S)uids = [item[0] for item in id_username_pair]followed_names = [item[1] for item in id_username_pair]ID = re.findall('(\d+)/follow', response.url)[0]for uid, followed_name in zip(uids,followed_names):relationships_item = RelationshipsItem()relationships_item['crawl_time'] = int(time.time())relationships_item["fan_id"] = IDrelationships_item["followed_id"] = uidrelationships_item["_id"] = ID + '-' + uidrelationships_item['fan_name'] = response.meta['item']['nick_name']relationships_item['followed_name'] = followed_nameyield relationships_itemdef parse_fans(self, response):"""抓取粉丝列表"""# 如果是第1页,一次性获取后面的所有页if response.url.endswith('page=1'):all_page = re.search(r'/> 1/(\d+)页</div>', response.text)if all_page:all_page = all_page.group(1)all_page = int(all_page)for page_num in range(2, all_page + 1):page_url = response.url.replace('page=1', 'page={}'.format(page_num))yield Request(page_url, self.parse_fans, dont_filter=True, meta=response.meta)selector = Selector(response)id_username_pair = re.findall('<a href="https://weibo.cn/u/(\d+)">(.{0,30})</a>', response.text)# urls = selector.xpath('//a[text()="关注他" or text()="关注她" or text()="取消关注"]/@href').extract()# uids = re.findall('uid=(\d+)', ";".join(urls), re.S)uids = [item[0] for item in id_username_pair]fan_names = [item[1] for item in id_username_pair]ID = re.findall('(\d+)/fans', response.url)[0]for uid, fan_name in zip(uids,fan_names):relationships_item = RelationshipsItem()relationships_item['crawl_time'] = int(time.time())relationships_item["fan_id"] = uidrelationships_item["followed_id"] = IDrelationships_item["_id"] = uid + '-' + IDrelationships_item['fan_name'] = fan_namerelationships_item['followed_name'] = response.meta['item']['nick_name']yield relationships_itemif __name__ == "__main__":process = CrawlerProcess(get_project_settings())process.crawl('weibo_spider')process.start()只爬取用户资料的weibo_userinfo.py代码如下:

# -*- coding: utf-8 -*-

import re

from lxml import etree

from scrapy import Spider

from scrapy.crawler import CrawlerProcess

from scrapy.selector import Selector

from scrapy.http import Request

from scrapy.utils.project import get_project_settings

from sina.items import TweetsItem, InformationItem, RelationshipsItem, CommentItem

from sina.spiders.utils import time_fix, extract_weibo_content, extract_comment_content

import time

import jsonclass WeiboUserinfoSpider(Spider):name = "weibo_userinfo"base_url = "https://weibo.cn"def start_requests(self):with open('start_uids.json', 'r') as f:data = json.load(f)start_uids = data["start_uids"]# start_uids = [# '6505979820', # 我# #'1699432410' # 新华社# ]for uid in start_uids:yield Request(url="https://weibo.cn/%s/info" % uid, callback=self.parse_information)def parse_information(self, response):""" 抓取个人信息 """information_item = InformationItem()information_item['crawl_time'] = int(time.time())selector = Selector(response)information_item['_id'] = re.findall('(\d+)/info', response.url)[0]text1 = ";".join(selector.xpath('body/div[@class="c"]//text()').extract()) # 获取标签里的所有text()nick_name = re.findall('昵称;?[::]?(.*?);', text1)gender = re.findall('性别;?[::]?(.*?);', text1)place = re.findall('地区;?[::]?(.*?);', text1)briefIntroduction = re.findall('简介;?[::]?(.*?);', text1)birthday = re.findall('生日;?[::]?(.*?);', text1)sex_orientation = re.findall('性取向;?[::]?(.*?);', text1)sentiment = re.findall('感情状况;?[::]?(.*?);', text1)vip_level = re.findall('会员等级;?[::]?(.*?);', text1)authentication = re.findall('认证;?[::]?(.*?);', text1)labels = re.findall('标签;?[::]?(.*?)更多>>', text1)if nick_name and nick_name[0]:information_item["nick_name"] = nick_name[0].replace(u"\xa0", "")if gender and gender[0]:information_item["gender"] = gender[0].replace(u"\xa0", "")if place and place[0]:place = place[0].replace(u"\xa0", "").split(" ")information_item["province"] = place[0]if len(place) > 1:information_item["city"] = place[1]if briefIntroduction and briefIntroduction[0]:information_item["brief_introduction"] = briefIntroduction[0].replace(u"\xa0", "")if birthday and birthday[0]:information_item['birthday'] = birthday[0]if sex_orientation and sex_orientation[0]:if sex_orientation[0].replace(u"\xa0", "") == gender[0]:information_item["sex_orientation"] = "同性恋"else:information_item["sex_orientation"] = "异性恋"if sentiment and sentiment[0]:information_item["sentiment"] = sentiment[0].replace(u"\xa0", "")if vip_level and vip_level[0]:information_item["vip_level"] = vip_level[0].replace(u"\xa0", "")if authentication and authentication[0]:information_item["authentication"] = authentication[0].replace(u"\xa0", "")if labels and labels[0]:information_item["labels"] = labels[0].replace(u"\xa0", ",").replace(';', '').strip(',')request_meta = response.metarequest_meta['item'] = information_itemyield Request(self.base_url + '/u/{}'.format(information_item['_id']),callback=self.parse_further_information,meta=request_meta, dont_filter=True, priority=1)def parse_further_information(self, response):text = response.textinformation_item = response.meta['item']tweets_num = re.findall('微博\[(\d+)\]', text)if tweets_num:information_item['tweets_num'] = int(tweets_num[0])follows_num = re.findall('关注\[(\d+)\]', text)if follows_num:information_item['follows_num'] = int(follows_num[0])fans_num = re.findall('粉丝\[(\d+)\]', text)if fans_num:information_item['fans_num'] = int(fans_num[0])request_meta = response.metarequest_meta['item'] = information_itemyield information_itemif __name__ == "__main__":process = CrawlerProcess(get_project_settings())process.crawl('weibo_userinfo')process.start()除此之外,另外用一个程序专门指定爬取的范围

import os

import json

import pymongoclass SpiderControl:def __init__(self, init_uid):self.init_uid = init_uidself.set_uid([self.init_uid])self.dbclient = pymongo.MongoClient(host='localhost', port=27017)self.db = self.dbclient['Sina']self.info_collection = self.db['Information']self.relation_collection = self.db['Relationships']self.label_collection = self.db['Label']def set_uid(self, uid_list):uid_data = {'start_uids': uid_list}with open('start_uids.json','w') as f:json.dump(uid_data, f)def add_filter(list1,list2):return list(set(list1+list2))if __name__ == '__main__':init_id = '6505979820'sc = SpiderControl(init_id)try:sc.label_collection.insert({'_id':init_id,'label':'center'})except:print('已有该数据')# 这里第一次运行weibo_spider,第一次爬取粉丝和关注info_filter = [init_id] # 标记已经爬过的idrelation_filter = [init_id] # 标记已经爬过的idcondition_fan0 = {'followed_id':init_id} # 主人公的粉丝condition_follow0 = {'fan_id':init_id} # 主人公的关注fan0_id = [r['fan_id'] for r in sc.relation_collection.find(condition_fan0)]follow0_id = [r['followed_id'] for r in sc.relation_collection.find(condition_follow0)]# 打标签for id in follow0_id:try:sc.label_collection.insert_one({'_id':id,'label':'follow0'}) # 没有就添加except:sc.label_collection.update_one({'_id':id},{'$set':{'label':'follow0'}}) # 有就更新for id in fan0_id:try:sc.label_collection.insert_one({'_id':id,'label':'fan0'})except:sc.label_collection.update_one({'_id':id},{'$set':{'label':'fan0'}})fans_follows0 = list(set(fan0_id) | set(follow0_id)) # 取关注和粉丝的并集# 运行爬虫weibo_userinfo获得粉丝和关注用户的信息info_filter = add_filter(info_filter, fans_follows0)condition_famous = {'fans_num':{'$gt':500}} # 选取粉丝小于500的账号famous_id0 = [r['_id'] for r in sc.info_collection.find(condition_famous)]relation_filter = add_filter(relation_filter, famous_id0)uid_set = list(set(fans_follows0) - set(relation_filter))print('id数量{}'.format(len(uid_set)))sc.set_uid(uid_set)# 运行爬虫weibo_spider获得这些人的粉丝关注网络condition_fan1 = {'followed_id': {'$in':fans_follows0}} # 一级好友的粉丝condition_follow1 = {'fan_id': {'$in':fans_follows0}} # 一级好友的关注fan1_id = [r['fan_id'] for r in sc.relation_collection.find(condition_fan1)]follow1_id = [r['followed_id'] for r in sc.relation_collection.find(condition_follow1)]fan1_id = list(set(fan1_id)-set(fans_follows0)) # 去除0级好友follow1_id = list(set(follow1_id) - set(fans_follows0)) # 去除0级好友# 打标签for id in follow1_id:try:sc.label_collection.insert_one({'_id': id, 'label': 'follow1'}) # 没有就添加except:sc.label_collection.update_one({'_id': id}, {'$set': {'label': 'follow1'}}) # 有就更新for id in fan1_id:try:sc.label_collection.insert_one({'_id': id, 'label': 'fan1'})except:sc.label_collection.update_one({'_id': id}, {'$set': {'label': 'fan1'}})fans_follows1 = list(set(fan1_id) & set(follow1_id)) # 取关注和粉丝的交集uid_set = list(set(fans_follows1) - set(info_filter))print('id数量{}'.format(len(uid_set)))sc.set_uid(uid_set)# 运行spider_userinfoinfo_filter = add_filter(info_filter, fans_follows1)运行情况

第一次爬取,筛选掉大V后(粉丝大于500)有60个账号。在这60个里面再运行一次,爬取人物关系,这60个号总共有3800左右的粉丝和4300左右的关注数,取并集大概6400个账号,先从这些账号里面分析一波

可视化使用pyecharts

先出一张图

??!!woc这密密麻麻的什么玩意,浏览器都要卡成狗了

赶紧缩小一波数据,在关注和粉丝里面取交集,把数据量缩小到800

这回就舒服多了,其中,不同颜色代表了不同的类别(我分的)。

不同的颜色分别对应了:

- 我(红色)

- 我的关注

- 我的粉丝

- 我的好友(我的关注+我的粉丝)的粉丝和关注

- 大V

通过找到和我好友连接比较多的账号,就可以找到隐藏好友了

举个例子

这个选中的是qqd,很显然和我的好友有大量的关联,与这些泡泡相连的大概率就是我的同学,比如这位:

果然这样就发现了好多我的同学哈哈哈。

代码写的贼乱,反正能跑了(狗头保命),我放到GitHub上了:https://github.com/buaalzm/WeiboSpider/tree/relation_spider